Are your marketing campaigns truly driving new growth, or would those conversions have happened anyway? In the age of data-driven marketing, this question is crucial. The answer lies in incrementality testing, a methodical way to measure the real value generated by your marketing efforts.

By isolating the effects of your campaigns through carefully designed experiments, incrementality testing helps you find out what’s actually moving the needle versus what would have happened anyway. In this guide, we’ll define incrementality testing and explain why it matters, then walk through how to conduct a test, calculate lift, and apply this approach in today’s privacy-first world.

What Is Incrementality Testing (& Why Does It Matter)?

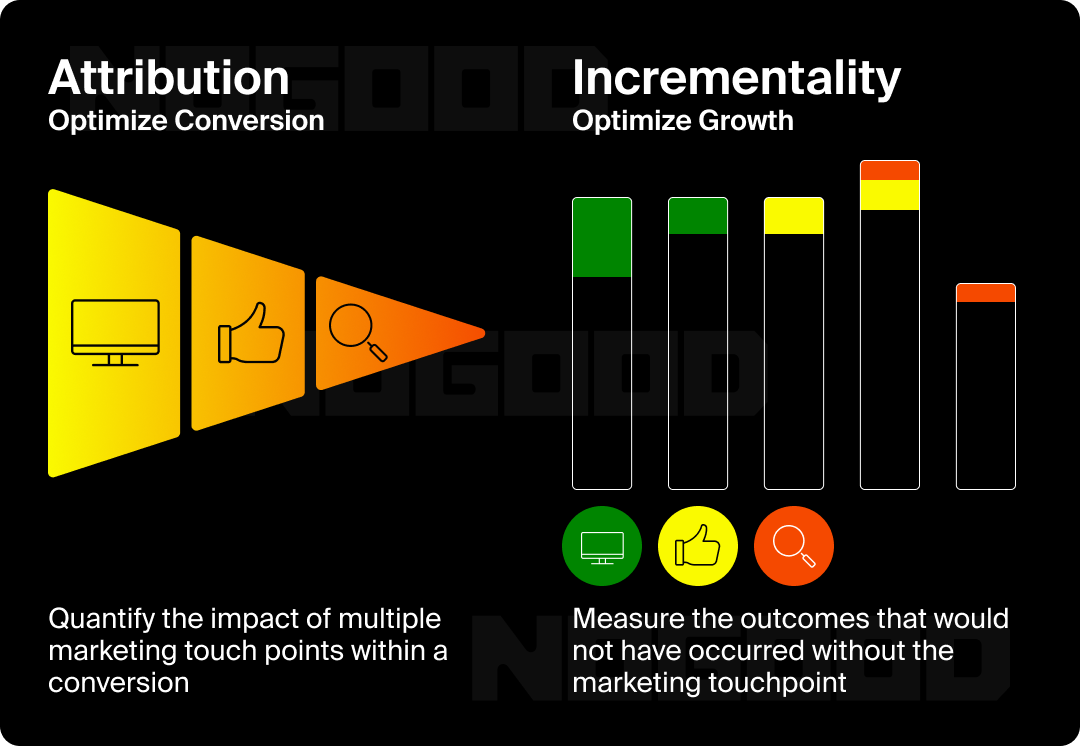

Incrementality testing is an experimental approach to marketing measurement that quantifies the true incremental impact of a campaign or channel. In simple terms, it answers: “How many conversions or how much revenue did this marketing campaign produce that would not have occurred otherwise?” Unlike basic analytics that report raw numbers or attribution that assigns credit, incrementality testing focuses on causation and separates the real signal from the noise.

Why is this important? You might be spending money to reach customers who would have converted even without your ads. Incrementality testing reveals the truth by comparing results between people who see your marketing and a similar group who don’t. The difference in outcomes is the extra value attributable to your campaign.

This clarity is vital for optimizing budget allocation and proving return on investment (ROI). After all, if a campaign isn’t truly incremental, its budget—your organization’s dollars—could be better spent elsewhere.

Beyond proving ROI, incrementality testing builds confidence in your strategy. When you can point to hard evidence of incremental conversions, you’re better equipped to justify your marketing spend to stakeholders.

It also encourages a culture of testing by pushing your team to move beyond assumptions and gut feelings to evidence-based decisions. In short, incrementality testing is about understanding cause and effect in marketing, so you can invest in what truly works and cut what doesn’t.

Incrementality Testing vs. A/B Testing

Incrementality testing is different from classic A/B testing. In an A/B test, you compare two variations of a campaign element, such as two ad creatives or two landing pages, to see which performs better. It’s about optimization: A/B tests help you pick the better option within a campaign.

Incrementality testing, in contrast, compares running the campaign versus not running it at all. Instead of two variations, you split your audience into a test group and a control group. The question is not “Which version works better?” but “Does this tactic work at all beyond what would happen naturally?”

Both approaches have their place—use incrementality tests to validate if a marketing strategy is worth pursuing in the first place, and use A/B tests to fine-tune the execution once you know it’s worthwhile.

Incrementality Testing vs. Attribution Modeling

Attribution modeling assigns credit for conversions to different touchpoints (e.g. which channel or ad “caused” a sale). Models like last-click or multi-touch help map the customer journey, but they don’t prove causality. Just because someone clicked an ad before purchasing doesn’t mean the ad caused the purchase.

Incrementality testing isolates causality by using a control group. For example, attribution might credit 100 sales to a Facebook campaign, but an incrementality test could reveal that 80 of those sales would have happened anyway without the ads, which means that only 20 were truly incremental. This experiment-driven insight prevents overestimating a channel’s value and can save you from pouring budget into tactics that aren’t actually driving new results.
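The arithmetic in this example can be written out as a tiny calculation. This is only a sketch. It assumes the 80 “would have happened anyway” sales were already estimated from a control group. `incrementality_factor` is a hypothetical helper name, not a metric reported by any ad platform.

```python
def incrementality_factor(attributed: int, baseline: int) -> float:
    """Share of attributed conversions that were truly incremental.

    Hypothetical helper for illustration: `baseline` is the number of
    attributed conversions that a control group suggests would have
    happened without the ads.
    """
    if attributed <= 0:
        raise ValueError("attributed conversions must be positive")
    incremental = attributed - baseline
    return incremental / attributed


# Figures from the example: 100 attributed sales, 80 of which
# would have happened anyway.
factor = incrementality_factor(attributed=100, baseline=80)
print(f"Incremental share: {factor:.0%}")
```

A factor well below 100% signals that attribution is overstating the channel’s causal contribution.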

Think of attribution as accounting for conversions and incrementality testing as proving what caused them. Attribution models provide a modeled estimate of each channel’s role, whereas incrementality testing provides hard evidence through experimentation.

How Does Incrementality Testing Work?

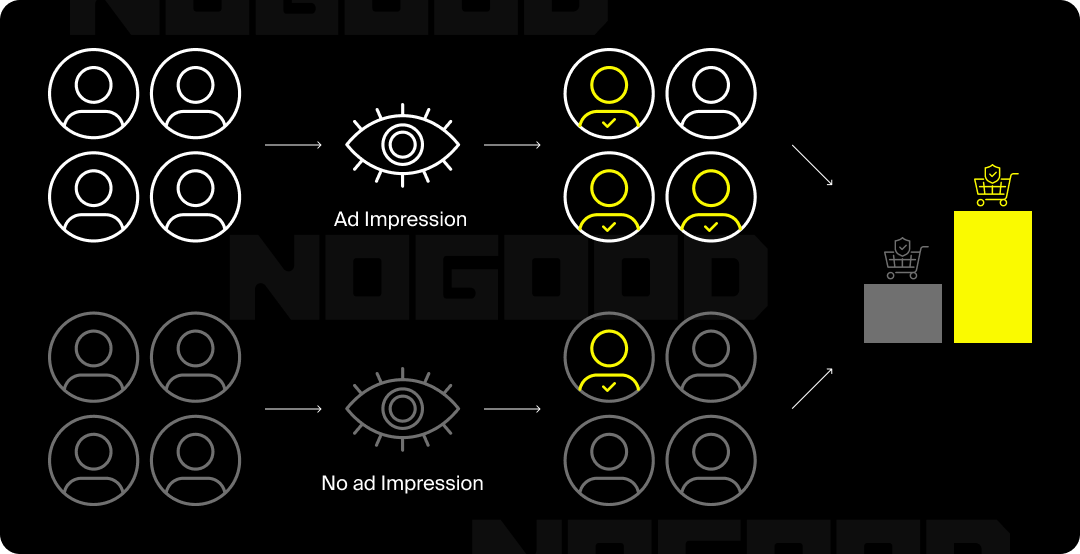

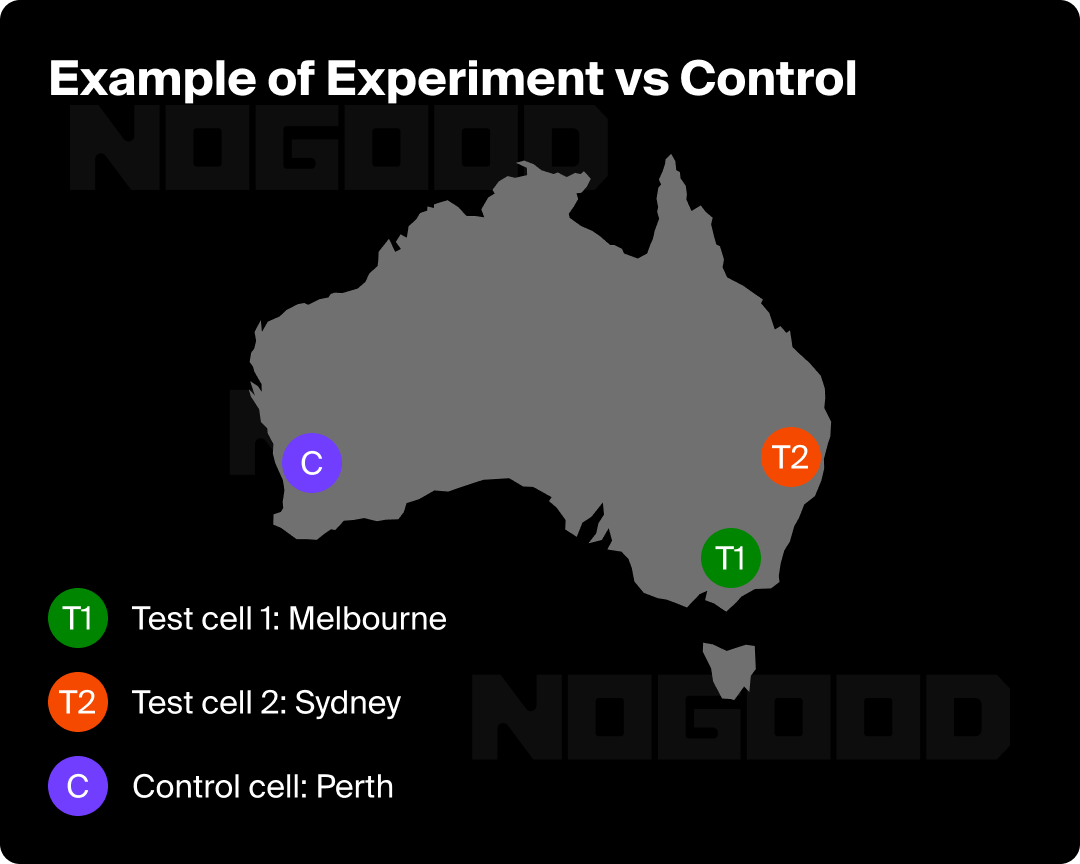

At its core, an incrementality test splits your audience into two groups: a test group that is exposed to the marketing campaign, and a control group that is not. Assignment to the groups should be random, so the groups are statistically comparable even if they differ in size.

You can implement this in different ways. For example, you might hold out 10% of your user base as a control, or run a geo-based test where certain regions or markets serve as controls by pausing the campaign there. The key is that the only meaningful difference between the groups is whether they saw the marketing or not.
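For a user-level holdout, one common way to get a split that is both random and stable is to hash each user ID into a bucket. This sketch assumes you have a persistent user identifier. `assign_group`, the experiment label, and the 10% default are illustrative and are not part of any ad platform’s API.

```python
import hashlib


def assign_group(user_id: str, experiment: str, holdout_pct: float = 0.10) -> str:
    """Assign a user to 'control' or 'test' (hypothetical helper).

    Hashing the experiment name together with the user ID gives each user a
    stable pseudo-random bucket, so repeat visits land in the same group.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash to one of 10,000 buckets (0-9999).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "control" if bucket < holdout_pct * 10_000 else "test"


# Sanity check: roughly 10% of simulated users should land in control.
groups = [assign_group(f"user-{i}", "spring-campaign") for i in range(100_000)]
print(f"Control share: {groups.count('control') / len(groups):.3f}")
```

Including the experiment name in the hash keeps each user in the same group for the whole test. A new experiment name reshuffles everyone, so the same people aren’t always held out.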

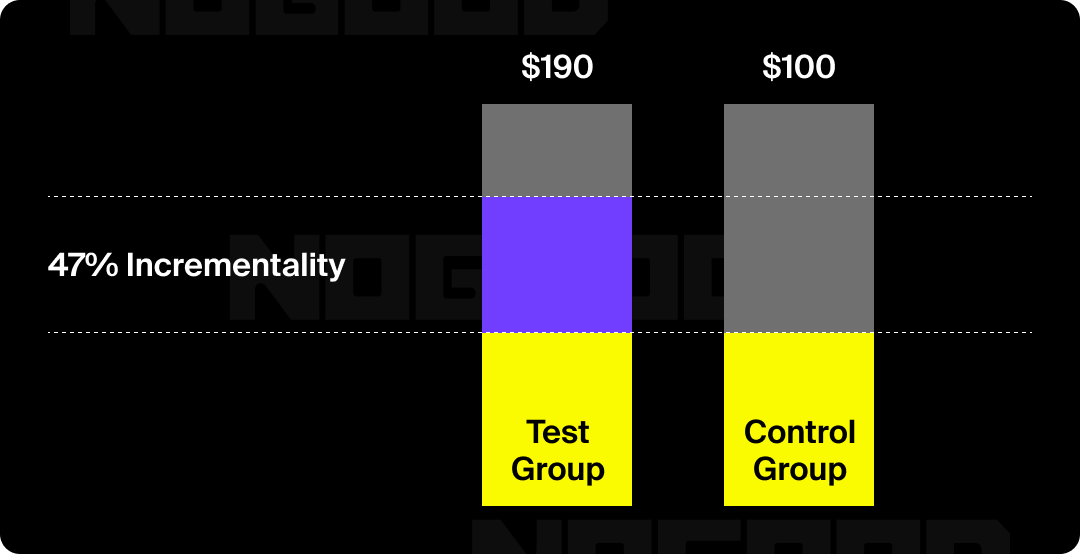

Once the campaign is running, monitor key metrics for each group, such as conversions, sales revenue, or any outcome KPI you care about. Choose metrics you can measure for both groups: ad-level metrics like click-through rate don’t exist for a control group that never sees the ads. After the test period, compare the outcomes. The control group’s results show you the baseline, and the test group’s results show the baseline plus the campaign’s impact. If the test group outperforms the control group (comparing rates rather than raw totals when the groups differ in size), the gap between them represents the campaign’s incremental effect.

How to Calculate Incremental Lift

To quantify the campaign’s impact, calculate the lift it achieved. This can be expressed in absolute terms or percentage terms:

- If 5% of the test group converted versus 4% of the control group, that 1-percentage-point difference is the absolute lift. Divided by the control’s 4% baseline, it equals a 25% relative lift.

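- If the two groups are the same size and the test group had 5,000 conversions while the control had 4,000, that’s 1,000 extra conversions attributable to the campaign (a 25% lift). If the groups differ in size, compare conversion rates instead of raw counts.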

You can also measure incremental revenue the same way.
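Both lift calculations fit in a few lines of code. This sketch uses the figures from the examples, plus an assumed 100,000 users per group, which makes the 5%/4% rates and the 5,000/4,000 counts consistent. `GroupResult` and `incremental_lift` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    users: int        # people in the group
    conversions: int  # conversions observed in the group

    @property
    def rate(self) -> float:
        return self.conversions / self.users


def incremental_lift(test: GroupResult, control: GroupResult) -> dict:
    """Absolute lift, relative lift, and incremental conversions.

    Rates are compared instead of raw counts, so the result stays valid
    when the groups differ in size (for example, a 10% holdout).
    """
    absolute = test.rate - control.rate   # percentage-point difference
    relative = absolute / control.rate    # lift relative to the baseline
    # Conversions the test group would have had at the control's rate.
    expected_baseline = control.rate * test.users
    incremental = test.conversions - expected_baseline
    return {"absolute": absolute, "relative": relative, "incremental": incremental}


result = incremental_lift(GroupResult(100_000, 5_000), GroupResult(100_000, 4_000))
print(f"{result['absolute']:.1%} absolute, {result['relative']:.0%} relative, "
      f"{result['incremental']:,.0f} incremental conversions")
# 1.0% absolute, 25% relative, 1,000 incremental conversions
```

The function uses rates, so it also handles an uneven split. For example, with 90,000 test users converting at 5% and 10,000 control users converting at 4%, it reports 900 incremental conversions. For incremental revenue, pass revenue totals in place of conversion counts.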

- A positive lift confirms the campaign had a real impact.

- A near-zero lift means it made no meaningful difference.

- A negative lift (with the control outperforming the test group) indicates the campaign had no impact or even a negative effect, depending on whether the gap is larger than random noise.

It’s crucial to use a statistical significance test to check that any observed lift is unlikely to be just random chance. With a large enough sample and a clear difference between test and control, you can be confident in the result.
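A common significance check for conversion rates is the two-proportion z-test. The function below is a minimal standard-library sketch. It assumes random assignment, independent users who each convert at most once, and conversion counts large enough for the normal approximation to hold. Your testing platform may use a different method.

```python
import math


def two_proportion_z_test(conv_t: int, n_t: int,
                          conv_c: int, n_c: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    Uses the pooled conversion rate under the null hypothesis of no lift.
    Returns (z statistic, two-sided p-value).
    """
    p_t, p_c = conv_t / n_t, conv_c / n_c
    pooled = (conv_t + conv_c) / (n_t + n_c)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    z = (p_t - p_c) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same 5% vs. 4% rates at two different sample sizes.
small = two_proportion_z_test(100, 2_000, 80, 2_000)
large = two_proportion_z_test(5_000, 100_000, 4_000, 100_000)
print(f"2,000 per group:   z = {small[0]:.2f}, p = {small[1]:.3f}")
print(f"100,000 per group: z = {large[0]:.2f}, p = {large[1]:.2g}")
```

With 2,000 users per group, the p-value is about 0.13, which is not significant at the usual 0.05 level. With 100,000 users per group, it is far below 0.001. The relative lift is identical in both cases. Only the sample size changes the conclusion.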

Incrementality in a Privacy-First World

In today’s privacy-first environment, incrementality testing has become even more critical. With browsers increasingly restricting third-party cookies and regulations like GDPR and CCPA limiting user-level tracking, marketers have less data to directly attribute conversions to ads. Traditional multi-touch attribution is getting harder as more users opt out of tracking.

Incrementality testing offers a solution that respects privacy because it doesn’t need to follow individuals across their journey. Instead, it compares outcomes for aggregated groups.

By measuring group lift rather than individual paths, incrementality experiments can fill in insight gaps when tracking is limited. Even if you can’t see every step a customer took, you can still ask, “Did the group exposed to ads perform better than the group that wasn’t?”

It also complements broader measurement approaches like marketing mix modeling (MMM). While MMM estimates channel impact using historical data, incrementality experiments provide direct causal validation to confirm those model-driven insights. This makes incrementality testing a powerful tool in the era of restricted data. It provides dependable evidence of marketing impact without violating privacy, allowing you to continue optimizing your spend in a landscape where raw data is scarcer.

Best Practices for Incrementality Testing

- Define Clear Goals & KPIs: Set a specific hypothesis and success metric. Knowing exactly what question you’re trying to answer will guide the test design.

- Ensure Proper Randomization: Assign users or regions to test and control groups randomly to make them as similar as possible. This avoids bias and makes your results credible.

- Control External Factors: Try not to run tests during major events or seasonality spikes that could skew results—if you must, ensure both test and control groups are equally exposed to them.

- Give It Enough Time: Run the test for long enough to collect sufficient data. Too short and you might miss the effect—too long and external changes might creep in. Plan a duration that balances these considerations.

- Iterate & Learn: Treat incrementality testing as an ongoing process. Use insights from one test to refine your campaigns and run follow-up experiments. Continuous testing means you’re always validating and improving your marketing strategy.

- Leverage Platform Tools: Many ad platforms (Facebook, Google, etc.) offer built-in lift test or experimentation features. These can simplify the setup and analysis of incrementality tests, so make use of them when available.

Common Challenges & Things to Watch Out For

- Sample Size Matters: If your test groups are too small, you might not detect a lift, even if one exists. Estimate the sample size you need before launch so the test has enough statistical power to detect the smallest lift you care about.

- Audience Overlap: Make sure your control group truly isn’t exposed to the campaign. Any “leakage” will dilute the differences and muddy your results.

- External Noise: Be mindful of factors like seasonality or competitor campaigns that could impact performance. Random assignment spreads these effects across both groups, but they can still hit test and control unevenly, especially in geo-based tests. Try to account for them, or acknowledge them when analyzing results.

- Technical Complexity: Setting up incrementality experiments can be technical. You may need coordination between marketing platforms, analytics tools, or help from data analysts. Start simple and scale up as you learn.

- Short-Term Sacrifice for Long-Term Gain: Holding out a control group means intentionally not marketing to some potential customers during the test. It may feel risky, but it’s necessary for clean results. The payoff? Confidence that your full-budget campaigns are truly worth the spend.
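Leakage into the control group shrinks the measured gap in a predictable way. The function below is a deliberately simplified, hypothetical model. It assumes everyone in the test group is reached, each exposed person gains the same increase in conversion probability, and a share of the control group is reached by mistake.

```python
def observed_lift(true_lift: float, control_leakage: float) -> float:
    """Absolute lift you would measure when some control users are exposed.

    Simplified model for illustration: the test group is fully exposed, so
    its rate rises by `true_lift`; the control group's rate rises by
    `true_lift * control_leakage` because that share was exposed too.
    """
    if not 0 <= control_leakage <= 1:
        raise ValueError("control_leakage must be between 0 and 1")
    return true_lift * (1 - control_leakage)


# A true 1-percentage-point lift under increasing leakage.
for leak in (0.0, 0.2, 0.5):
    print(f"{leak:.0%} leakage -> measured lift {observed_lift(0.01, leak):.2%}")
```

Under these assumptions, 20% leakage turns a true 1-point lift into a measured 0.8 points. The smaller measured gap then needs an even larger sample to reach significance.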

Final Thoughts

Incrementality testing offers a clear answer to the big question: “Is our marketing truly making a difference?” By scientifically isolating the impact of your campaigns, it provides insight that standard reports and attribution models alone can’t match.

In an era of tight budgets and strict privacy standards, this approach has gone from “nice to have” to “must have”. Marketers who embrace incrementality testing can make data-backed decisions with confidence, optimize their spend for maximum impact, and prove the true value of their work.

Ultimately, incrementality testing ensures that every marketing move is grounded in evidence.