Imagine your website as a library, with your homepage as the entrance.

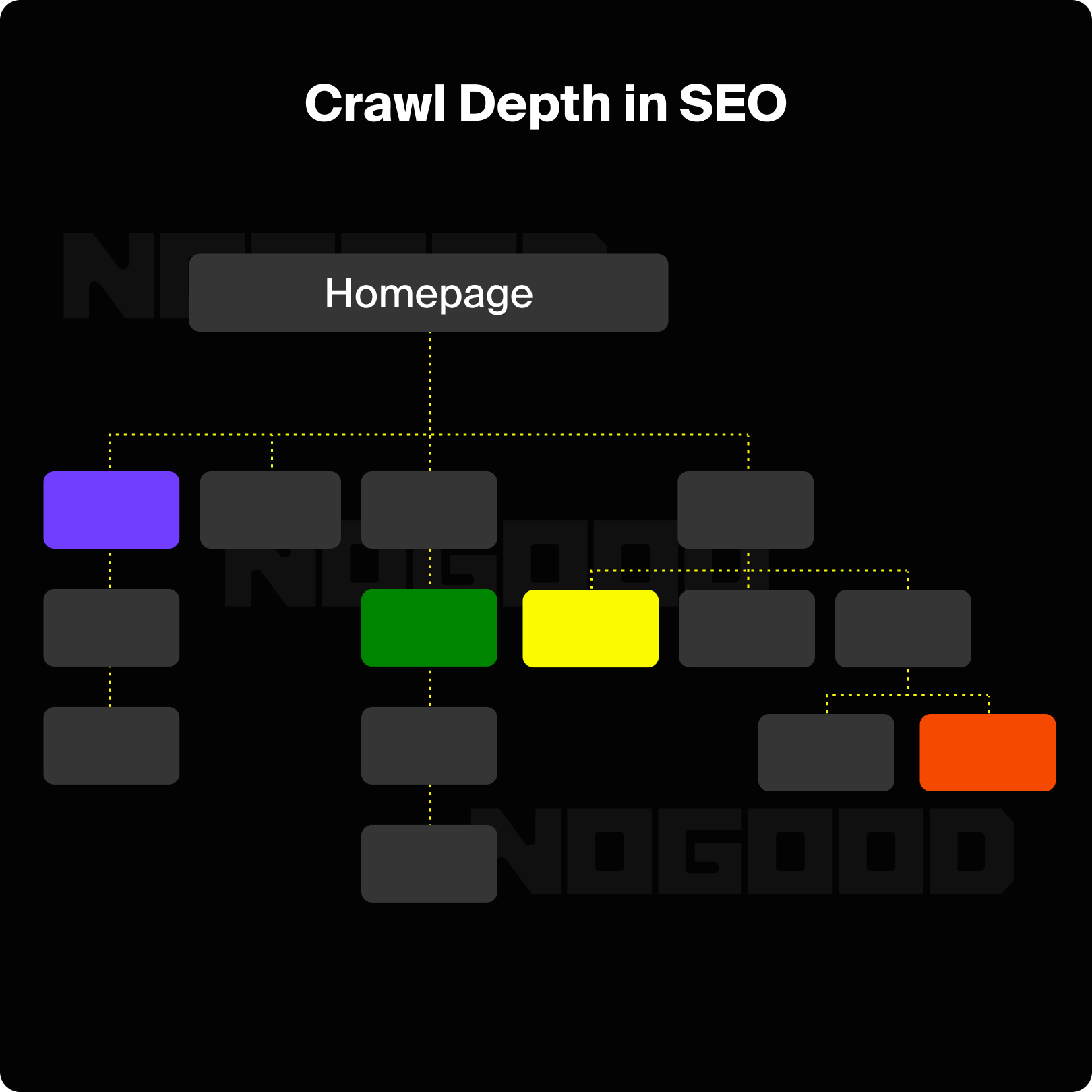

Crawl depth measures how many “aisles” or clicks a visitor (or search engine bot) has to navigate from the entrance to find a specific book (webpage).

In simple terms, it’s the number of clicks needed to reach any given page from the homepage.
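To make the definition concrete, here is a minimal Python sketch that computes crawl depth with a breadth-first search over a site's internal links. It assumes you already have a link map (for example, exported from a crawler). The `crawl_depths` function and the URLs are hypothetical, for illustration only.

```python
from collections import deque


def crawl_depths(links: dict[str, list[str]], homepage: str) -> dict[str, int]:
    """Return the minimum number of clicks from `homepage` to every reachable page.

    `links` maps each URL to the internal URLs it links to (hypothetical
    crawler export). Breadth-first search visits pages in order of click
    distance, so the first time a page is seen gives its shortest depth.
    """
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit = fewest clicks
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each key links to the pages in its list
site = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/old-post"],
    "/services": ["/services/seo"],
}
print(crawl_depths(site, "/"))
# {'/': 0, '/blog': 1, '/services': 1, '/blog/page-2': 2, '/services/seo': 2, '/blog/old-post': 3}
```

Breadth-first search fits here because it explores pages one click "ring" at a time, so each recorded depth is the shortest path from the homepage. Pages missing from the result are not reachable through internal links at all.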

Pages with a shallower crawl depth (closer to the homepage) are easier to access and more likely to be crawled and indexed frequently by search engine bots.

This increased visibility from search engine crawlers can lead to better search rankings and more organic traffic.

In this article, we’ll cover four SEO strategies for optimizing your website’s crawl depth and three common crawl depth issues and solutions.

Table of Contents

- Why Crawl Depth Matters for SEO

- 4 Strategies to Optimize Crawl Depth

- 3 Common Crawl Depth Issues and Solutions

- Optimize Crawl Depth with Ongoing Maintenance

How Google Indexes Content: Why Crawl Depth Matters for SEO

Crawl depth is important for SEO because it impacts how well search engine bots can crawl, understand, and index your website.

Google indexes content through a process that begins with crawling, where Googlebot discovers new or updated web pages by following links and sitemaps.

Once a page is crawled, it undergoes indexing, during which Google analyzes its content and metadata to determine its relevance. Pages that meet quality criteria are added to the index and, therefore, can rank in search results, while those with “noindex” tags or low-quality content may be excluded.
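As a rough illustration of the "noindex" case, this Python sketch checks a page's HTML for a robots meta tag containing `noindex` (or `none`, which Google treats as noindex plus nofollow). The `has_noindex` helper is hypothetical and only inspects `<meta name="robots">` and `<meta name="googlebot">` tags; it does not check the `X-Robots-Tag` HTTP header, which can also keep a page out of the index.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if a robots meta tag asks search engines not to index the page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```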

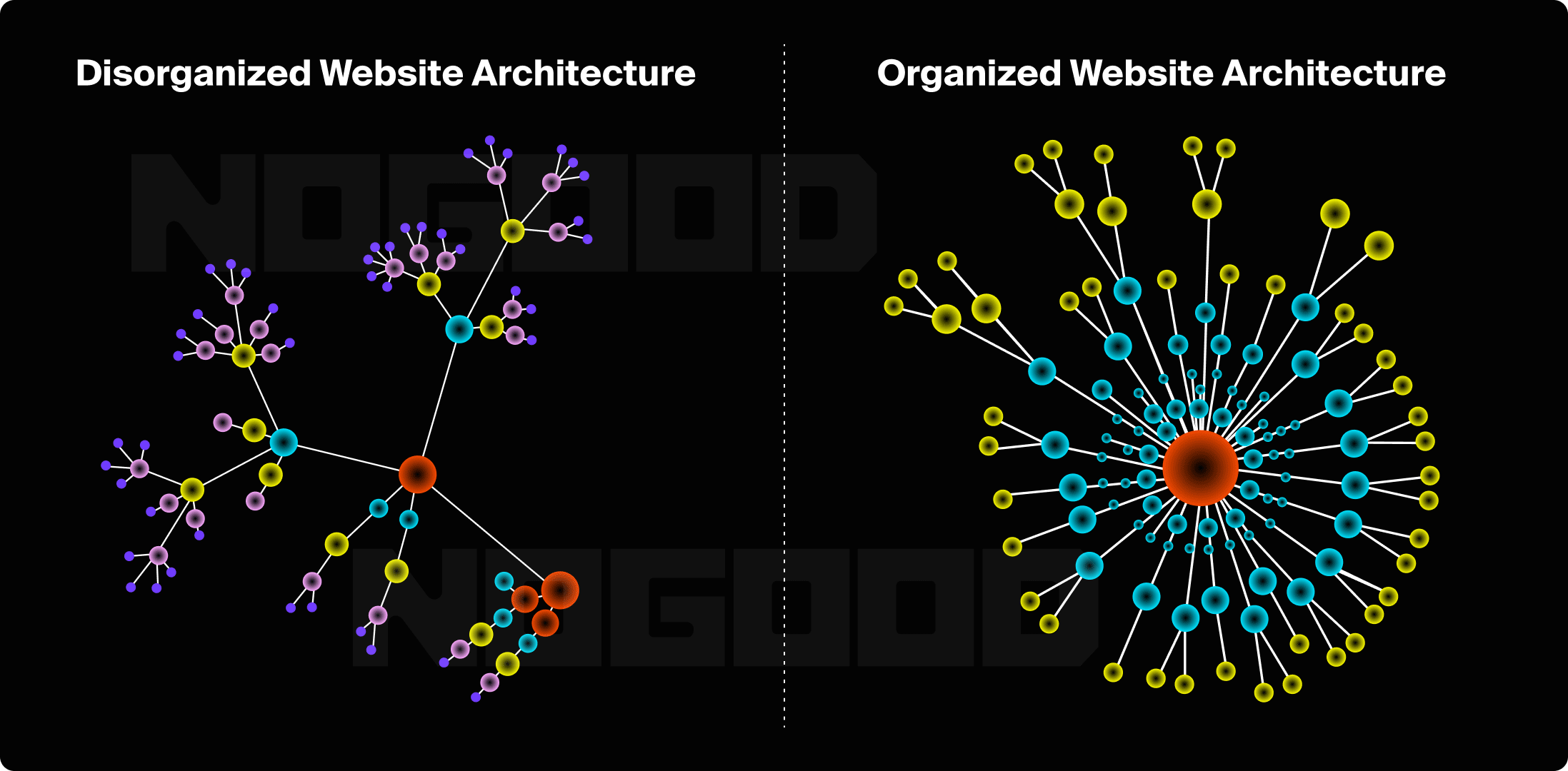

I recently ran a site audit and found that many blog posts on a website had become orphan pages, meaning no other internal pages linked to them. The same audit identified other pages that were up to seven clicks away from the homepage.

These pages were buried so deep in the website architecture that Google couldn’t find them.

A disorganized site structure like this means search engines can waste crawl budget on unimportant pages and stop crawling your site before they reach valuable content.

Websites should have a shallow crawl depth because pages closer to the homepage are easier for Google to index and rank in SERPs.

Shallow pages also typically receive more internal and external links, translating to more authority for those pages.

In short, optimizing your website for crawl depth improves your page rankings and creates a better user experience.


4 Strategies to Optimize Crawl Depth

1. Streamline Website Architecture

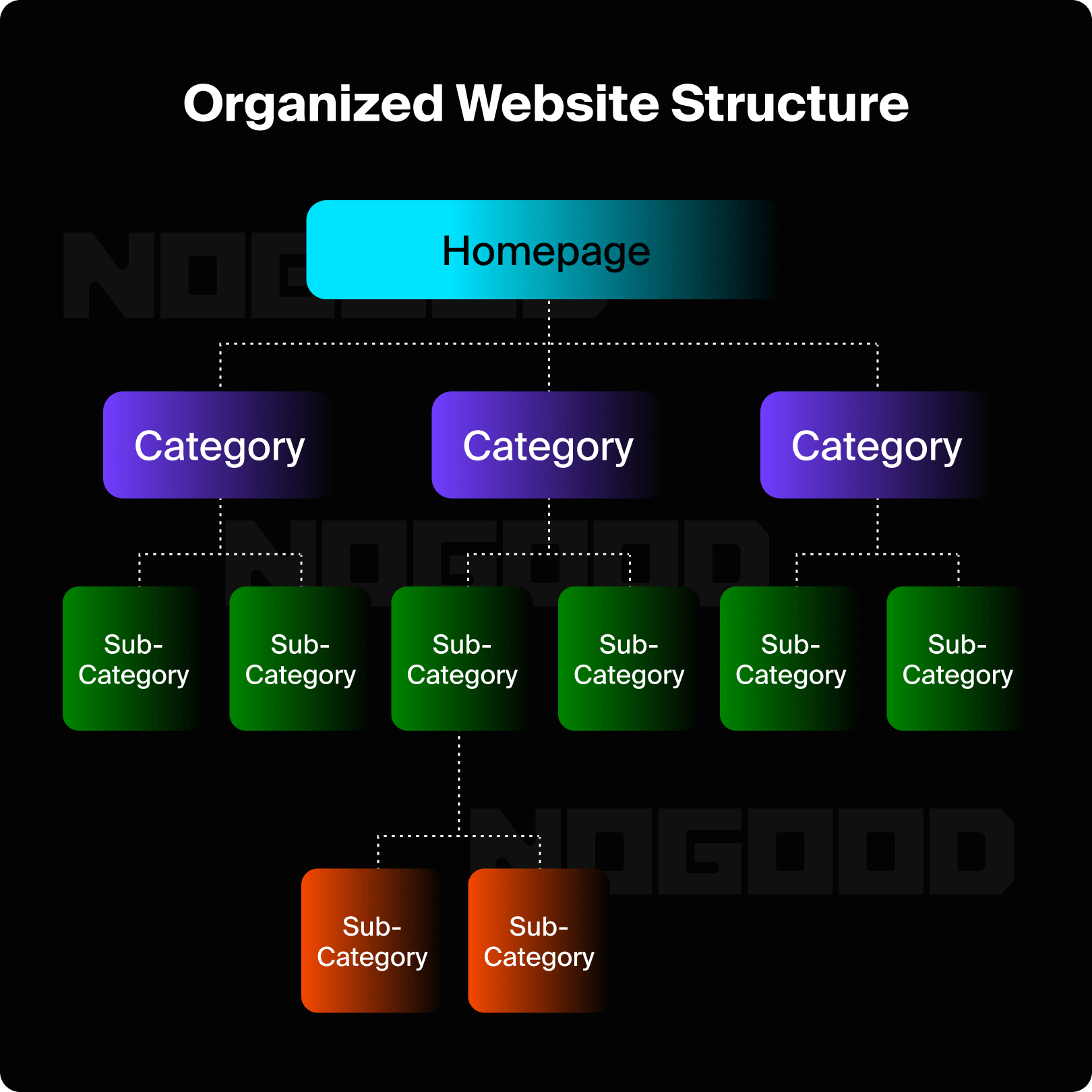

When optimizing your website layout, you should create a clear hierarchy with main categories, subcategories, and individual pages.

Aim for a flat (horizontal) website structure with no more than 3-4 levels.

Logical Website Hierarchy

Homepage > Main Categories > Subcategories > Individual Pages

A logical website hierarchy makes it easier for both users and search engines to navigate and discover content.

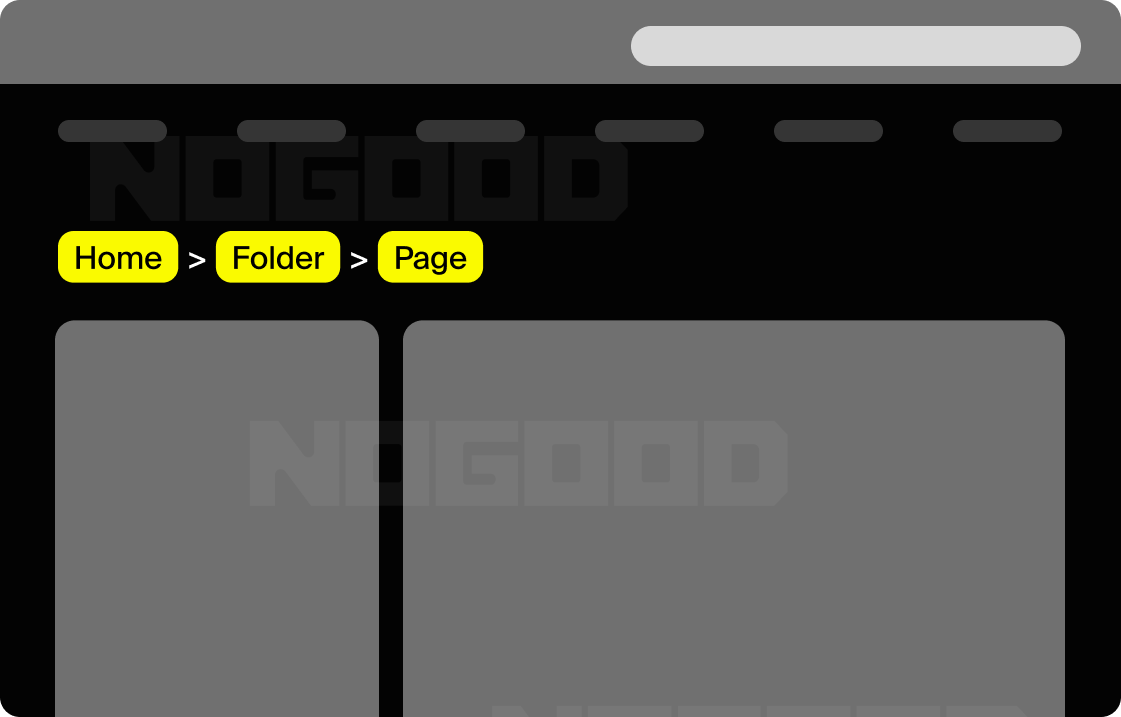

To further reinforce logical hierarchy, you can use breadcrumb navigation.

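Breadcrumbs help users and crawlers understand page relationships by showing the trail from the homepage to the current page (for example, Home > Blog > Post).

Adding breadcrumb navigation shows users where they are in your site's hierarchy and gives them a one-click path back to higher-level pages, helping them move around your site more easily.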
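If your CMS doesn't generate breadcrumb markup for you, one common approach is to describe the trail with schema.org `BreadcrumbList` structured data. This Python sketch builds that JSON-LD from a list of (name, URL) pairs. The `breadcrumb_jsonld` function and the example.com URLs are hypothetical, and real markup should be validated with Google's Rich Results Test.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs,
    ordered from the homepage down to the current page."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            # Positions start at 1 for the top of the trail
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


trail = [
    ("Home", "https://www.example.com/"),
    ("Blog", "https://www.example.com/blog/"),
    ("Crawl Depth Guide", "https://www.example.com/blog/crawl-depth/"),
]
# Embed the result in the page's HTML inside a JSON-LD script tag
print(f'<script type="application/ld+json">\n{breadcrumb_jsonld(trail)}\n</script>')
```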


2. Improve Internal Linking

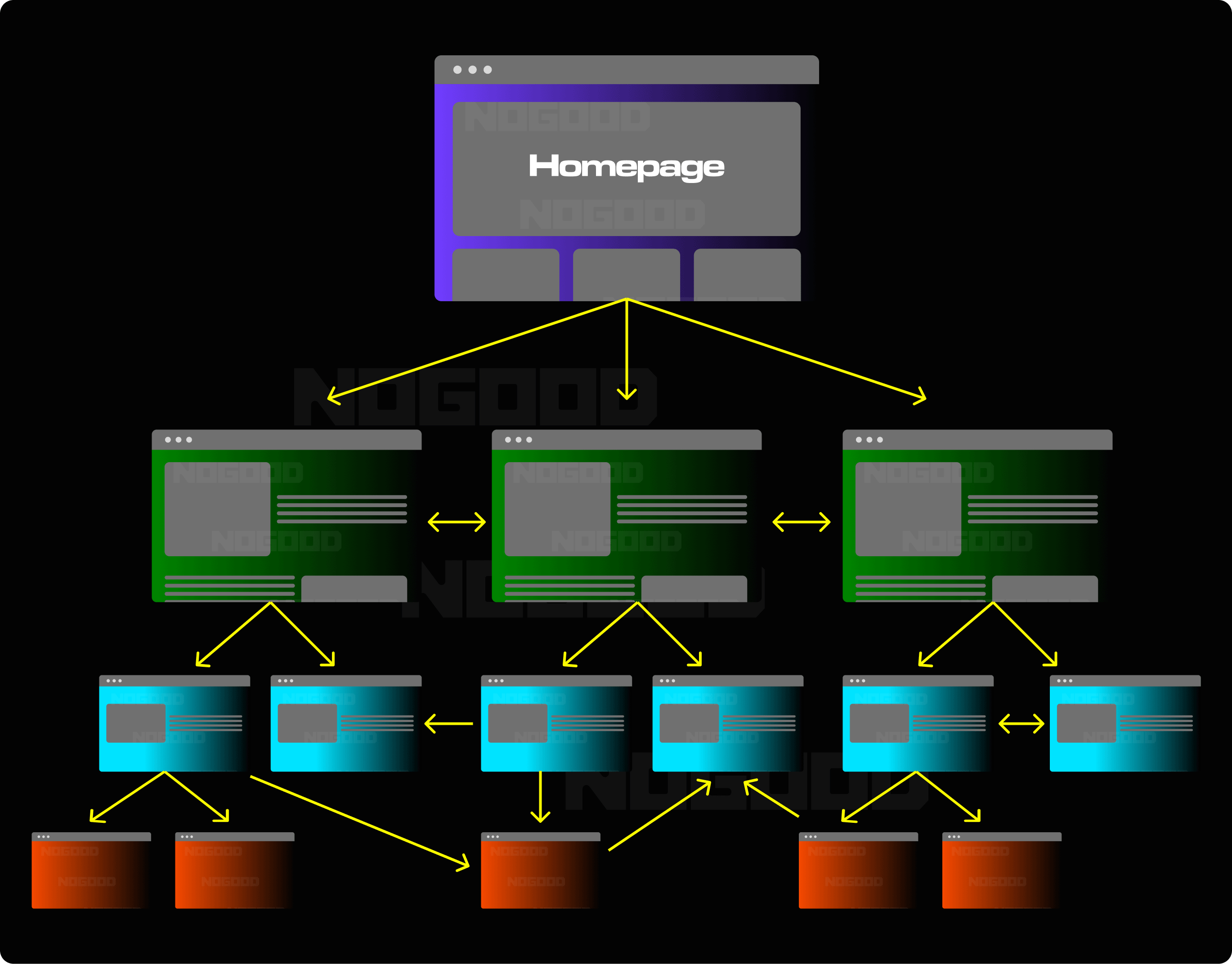

Internal links create a path for both users and search engines to navigate your website.

When a search engine crawls your page and discovers a link, it will follow it and begin to crawl that page. As the search engine crawler continues down your internal linking path, it will discover more valuable content that can be ranked in SERPs.

The more internal linking paths you create, the more easily your content can be discovered, giving it a chance to rank in SERPs.

Link strategically from high-authority pages, like the homepage, to important deeper pages, like product, service, or blog pages. Use descriptive anchor text with relevant keywords for each link to give context about the page you’re linking to.

A great internal linking strategy is to add links from your top-performing pages to less popular pages. This helps spread link equity more evenly across your website.
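To find candidates for these links, you can count how many distinct pages link to each URL. This Python sketch uses inbound internal link count as a rough proxy for link equity (an assumption: it ignores how strong the linking pages are). The `underlinked_pages` function and the link map are hypothetical.

```python
from collections import Counter


def underlinked_pages(links: dict[str, list[str]], limit: int = 3) -> list[tuple[str, int]]:
    """Rank pages by how many distinct internal pages link to them, fewest first."""
    inlinks: Counter[str] = Counter()
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:  # ignore self-links
                inlinks[target] += 1
    all_pages = set(links) | set(inlinks)
    # Sort by inbound count, then URL, so ties are listed predictably
    ranked = sorted(all_pages, key=lambda page: (inlinks[page], page))
    return [(page, inlinks[page]) for page in ranked[:limit]]


# Hypothetical link map: each key links to the pages in its list
site = {
    "/": ["/blog", "/services", "/blog/top-post"],
    "/blog": ["/", "/blog/top-post", "/blog/niche-post"],
    "/services": ["/", "/blog/top-post"],
    "/blog/top-post": ["/"],
    "/blog/niche-post": ["/"],
}
print(underlinked_pages(site))
# [('/blog', 1), ('/blog/niche-post', 1), ('/services', 1)]
```

Pages at the top of this list are candidates for new links from your strongest pages, such as `/blog/top-post` in this example.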

3. Optimize XML Sitemaps

As you optimize and update the content on your website, you will need to update your sitemap.

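If you update your website regularly, keep your XML sitemap current as content changes and resubmit it through Google Search Console (for example, once a month) so Google can pick up your latest URLs.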

Your sitemap will act as a roadmap for your website, helping search engines understand the full scope of your content.
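If your CMS or SEO plugin doesn't generate a sitemap automatically, a basic one follows the sitemaps.org protocol: a `urlset` of `url` entries, each with a `loc` and an optional `lastmod`. Here is a minimal Python sketch using only the standard library; the `build_sitemap` function and the URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML from (absolute URL, last-modified date) pairs."""
    ET.register_namespace("", SITEMAP_NS)  # emit xmlns="..." without a prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/crawl-depth/", "2024-01-10"),
]))
```

Keeping `lastmod` accurate (updating it only when a page actually changes) gives search engines a useful signal about which URLs to recrawl.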

4. Enhance Page Speed

Search engines dedicate only a limited amount of time and resources to crawling a given website; this is referred to as crawl budget.

Fast page speed allows search engines to crawl your website quickly, meaning more of your website is discovered in a single crawl.
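A deliberately simplified model shows why speed matters: if a crawler fetched pages one at a time within a fixed time window, the number of pages it could reach would be the window divided by the average fetch time. Real crawl budget is more complex (Google describes it in terms of crawl capacity and crawl demand, and it fetches pages in parallel), so treat the hypothetical `pages_per_crawl` function and its numbers as illustration only.

```python
def pages_per_crawl(time_budget_s: float, avg_fetch_s: float) -> int:
    """Toy model: pages that fit into a fixed crawl window if fetched sequentially."""
    if avg_fetch_s <= 0:
        raise ValueError("avg_fetch_s must be positive")
    return int(time_budget_s // avg_fetch_s)


# Same hypothetical 60-second window, faster and faster pages
for fetch_time in (2.0, 1.0, 0.5):
    print(f"{fetch_time:.1f}s per page -> {pages_per_crawl(60, fetch_time)} pages per minute")
# 2.0s per page -> 30 pages per minute
# 1.0s per page -> 60 pages per minute
# 0.5s per page -> 120 pages per minute
```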

Many factors can reduce page performance, such as large files, limited bandwidth, slow server response times, missing caching, and bloated code.

3 Ways to Optimize Page Speed:

- Optimize Images: Make sure your image dimensions match the size at which the browser displays them. You can add an image inspection extension to your browser to view an image’s properties. If an image file is larger than its display size, the browser still downloads the full file and then scales it down, which wastes bandwidth and slows page loading. Right-sizing your images will also improve your Core Web Vitals scores.

- Use Browser Caching: Work with a developer or install a plugin on your CMS to cache static files on your website that aren’t updated often. This lets the browser store those files locally so they don’t need to be downloaded again every time someone visits a page on your site.

- Limit External Scripts: External scripts are page elements loaded from an external source, such as ads, CTA buttons, CMS plugins, or pop-ups. Each one adds extra requests and processing to the page load, so it’s a good idea to limit the number you use.
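To check image sizing at scale, you could compare each image's intrinsic width with the width it is displayed at. The Python sketch below assumes you have collected those widths (for example, from your browser's developer tools or an audit export). The `oversized_images` function and its 2x threshold, which leaves headroom for high-density screens, are illustrative assumptions rather than an official rule.

```python
def oversized_images(
    images: list[tuple[str, int, int]], max_ratio: float = 2.0
) -> list[tuple[str, float]]:
    """Flag images whose intrinsic width exceeds `max_ratio` times their displayed width.

    `images` holds (src, intrinsic_width_px, displayed_width_px) tuples.
    """
    flagged = []
    for src, intrinsic, displayed in images:
        if displayed <= 0:  # hidden or not rendered; nothing to compare against
            continue
        ratio = intrinsic / displayed
        if ratio > max_ratio:
            flagged.append((src, round(ratio, 1)))
    return flagged


# Hypothetical audit data
images = [("hero.jpg", 4000, 1200), ("logo.png", 400, 200), ("team.jpg", 3000, 600)]
print(oversized_images(images))
# [('hero.jpg', 3.3), ('team.jpg', 5.0)]
```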

3 Common Crawl Depth Issues and Solutions

1. Excessive Crawl Depth

Excessive crawl depth typically occurs when important pages are more than four clicks away from the homepage. This can lead to several issues such as poor user experience, reduced crawl frequency, and decreased link equity.

How to Fix it:

- Make sure each page on your website falls within a category or subcategory

- Create content hubs or pillar pages that include links to related content

- Implement a “related articles” section on blog posts

- Optimize your navigation menus to ensure all categories are clearly defined
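To see how a content hub reduces crawl depth, the Python sketch below computes click depth with a breadth-first search and flags pages more than four clicks from the homepage, the threshold used above. The site map and the `deep_pages` function are hypothetical: a guide buried at the end of a paginated blog archive sits five clicks deep until a hub page linked from the homepage points to it.

```python
from collections import deque


def deep_pages(links: dict[str, list[str]], homepage: str = "/", max_depth: int = 4) -> dict[str, int]:
    """Return {page: depth} for pages more than `max_depth` clicks from the homepage."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:  # breadth-first search: shortest click path to each page
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return {page: depth for page, depth in depths.items() if depth > max_depth}


# A chain of paginated archive pages (hypothetical URLs)
site = {
    "/": ["/blog"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/page-4"],
    "/blog/page-4": ["/blog/crawl-budget-guide"],
}
print(deep_pages(site))  # {'/blog/crawl-budget-guide': 5}

# Add a hypothetical hub page, linked from the homepage, that links to the guide
site_with_hub = {**site, "/": ["/blog", "/seo-hub"], "/seo-hub": ["/blog/crawl-budget-guide"]}
print(deep_pages(site_with_hub))  # {}
```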

2. Orphaned Pages

Orphaned pages have no internal links pointing to them. This means they are difficult for search engines to find and can’t be reached through normal navigation.

How to Fix it:

- Identify orphaned pages by running a site audit or using a crawler like Screaming Frog

- Add internal links to orphaned pages from high-authority pages, like category pages

- Consolidate similar pages and implement redirects from the orphaned URL

- Set up redirects for outdated or unnecessary pages
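A site audit tool will do this for you, but the underlying check is simple: compare a list of all the URLs you know about (for example, from your XML sitemap or CMS) with the URLs that internal links actually point to. This Python sketch assumes `links` comes from a crawl that starts at the homepage, so unreachable pages never appear as link sources. The `find_orphans` function and URLs are hypothetical.

```python
def find_orphans(known_urls: list[str], links: dict[str, list[str]], homepage: str = "/") -> list[str]:
    """Return known URLs that no crawled page links to (the homepage is exempt)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a self-link doesn't rescue an orphan
    }
    return sorted(set(known_urls) - linked_to - {homepage})


# Hypothetical inputs: URLs from the sitemap, links found by crawling from "/"
sitemap_urls = ["/", "/blog", "/blog/crawl-depth", "/blog/2019-recap", "/landing/old-promo"]
crawl = {
    "/": ["/blog"],
    "/blog": ["/", "/blog/crawl-depth"],
    "/blog/crawl-depth": ["/blog"],
}
print(find_orphans(sitemap_urls, crawl))
# ['/blog/2019-recap', '/landing/old-promo']
```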

3. Broken Links and Redirects

A broken link (also called a dead link) is a hyperlink that points to a non-existent page or resource. When a user clicks on a broken link, they typically see a 404 error page.

How to Fix it:

- Conduct a site audit to identify broken links

- Implement a redirect to guide users to the correct page

- Update the link URL if it is incorrect, or remove the link if it’s no longer needed

- For external broken links, replace the link with a similar source
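Redirects can also add hops: when an internal link points to a URL that redirects, sometimes through a chain of several redirects, it is usually better to update the link to point straight at the final destination. This Python sketch follows a redirect map (assumed to come from a crawl export) to its final URL and stops on loops or overly long chains. The `resolve_redirect` function, the 10-hop limit, and the URLs are hypothetical.

```python
def resolve_redirect(url: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow `redirects` (source URL -> target URL) from `url` to its final destination.

    Returns (final_url, hops). Raises ValueError on a redirect loop or a chain
    longer than `max_hops`.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        if hops > max_hops:
            raise ValueError("redirect chain too long")
        seen.add(url)
    return url, hops


# Hypothetical chain: an old URL redirects twice before reaching the live page
redirects = {"/old-guide": "/guide-2022", "/guide-2022": "/guide"}
print(resolve_redirect("/old-guide", redirects))  # ('/guide', 2)
```

Any internal link whose hop count is greater than zero is a candidate for updating to the final URL.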

Optimize Crawl Depth with Ongoing Maintenance

Managing crawl depth is an ongoing process. You should conduct regular site audits to identify crawl depth issues and correct them to ensure your content is discoverable by search engines. It’s important to regularly adapt your website structure and internal linking strategy as your website and business continue to grow.
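One way to make these audits comparable over time is to save each crawl's click depths and compare them. This Python sketch assumes two `{url: depth}` snapshots (for example, exported from successive site audits). The `depth_regressions` function, the four-click threshold, and the URLs are hypothetical.

```python
def depth_regressions(
    previous: dict[str, int], current: dict[str, int], max_depth: int = 4
) -> tuple[dict[str, tuple[int, int]], list[str], list[str]]:
    """Compare two {url: click_depth} snapshots from successive audits.

    Returns (pages that got deeper, pages now deeper than max_depth,
    pages missing from the latest crawl, which may be new orphans).
    """
    deeper = {
        url: (previous[url], depth)
        for url, depth in current.items()
        if url in previous and depth > previous[url]
    }
    too_deep = sorted(url for url, depth in current.items() if depth > max_depth)
    missing = sorted(set(previous) - set(current))
    return deeper, too_deep, missing


# Hypothetical snapshots from two audits
jan = {"/": 0, "/blog": 1, "/blog/crawl-depth": 2, "/services/seo": 2}
apr = {"/": 0, "/blog": 1, "/blog/crawl-depth": 5, "/blog/new-post": 3}
deeper, too_deep, missing = depth_regressions(jan, apr)
print(deeper)    # {'/blog/crawl-depth': (2, 5)}
print(too_deep)  # ['/blog/crawl-depth']
print(missing)   # ['/services/seo']
```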

As you add more content and categories to your website, continue to implement these practices to keep your site in good health. If you need help optimizing your website for crawl depth, our SEO growth experts can help. Talk to us.