In the vast landscape of the digital world, search engines serve as the navigational compass guiding us through the labyrinth of online content. Among the multitude of search engines, one stands tall as the dominant force, shaping the very foundations of our online experiences – Google. With billions of searches conducted daily, understanding the intricate workings of these digital gatekeepers has become paramount for anyone seeking to thrive in the digital realm.

This article delves deep into the heart of search engines, with Google as our primary focus, owing to its towering presence in the online arena. As we navigate the SEO terrain in 2024, we’ll uncover the dozens of factors that influence search engine rankings, explore the technical optimization that underpins these digital giants, and decipher the ever-evolving algorithms that drive our quest for relevant, high-quality information.

In this article, our emphasis will be squarely on Google as the primary search engine of focus. The rationale behind this choice lies in Google’s commanding presence within the search engine landscape. With a staggering 91.43% share of the search engine market, Google is the preeminent platform for online searches. Its widespread adoption means that it has the potential to direct a significantly larger volume of traffic compared to other search engines. In essence, Google’s prominence as the preferred search engine for most users makes it the natural centerpiece of our discussion.

Join us on this journey as we unravel the intricacies of search engines, shedding light on their role in achieving business objectives and driving growth. Along the way, we’ll delve into algorithm updates and marketing strategies that can lift your online content to new heights in the digital domain.

Part 1: Search engine basics: What it is & how it works

Part 2: How do search engines rank pages in 2024?

Part 3: Diving deeper into search engines: Crawling & indexing

Part 1: Search engine basics: What it is & how it works

What are search engine algorithms and their goals?

Google’s search engine algorithms are complex rules and processes that determine how it ranks and displays web pages in its search results. These algorithms are designed to help users find the most relevant, high-quality information for their search queries. Google uses many algorithms to accomplish this goal. The most famous is PageRank, which scores pages by the number and quality of links pointing to them and is named after both the term “web page” and Google co-founder Larry Page.
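To make the link-based idea behind PageRank concrete, here is a minimal power-iteration sketch. It uses the damping factor of 0.85 from the original PageRank paper and a small hypothetical four-page link graph. It illustrates the classic formula only, not Google’s current ranking, which combines many more signals and is not public.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic PageRank computed by power iteration.

    `links` maps each page to the pages it links to (a hypothetical graph).
    Pages with no outgoing links spread their score evenly over all pages.
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page receives the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page splits its score evenly among the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page: distribute its score uniformly.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical link graph: most pages link back to "home".
graph = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],  # links out, but nothing links to it
}
scores = pagerank(graph)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page:7s} {score:.3f}")
```

A page’s score grows with the number and scores of the pages linking to it, so the heavily linked “home” page ends up on top while “orphan”, which nothing links to, keeps only the baseline random-jump share.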

The primary goals of Google’s search engine algorithms are as follows:

- Relevance: Google aims to provide search results relevant to the user’s query. The algorithms assess web pages based on keyword usage, content quality, and user engagement to determine which pages will most likely answer the user’s questions or meet their needs.

- Quality: Google strongly emphasizes the quality of the content it displays in search results. High-quality content is more likely to rank well in Google’s search results, while low-quality or spammy content is penalized. Google uses algorithms like Panda and Penguin to identify and filter out low-quality content and spam.

- Authority: Google values websites that are authoritative and trustworthy. Websites with a strong online reputation, backlinks from reputable sources, and high-quality content are more likely to rank well. Google’s algorithms assess the authority of a website to ensure that users are directed to reliable sources of information.

- User experience: Google wants users to have a positive experience, so its algorithms consider factors such as page load speed and mobile-friendliness. Websites that offer a better user experience are more likely to rank higher in search results.

- Freshness: Google aims to provide up-to-date information to users. Some search queries require the most recent information, so Google uses algorithms like QDF (Query Deserves Freshness) to prioritize fresh content for certain queries.

- Personalization: Google also considers the user’s search history and preferences to personalize search results. This helps ensure that users receive results relevant to their interests and needs.

- Diversity: Google’s algorithms strive to provide a diverse range of results. They aim to avoid showing multiple pages from the same website or similar content in the top search results to provide users with various perspectives and sources.
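One simple way to picture the diversity goal is “host crowding”: after pages are scored, cap how many results any single site may contribute to the top of the list. The sketch below is a hypothetical illustration; the cap of two results per host, the size of the top list, and the sample URLs are assumptions, not Google’s actual rule.

```python
from urllib.parse import urlsplit


def diversify(ranked_urls: list[str], max_per_host: int = 2,
              top_n: int = 10) -> list[str]:
    """Keep ranking order but allow at most `max_per_host` URLs per host
    in the first `top_n` results (hypothetical limits).

    Results from crowded hosts are moved after the diversified top list
    instead of being dropped.
    """
    counts: dict[str, int] = {}
    top: list[str] = []
    overflow: list[str] = []
    for url in ranked_urls:
        host = urlsplit(url).hostname or ""
        if len(top) < top_n and counts.get(host, 0) < max_per_host:
            top.append(url)
            counts[host] = counts.get(host, 0) + 1
        else:
            overflow.append(url)
    return top + overflow


# Hypothetical results, already sorted by relevance.
results = [
    "https://a.example/1", "https://a.example/2", "https://a.example/3",
    "https://b.example/1", "https://c.example/1",
]
print(diversify(results, max_per_host=2, top_n=4))
```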

Google’s search engine algorithms are designed to deliver relevant, high-quality, and authoritative content to users while prioritizing a positive user experience and personalization. These algorithms undergo continuous updates and improvements to adapt to changing user behavior and the evolving nature of the web.

How does a search engine work?

Google’s search engine operates in three fundamental stages: crawling to discover web content, indexing to understand and categorize that content, and serving search results based on complex algorithms prioritizing relevance and quality. Let’s explore this more in-depth:

- Crawling: Google’s search process begins with automated programs known as crawlers. These crawlers scour the internet, following links from known web pages to discover new or updated content. These pages’ web addresses or URLs are then added to a vast list for later examination.

- Indexing: After identifying new web pages through crawling, Google analyzes the content, including text, images, and video files, to understand the subject matter of each page. This analysis helps Google create a comprehensive database known as the Google index. This index is stored across numerous computers and serves as a reference for search queries.

- Serving search results: When a user enters a search query, Google’s algorithms come into play to determine the most relevant results. Several factors influence this process, including the user’s location, language, device type (desktop or mobile), and previous search history. Google does not accept payments to rank pages higher in search results; instead, ranking is based on algorithmic calculations to deliver the most useful and appropriate results for the user’s query.
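The indexing and serving stages can be illustrated with an inverted index, the classic data structure that maps each term to the pages containing it. This toy sketch assumes three already-crawled pages with made-up URLs and answers a query by returning the pages that contain every query term. Real ranking, covered in Part 2, is far more involved.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Indexing: map every term to the set of URLs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Serving: return URLs containing every query term (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set.intersection(*(index.get(t, set()) for t in terms))
    return sorted(matches)


# Hypothetical pages that a crawler has already fetched.
crawled = {
    "https://example.com/hammers": "Buy hammers at our hardware store",
    "https://example.com/paint": "Paint and brushes for every room",
    "https://example.com/hours": "Hardware store opening hours",
}
index = build_index(crawled)
print(search(index, "hardware store"))
```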

How does Google decide what search results you really want?

Google determines which search results you’re likely to want by tailoring them to your context and preferences. Here’s how it accomplishes this personalization:

- Location: Google uses your current location or the location settings on your device to customize search results. This is especially relevant for searches with local intent. For example, if you search for “hardware store,” Google will display results in or around your current location. It understands that you’re probably not interested in hardware stores halfway around the world and strives to provide relevant local options.

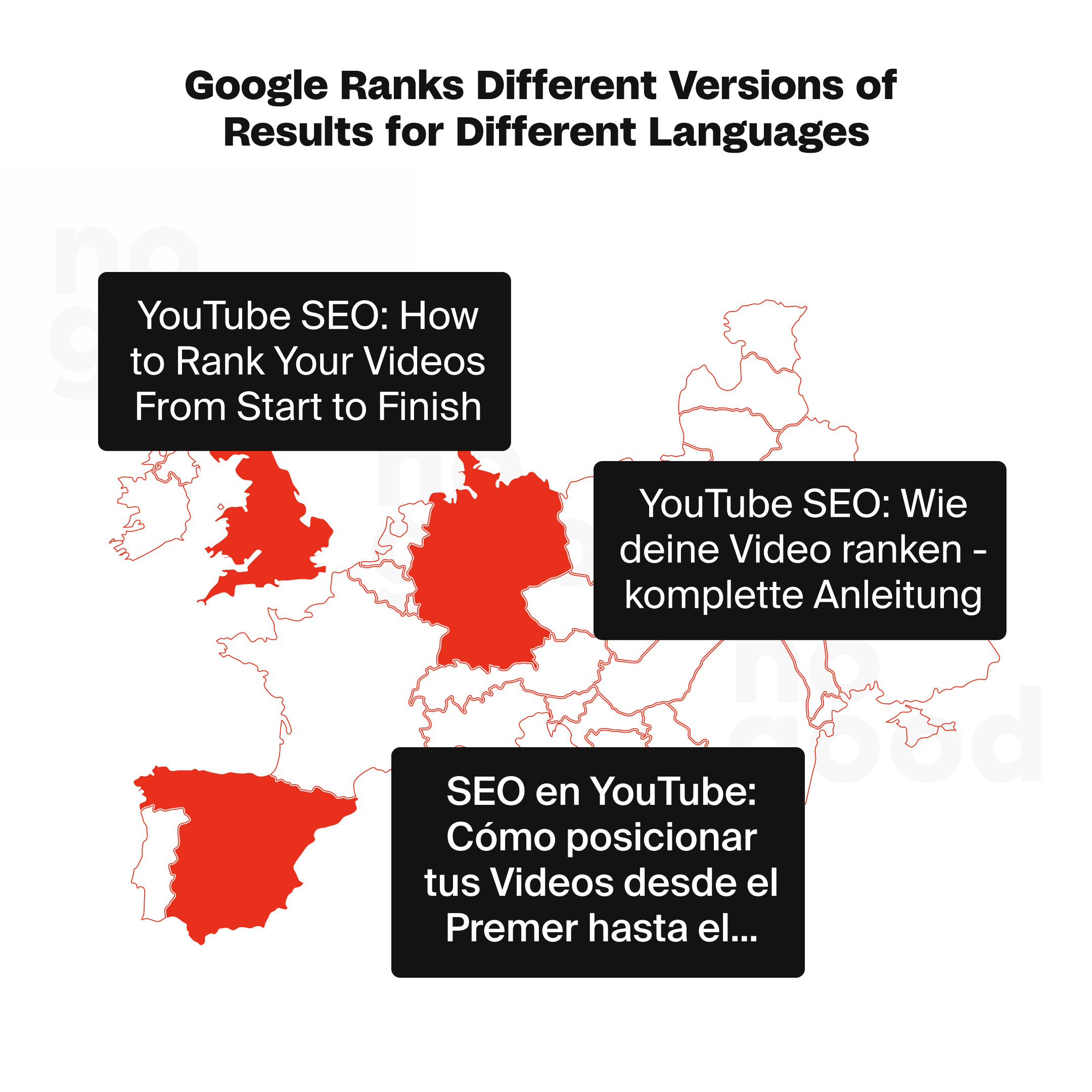

- Language: Google recognizes the language you use for your search queries and adjusts the results accordingly. It prioritizes displaying content in the language you prefer. For instance, if you’re a French-speaking user, Google will present search results primarily in French rather than English or other languages. It also ranks localized versions of content higher if available to ensure that users who speak different languages get the most relevant results.

- Search history: Google keeps track of your search history and online activities. This includes the websites you visit, the searches you perform, and the places you go. By doing so, Google aims to provide a more personalized search experience. It uses your search history to understand your interests, preferences, and the topics that matter to you. This information helps Google fine-tune its search results to align with your needs and tastes.

It’s important to note that while Google’s personalized search can be highly beneficial for users, it also raises privacy considerations. Google allows users to opt out of personalized search and data collection, although most users may choose to allow it to benefit from more customized and relevant search results.

In essence, Google’s ability to deliver search results you’re likely to want is achieved by leveraging location, language, and search history data to create a personalized and tailored search experience, making it more likely that the results you see align with your specific interests and context.

How do search engines generate revenue?

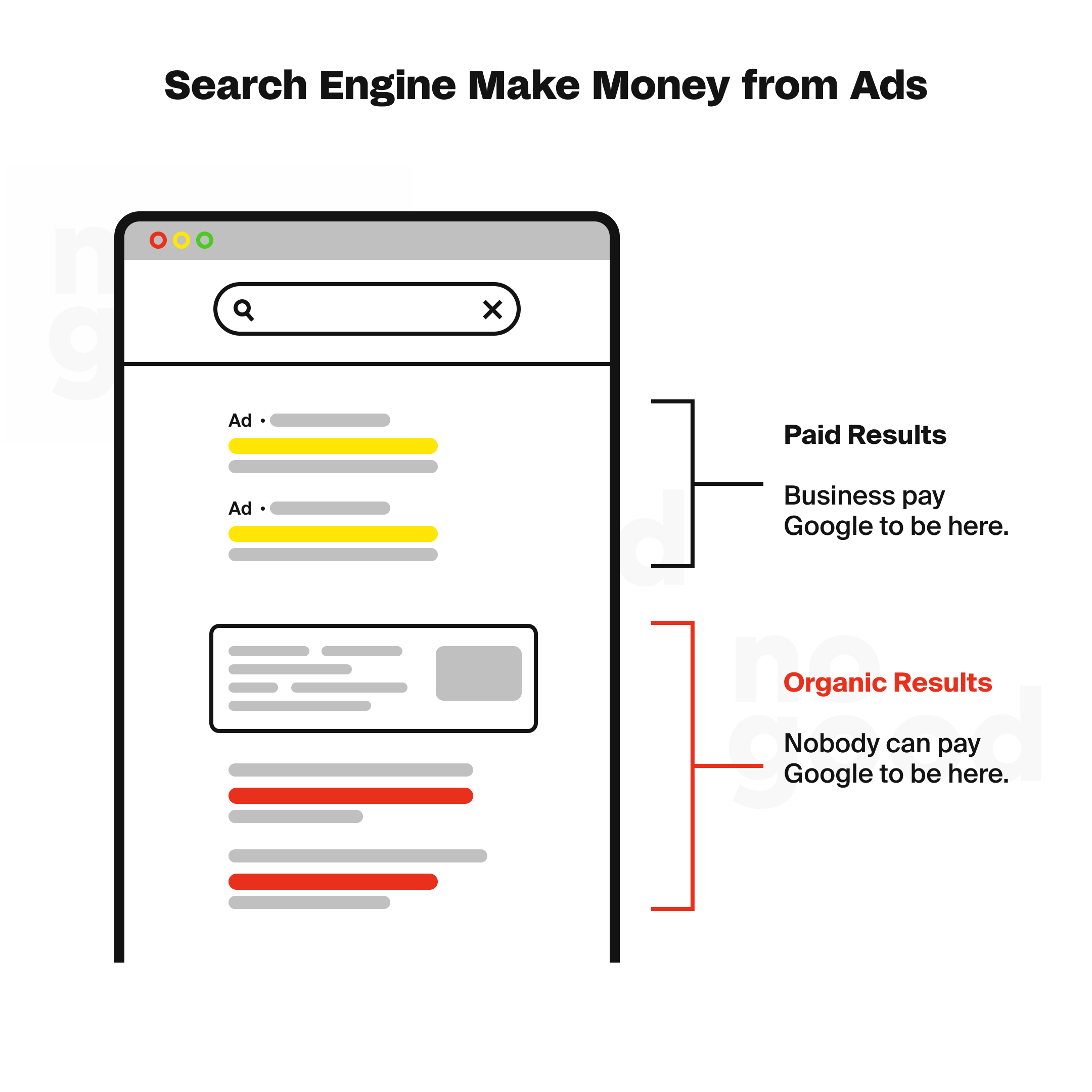

Search engines have two types of search results: organic search results and paid advertising. Here’s a breakdown of how they generate income:

- Organic results: These are the natural search results from the search engine’s index. Websites are ranked based on relevance to the user’s query and the search engine’s algorithms. Website owners cannot directly pay to have their pages appear in organic search results. Search engines like Google generate revenue from organic results indirectly, as the quality of organic search results encourages users to continue using their search engine. This, in turn, attracts more users and advertisers, which contributes to the search engine’s profitability.

- Paid results: Paid results are generated from advertisers who pay the search engine to display their ads alongside organic search results. Advertisers participate in pay-per-click (PPC) advertising campaigns, bidding on specific keywords or phrases relevant to their products or services. When a user clicks on one of these paid ads, the search engine charges the advertiser a fee. The amount of the fee depends on the competitiveness of the keyword and the bid made by the advertiser. This model is highly profitable for search engines because they earn revenue each time a user clicks on an ad.

- Auction model: Search engines often use an auction system to determine which ads appear in search results and in what order. Advertisers compete for ad placements based on keyword bids and quality scores (a measure of the ad’s relevance and quality). Because both factors count, the top placement usually goes to the ad with the best combination of bid and quality score, not necessarily the highest bid.
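The auction is commonly explained with a simplified textbook model: each ad’s rank is its maximum bid multiplied by its quality score, ads are ordered by that rank, and each advertiser pays only enough to beat the ad ranked below it (a generalized second-price auction). The sketch below follows that simplified model with hypothetical advertisers, quality scores, and a one-cent increment. Google’s actual Ad Rank uses additional factors, and its exact formula is not public.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    advertiser: str
    max_bid: float        # maximum cost per click the advertiser will pay
    quality_score: float  # hypothetical 1-10 relevance/quality score

    @property
    def ad_rank(self) -> float:
        # Simplified textbook model: rank = bid x quality score.
        return self.max_bid * self.quality_score


def run_auction(ads: list[Ad], increment: float = 0.01) -> list[tuple[str, float]]:
    """Order ads by ad rank and compute each ad's actual cost per click.

    An ad pays the minimum needed to beat the ad ranked below it, capped
    at its own max bid. The last ad pays a hypothetical minimum price
    equal to the increment.
    """
    ordered = sorted(ads, key=lambda ad: ad.ad_rank, reverse=True)
    results = []
    for i, ad in enumerate(ordered):
        if i + 1 < len(ordered):
            next_rank = ordered[i + 1].ad_rank
            price = next_rank / ad.quality_score + increment
        else:
            price = increment
        results.append((ad.advertiser, round(min(price, ad.max_bid), 2)))
    return results


ads = [
    Ad("A", max_bid=4.00, quality_score=4),
    Ad("B", max_bid=2.00, quality_score=10),
    Ad("C", max_bid=3.00, quality_score=5),
]
for advertiser, cpc in run_auction(ads):
    print(advertiser, cpc)
```

In this example, advertiser B wins the top spot with the lowest bid because its quality score is highest, and it pays less than its maximum bid.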

Part 2: How do search engines rank pages in 2024?

In 2024, popular search engines, including Google, use complex algorithms to rank pages based on various factors. These factors determine the relevance and quality of web pages in response to a user query. While not all search engine ranking factors are disclosed, several key ones are known to influence how search engines rank pages:

- Relevance: Relevance refers to how useful a search result is to the searcher. Google assesses relevance by looking at various factors. At a basic level, it examines whether a page contains the same keywords as the search query. Additionally, Google analyzes user interaction data to determine if searchers find the result useful. Pages that align well with user intent and deliver relevant content tend to rank higher.

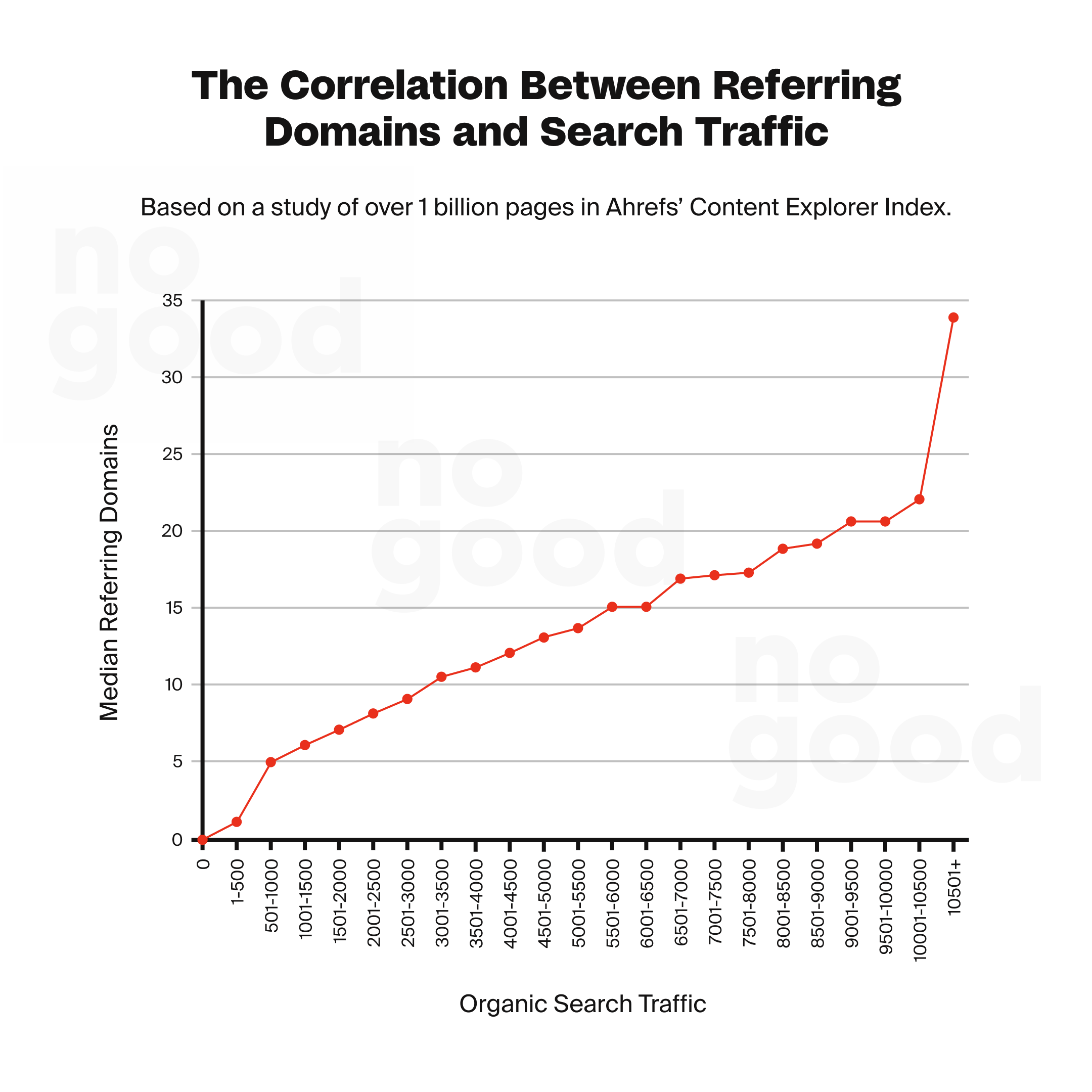

- Backlinks: Backlinks are links from one webpage to another. They are considered one of Google’s strongest ranking factors. High-quality backlinks from reputable and authoritative websites can significantly improve a page’s ranking. Quantity is important, but quality matters even more. Pages with a few high-quality backlinks often outrank those with many low-quality backlinks.

- Freshness: Freshness is a query-dependent ranking factor. It is more prominent in searches that require up-to-date information. For instance, queries like “latest international news” prioritize recently published content. Freshness helps ensure that users get the latest and most relevant information for their queries.

- Page speed: Page speed is a ranking factor for both desktop and mobile searches. However, it’s primarily a negative ranking factor, impacting slower-loading pages more than faster ones. Faster page loading times improve user experience, which is a key consideration for search engines.

- Mobile-friendliness: Mobile-friendliness has become a critical ranking factor, especially since Google’s shift to mobile-first indexing, which became the default for new websites in 2019. As mobile searches continue to rise, search engines prioritize websites that provide a smooth, responsive experience on mobile devices.
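At the basic keyword-matching level described under Relevance, a classic information-retrieval technique is TF-IDF: a page scores higher when it uses a query term often and that term is rare across the collection. The sketch below is a textbook TF-IDF scorer over hypothetical pages, shown only to illustrate keyword relevance. It is not how Google ranks pages; Google combines this kind of signal with backlinks, user-interaction data, and many other factors.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_scores(pages: dict[str, str], query: str) -> list[tuple[str, float]]:
    """Rank pages by the summed TF-IDF weight of the query terms."""
    docs = {url: Counter(tokenize(text)) for url, text in pages.items()}
    n_docs = len(docs)
    scores: dict[str, float] = {}
    for url, counts in docs.items():
        total_terms = sum(counts.values()) or 1
        score = 0.0
        for term in tokenize(query):
            df = sum(1 for c in docs.values() if term in c)  # document frequency
            if df == 0:
                continue
            tf = counts[term] / total_terms   # how often this page uses the term
            idf = math.log(n_docs / df)       # how rare the term is overall
            score += tf * idf
        scores[url] = score
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical pages.
pages = {
    "/running-shoes-guide": "running shoes guide: how to choose running shoes",
    "/shoe-store": "shoes boots sandals and more shoes",
    "/marathon-news": "marathon running news and race results",
}
for url, score in tfidf_scores(pages, "running shoes"):
    print(f"{score:.3f}  {url}")
```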

It’s worth noting that search engines combine these and many other factors to determine the order in which pages appear in search results. Additionally, search algorithms are continually evolving to provide users with more accurate and relevant results.

While the specific details of search algorithms are closely guarded, understanding these key factors can help website owners and marketers optimize their content and website performance to improve their rankings in search engine results pages.

Part 3: Diving deeper into search engines: Crawling & indexing

1. How do search engines go about crawling websites?

Search engines employ a multi-step process to crawl websites, discover web pages, and determine which pages to include in their index. Here’s how search engines, like Google, go about crawling websites:

- URL discovery: The initial phase involves finding out which web pages exist on the internet. Because there is no central registry of all web pages, search engines must constantly look for new and updated pages to add to their list of known pages. This process is known as “URL discovery,” and it relies on several methods:

- Prior visits: Some pages are already known to the search engine because they have been previously visited and indexed.

- Following links: Search engines discover new pages by following links from known pages. For instance, if a category page on a website links to a new blog post, the search engine will find the post by following the link.

- Sitemaps: Website owners can submit a list of pages (known as a sitemap) to search engines, which helps the search engine identify the pages to crawl.

- Crawling: Once a search engine discovers a page’s URL, it may visit and “crawl” the page to gather information about its content. Search engines use a network of computers to crawl billions of web pages. Crawling is performed by a program called a “crawler” or “spider”; Google’s crawler is called Googlebot. Googlebot uses an algorithmic process to decide which websites to crawl, how often to crawl them, and how many pages to fetch from each site.

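- Crawl rate: Google’s crawlers are programmed to avoid overloading websites. The crawl rate depends on various factors, chiefly how quickly and reliably the site responds; Googlebot slows down when a server responds slowly or returns errors. (Search Console’s manual crawl-rate setting was retired in early 2024.)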

- Exclusion: Not all pages discovered are crawled. Some pages may be excluded from crawling by the site owner, while others may not be accessible without logging in.

- Rendering: During the crawling process, Google renders the page and executes any JavaScript it encounters. This rendering process is akin to how a web browser displays web pages when you visit them. Rendering is crucial because many websites rely on JavaScript to load content. Without rendering, Google might not see certain content on the page.

- Access issues: Crawling depends on whether Google’s crawlers can access the website. Common issues that can hinder Googlebot’s access to sites include:

- Server problems: If the web server handling the site experiences issues or is slow to respond, it can affect crawling.

- Network issues: Connectivity problems or network issues can also impede Googlebot’s ability to crawl a website.

- Robots.txt rules: Website owners can specify rules in a “robots.txt” file that instruct search engines on which parts of the site to crawl and which to exclude. If a page is disallowed in the robots.txt file, Googlebot won’t crawl it.

In essence, search engines like Google use a combination of automated crawling and discovery mechanisms to compile a comprehensive index of web pages. The process is based on algorithms, access permissions, and adherence to guidelines set by website owners, ensuring that search engines can provide accurate and up-to-date search results to users.
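The discovery methods and access rules above can be combined into a small crawl loop. This is a minimal sketch, assuming a hypothetical in-memory “web” (a dict mapping each URL to the URLs it links to) instead of real HTTP requests. It uses Python’s standard urllib.robotparser to honor robots.txt, seeds the frontier from previously known URLs and a sitemap, and discovers new URLs by following links.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical site: each URL maps to the URLs it links to.
FAKE_WEB = {
    "https://example.com/": ["https://example.com/blog/", "https://example.com/admin/"],
    "https://example.com/blog/": ["https://example.com/blog/new-post"],
    "https://example.com/blog/new-post": ["https://example.com/"],
    "https://example.com/admin/": ["https://example.com/admin/settings"],
    "https://example.com/admin/settings": [],
    "https://example.com/landing": [],  # only listed in the sitemap
}

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def crawl(known_urls: list[str], sitemap_urls: list[str], robots_txt: str,
          max_pages: int = 100) -> tuple[list[str], list[str]]:
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())

    # Seed the frontier with prior visits and sitemap entries.
    frontier = deque(known_urls + sitemap_urls)
    seen = set(frontier)
    crawled: list[str] = []
    blocked: list[str] = []
    while frontier and len(crawled) < max_pages:
        url = frontier.popleft()
        if not robots.can_fetch("*", url):
            blocked.append(url)  # excluded by robots.txt, never fetched
            continue
        crawled.append(url)
        # Stand-in for fetching the page and extracting its links.
        for link in FAKE_WEB.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return crawled, blocked


crawled, blocked = crawl(
    known_urls=["https://example.com/"],
    sitemap_urls=["https://example.com/landing"],
    robots_txt=ROBOTS_TXT,
)
print("crawled:", crawled)
print("blocked:", blocked)
```

Because the blocked /admin/ page is never fetched, the crawler never sees its links, so /admin/settings is not discovered at all.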

Telling search engines how to crawl your site

To instruct search engines on how to crawl your site and control which parts of it they request, you can use the “robots.txt” file. This file, located in the root directory of your website (e.g., yourdomain.com/robots.txt), provides directives to search engines regarding which parts of your site should be crawled and which should be avoided.

What is Robots.txt, and how do search engines utilize it?

Robots.txt is a simple text file on a website that tells search engine crawlers which parts of the site they are allowed to crawl and which parts they should avoid. Search engines, like Google, read it before crawling to decide which URLs to request. Note that robots.txt controls crawling, not indexing: a disallowed URL can still appear in search results (usually without a description) if other pages link to it, so use a noindex directive, on a page crawlers can still access, when you need to keep a page out of the index. Robots.txt is a way for website owners to control how search engine crawlers interact with their site.

Here’s how to use the “robots.txt” file to guide search engine crawlers:

- Create or edit the Robots.txt file: To get started, you need to create or edit the “robots.txt” file for your website. This can typically be done using a text editor, and the file should be placed in your website’s root directory.

- Specify user-agent: In the “robots.txt” file, you can specify which search engine user-agents your directives apply to. For example, you can target Googlebot specifically or use wildcard symbols like ‘*’ to address all search engine crawlers.

- Set disallow directives: To prevent search engines from crawling specific directories or pages on your site, use the “Disallow” directive followed by the path you want to block.
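Putting those three steps together, a robots.txt file might look like the sample below, which blocks all crawlers from a hypothetical /admin/ directory and additionally blocks Googlebot from a hypothetical /internal-search/ path. Python’s standard urllib.robotparser module can then check how a rule-following crawler would interpret the file before you deploy it.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt for a hypothetical site. A crawler obeys only the most
# specific group that matches its user-agent, so the Googlebot group repeats
# the /admin/ rule instead of inheriting it from the "*" group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/
Disallow: /internal-search/

User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://www.example.com/blog/post"),
    ("Googlebot", "https://www.example.com/internal-search/?q=shoes"),
    ("Googlebot", "https://www.example.com/admin/login"),
    ("SomeOtherBot", "https://www.example.com/internal-search/?q=shoes"),
    ("SomeOtherBot", "https://www.example.com/admin/login"),
]
for agent, url in checks:
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent:12s} {verdict:8s} {url}")
```

Python’s parser does simple prefix matching and does not support the * and $ path wildcards that Googlebot understands, so treat this as a quick sanity check rather than a definitive test of Googlebot’s behavior.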

Remember that robots.txt directives are requests, not enforcement: reputable search engines such as Google follow them, but other crawlers, including malicious bots, may ignore them. Because the file is publicly readable, don’t rely on it to hide private content. Also be cautious when using the “Disallow” directive, as blocking essential pages can reduce your site’s visibility in search results. Always test and validate your robots.txt file to strike the right balance between what crawlers can reach and what you want to keep out of search.

What exactly is meant by “crawl budget,” and how do you optimize for it?

Crawl budget refers to the number of URLs a search engine crawler, such as Googlebot, can and wants to crawl on your website within a given period. Optimizing for crawl budget matters most for large sites, because it ensures that crawlers focus their efforts on your site’s most important and relevant pages rather than wasting time on unimportant ones.

Here’s how you can optimize for crawl budget:

- Prioritize important pages: Identify the critical pages on your website that you want search engines to crawl and index. These may include your homepage, product pages, blog posts, and other content that provides value to your users.

- Block unimportant pages from crawling: Use the “robots.txt” file to disallow search engine crawlers from accessing unimportant or non-essential parts of your site. For example, you might block crawlers from private login or administration pages.

- Use canonical and noindex tags: Implement canonical tags to specify the preferred version of duplicate content. Additionally, use the “noindex” meta tag to instruct search engines not to index pages you don’t want to appear in search results.

- Monitor crawl behavior: Regularly monitor your website’s crawl behavior using tools like Google Search Console. This allows you to see which pages are being crawled and indexed and which are not. You can identify and address any issues that may be impacting crawl efficiency.

- Improve site speed: Faster-loading pages are more likely to be crawled efficiently. Optimize your website’s speed and performance to ensure that search engine crawlers can access and crawl your pages quickly.

- Quality content: Focus on creating high-quality, valuable content. Search engines tend to allocate more crawl budget to sites that consistently provide relevant and engaging content.

- Mobile-friendly design: Ensure that your website is mobile-friendly. With Google’s mobile-first indexing, having a responsive design is crucial for crawl budget optimization.

- Sitemap optimization: Submit a sitemap of your website to search engines. A well-structured sitemap can help search engines understand the organization of your site and prioritize crawling.

- Regular updates: Keep your website updated and free from technical issues that might hinder crawl efficiency. Broken links or server errors can disrupt the crawling process.

- Security: Ensure that your website is secure and protected against malicious bots. While robots.txt can control legitimate search engine crawlers, it may not deter malicious bots. Implement security measures to safeguard sensitive information.
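For the sitemap step, an XML sitemap is a list of URL entries, each with an optional last-modified date, in the sitemaps.org XML namespace. The sketch below builds one with Python’s standard xml.etree.ElementTree. The URLs and dates are hypothetical placeholders for your own important pages.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Return sitemap XML for (absolute URL, last-modified date) pairs."""
    ET.register_namespace("", SITEMAP_NS)  # emit xmlns="..." without a prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        # W3C date format, e.g. 2024-05-01
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical important pages; login and admin URLs are deliberately left out.
important_pages = [
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/products/blue-widget", "2024-04-18"),
    ("https://www.example.com/blog/crawl-budget-tips", "2024-04-02"),
]
print(build_sitemap(important_pages).decode("utf-8"))
```

Listing only the pages you want crawled keeps the sitemap aligned with your robots.txt rules, so you don’t send crawlers mixed signals.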

Optimizing for crawl budget involves managing how search engine crawlers interact with your website to ensure they focus on the most important pages. By prioritizing important content, disallowing access to unimportant areas, and using tags effectively, you can influence how search engines allocate their resources when crawling your site. Regular monitoring and maintenance are also essential for effective crawl budget optimization.

Setting URL parameters within Google Search Console (GSC)

The URL Parameters feature in GSC is a tool that allows website owners to tell Googlebot how to handle URLs with specific parameters. This feature is particularly useful for websites, especially e-commerce sites, that generate multiple versions of the same content by appending URL parameters. Note that Google retired this tool in April 2022, so the steps below describe how it worked before retirement; canonical tags and robots.txt rules remain available for managing parameterized URLs.

Here’s how to set URL parameters within Google Search Console:

- Access GSC: Sign in to your Google Search Console account, and select the property (website) for which you want to manage URL parameters.

- Navigate to URL Parameters: In the left-hand menu, under the “Index” section, click on “URL Parameters.”

- Add a Parameter: To instruct Google on how to handle a specific parameter, click on the “Add Parameter” button.

- Specify Parameter details:

- Parameter: Enter the parameter name (e.g., category, color, sessionid) that appears in your URLs.

- Does this parameter change page content seen by the user?: Select “Yes” if the parameter causes a visible change in the page content. Select “No” if it doesn’t affect the visible content.

- How does this parameter affect page content?: Choose one of the options that best describes how the parameter impacts the page content. Options include “Narrows” (refines results), “Specifies” (defines a specific item), “Sorts” (arranges content), and more.

- Crawl: This setting allows you to specify how Googlebot should crawl pages with this parameter.

- URLs: Here, you can decide whether you want Google to crawl all URLs with this parameter, only the representative URL, or none at all.

- Save changes: After specifying the parameter details and crawl preferences, click the “Save” or “Add” button to save your settings.

- Monitor and adjust: Google Search Console provides insights into how Googlebot is handling URLs with parameters based on your instructions. Regularly monitor the data and adjust your settings if needed.
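The distinction the tool asked for, between parameters that change page content and parameters that don’t, can also be applied on your own side, for example when generating canonical URLs or internal links. The sketch below assumes a hypothetical set of content-neutral parameters (a session ID and tracking tags) and strips them while keeping content-changing ones such as color. Which parameters belong in each group depends on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do NOT change what the user sees.
CONTENT_NEUTRAL_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign", "ref"}


def canonical_url(url: str) -> str:
    """Drop content-neutral parameters and sort the rest for a stable URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in CONTENT_NEUTRAL_PARAMS
    ]
    kept.sort()  # same parameters in a different order -> same URL
    # Lowercase the host and drop the #fragment, which servers never see.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path,
                       urlencode(kept), ""))


variants = [
    "https://shop.example.com/shirts?color=blue&sessionid=A1B2",
    "https://shop.example.com/shirts?utm_source=newsletter&color=blue",
    "https://SHOP.example.com/shirts?color=blue#reviews",
]
print({canonical_url(u) for u in variants})
```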

Are search engines able to find and crawl your important content?

Ensuring that search engines can find and crawl your important content is crucial for effective search engine optimization (SEO). Here are some key considerations and optimizations to help Googlebot discover your vital pages:

- Clear site navigation: Ensure that your website has clear, accessible navigation. Crawlers rely on links to move from page to page, so a page that no other page links to may go unnoticed by search engines.

- Mobile and desktop navigation: Ensure that the mobile and desktop versions of your website have consistent navigation. Different navigational structures for mobile and desktop can confuse search engines and hinder indexing.

- Information architecture: Organize and label your website’s content using intuitive information architecture. Well-structured websites are easier for both users and search engines to navigate, and users shouldn’t have to think hard to find what they’re looking for.

- XML sitemaps: Create an XML sitemap listing the URLs on your site. A sitemap helps search engines discover and index your content efficiently. Submit it through Google Search Console for better control.

- Crawl errors: Regularly monitor crawl and indexing errors in Google Search Console’s Page indexing report (the older “Crawl Errors” report has been retired). Promptly address server errors (5xx codes) and “not found” errors (4xx codes), and use 301 redirects to guide users and search engines from old URLs to new ones.

- 301 redirects: When you change the URL of a page, use a 301 redirect to tell search engines that the page has permanently moved to a new location. A 301 also passes link equity to the new URL and helps search engines find and index it. Minimize redirect chains, as too many hops can make it harder for Googlebot to reach your page; each old URL should redirect directly to its final destination.
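To find chains worth flattening, you can follow each old URL through your redirect rules until it reaches a final destination. The sketch below works on a hypothetical in-memory redirect map (old URL to new URL) rather than live HTTP requests. It reports each chain, flags loops and overly long chains, and computes a flattened map that points every old URL straight at its final target, which you would then implement as single 301 redirects.

```python
def resolve(redirects: dict[str, str], start: str,
            max_hops: int = 10) -> tuple[list[str], bool]:
    """Follow redirects from `start`.

    Returns the URLs visited and a flag that is True when the chain is
    broken: it loops back on itself or exceeds `max_hops`.
    """
    path = [start]
    seen = {start}
    while path[-1] in redirects:
        if len(path) > max_hops:
            return path, True  # too many hops
        nxt = redirects[path[-1]]
        path.append(nxt)
        if nxt in seen:
            return path, True  # redirect loop
        seen.add(nxt)
    return path, False


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Map every source URL directly to its final destination (broken chains skipped)."""
    flat = {}
    for source in redirects:
        path, broken = resolve(redirects, source)
        if not broken:
            flat[source] = path[-1]
    return flat


# Hypothetical redirect rules accumulated over several site migrations.
redirects = {
    "/old-page": "/2019-page",
    "/2019-page": "/2022-page",
    "/2022-page": "/current-page",
    "/a": "/b",
    "/b": "/a",
}
for source in redirects:
    path, broken = resolve(redirects, source)
    status = "BROKEN" if broken else f"{len(path) - 1} hop(s)"
    print(f"{source}: {' -> '.join(path)} [{status}]")
print(flatten(redirects))
```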

Optimizing for crawlability and indexability ensures that search engines can access, understand, and rank your important content in search results. Regularly review your website’s structure, navigation, and technical aspects to maintain a healthy and efficient indexing process.

2. How do search engines develop their indexes?

How does the process of search engine indexing work?

Every search engine follows its own procedure to build its search index. Here is a simplified outline of the method Google uses.

Indexing is the step that follows crawling. During indexing, search engines like Google aim to understand the content of a web page and determine its relevance to various search queries. Here’s how the indexing process works:

- Crawling precedes indexing: As discussed, before indexing, search engines crawl web pages to discover their existence and gather the content. Crawling is like visiting a webpage and downloading its data. Once a page is crawled, it’s ready for the indexing phase.

- Textual content analysis: During indexing, the search engine analyzes the web page’s textual content. This includes parsing and understanding the text within the page’s main content, headings, paragraphs, and other textual elements. Search engines use sophisticated algorithms to extract keywords and phrases that help identify the page’s topic and context.

- Key content tags and attributes: Search engines also analyze key content tags and attributes within the page’s HTML. These include the HTML title element, which provides a concise description of the page’s topic, and the alt attributes of images, which provide alternative text descriptions. These tags and attributes provide additional context for indexing.

- Duplicate content handling: Search engines determine whether the page is a duplicate of another page on the internet or if it has canonical versions. Canonicalization is the process of selecting the most representative page from a group of similar pages. The canonical page is the one that may be displayed in search results, while others in the group are considered alternate versions. The choice of the canonical page depends on various factors, such as content quality, language, country, and usability.

- Signal collection: Google collects various signals about the canonical page and its content during indexing. These signals include information about the page’s language, geographic relevance, usability, and more. These signals may be used in the subsequent stage to generate search results for user queries.

- Storage in Google index: The information gathered about the canonical page and its cluster (the group of similar pages) may be stored in the Google index, a massive database hosted on numerous computers. Indexing, however, is not guaranteed: not every page Google crawls will be indexed.

- Common indexing issues: Several factors can affect indexing, including:

- Low-quality content: Pages with low-quality or thin content may not be indexed as search engines prioritize high-quality, informative content.

- Robots meta rules: If a page’s robots meta tag disallows indexing (for example, with “noindex”), search engines that follow the directive will not index the page.

- Technical design: Complex website designs, poor site structure, or technical issues can make indexing difficult.
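The duplicate-handling step described above can be pictured as grouping pages whose main content is effectively identical and then choosing one representative per group. The sketch below is a deliberately simple stand-in: it clusters pages by a hash of their whitespace- and case-normalized text and picks the canonical using hypothetical preferences (HTTPS first, then the shortest URL). Google’s actual clustering also detects near-duplicates and weighs many more signals, including the rel=canonical hints site owners provide.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def pick_canonical(urls: list[str]) -> str:
    """Hypothetical preference: HTTPS over HTTP, then shortest, then alphabetical."""
    return min(urls, key=lambda u: (not u.startswith("https://"), len(u), u))


def cluster_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Return {canonical URL: sorted alternate URLs} for each duplicate cluster."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        clusters[content_fingerprint(text)].append(url)
    result = {}
    for urls in clusters.values():
        canonical = pick_canonical(urls)
        result[canonical] = sorted(u for u in urls if u != canonical)
    return result


# Hypothetical crawled pages: three copies of one product page, one unique page.
pages = {
    "http://example.com/shoes": "Red running shoes, size 42.",
    "https://example.com/shoes": "Red running shoes,  size 42.",
    "https://example.com/shoes?ref=footer": "red running shoes, size 42.",
    "https://example.com/boots": "Leather boots.",
}
print(cluster_duplicates(pages))
```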

In essence, search engine indexing is the process by which search engines analyze and categorize web page content. This analysis involves text, tags, attributes, and the determination of canonical pages. The information gathered during indexing is stored in a database, which serves relevant search results to users when they perform search queries. The indexing process plays a crucial role in making web content accessible and discoverable through search engines.

Do pages get removed from the index?

Yes, pages can indeed be removed from a search engine’s index. There are several reasons why this may occur, including:

- HTTP errors (4XX and 5XX): If a URL consistently returns “not found” errors (4XX) or server errors (5XX), it may be removed from the index. This can happen due to various reasons, including accidental removal (such as moving a page without setting up a proper 301 redirect) or intentional removal (deletion of the page with a deliberate 404 error to signal its removal from the index).

- Noindex Meta Tag: Website owners can add a “noindex” meta tag to their pages. This tag instructs the search engine not to include the page in its index. It’s a deliberate action taken by the website owner to prevent a specific page from appearing in search results.

- Manual penalties: If a webpage violates a search engine’s guidelines (Google’s Webmaster Guidelines, now called Google Search Essentials), it may receive a manual penalty that removes it from the index. This typically happens when a site uses spammy tactics or black-hat SEO techniques.

- Crawling restrictions: If a webpage is blocked from being crawled by search engine bots, either through the use of the robots.txt file or password protection, it may not be indexed. Crawling restrictions can prevent search engines from accessing and indexing the content of a page.

It’s important to note that search engines have specific tools and mechanisms to determine which pages should be included in or excluded from their indexes. Website owners can also request re-indexing of pages with tools like Google Search Console’s URL Inspection tool (which replaced the older “Fetch as Google” feature) to ensure that their content is properly indexed. However, search engines enforce strict guidelines to keep their results high-quality and relevant, and any attempt to manipulate the system through deceptive practices may result in pages being removed from the index.

How to tell search engines to index your website

To instruct search engines on indexing your website, you can use various methods and directives to communicate your preferences. The primary methods include Robots Meta Tags and the X-Robots-Tag in the HTTP header:

- Robots Meta Tags (used within the HTML pages):

- Index/noindex: The “index” directive (the default) tells search engines that the page may be included in their indexes. Conversely, “noindex” tells search engine crawlers that you want the page excluded from search results. Use “noindex” when you want a page to be accessible to visitors but not appear in search results.

- Follow/nofollow: “Follow,” which is also the default, tells search engines they may follow the links on the page and pass link equity to the linked URLs. “Nofollow” asks search engines not to follow those links or pass link equity through them. “Nofollow” is often combined with “noindex” when you want to keep a page out of the index and discourage crawling of its links.

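- Noarchive: The “noarchive” directive asks search engines not to store or show a cached copy of the page. This is useful, for instance, if your site has frequently changing prices and you don’t want searchers to see outdated pricing. Google retired its cached-page links in 2024, so this directive now matters mainly for other search engines.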

Example of a meta robots tag with “noindex, nofollow”:

<!DOCTYPE html>
<html>
  <head>
    <meta name="robots" content="noindex, nofollow" />
  </head>
  <body>…</body>
</html>

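Note: The name “robots” addresses all crawlers. To target a specific crawler, use its name instead, for example <meta name="googlebot" content="noindex" />, and add one meta tag per crawler when different crawlers need different instructions.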
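A crawler reading these tags has to parse the HTML, pick out <meta> elements named “robots” or named after itself, and split the content value on commas. Here is a minimal sketch of that process using Python’s built-in html.parser. The class and function names and the sample page are hypothetical, and merging generic and crawler-specific rules into one set is a simplification of how real search engines resolve them.

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Collect comma-separated values from named <meta> tags, keyed by name."""

    def __init__(self) -> None:
        super().__init__()
        self.values: dict[str, set[str]] = {}

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us;
        # self-closing <meta ... /> tags are routed here as well.
        if tag != "meta":
            return
        attributes = {key: (val or "") for key, val in attrs}
        name = attributes.get("name", "").strip().lower()
        content = attributes.get("content", "")
        if not name or not content:
            return
        rules = {part.strip().lower() for part in content.split(",") if part.strip()}
        self.values.setdefault(name, set()).update(rules)


def robots_directives(html: str, crawler: str) -> set[str]:
    """Return generic "robots" rules plus any rules addressed to `crawler`."""
    collector = MetaTagCollector()
    collector.feed(html)
    collector.close()
    generic = collector.values.get("robots", set())
    specific = collector.values.get(crawler.lower(), set())
    return generic | specific


# Hypothetical page: every crawler gets "noarchive", Googlebot also gets "noindex".
PAGE = """<!DOCTYPE html>
<html>
  <head>
    <meta name="robots" content="noarchive" />
    <meta name="googlebot" content="noindex" />
  </head>
  <body>...</body>
</html>"""

print(sorted(robots_directives(PAGE, "googlebot")))  # ['noarchive', 'noindex']
print(sorted(robots_directives(PAGE, "bingbot")))    # ['noarchive']
```

The attribute values here use straight quotes. Typographic (curly) quotes, which word processors often insert, are not HTML attribute delimiters, so a tag written with them would not be read as a robots tag.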

- X-Robots-Tag (used within the HTTP header):

- Because it is sent in the server’s HTTP response rather than in the page markup, the X-Robots-Tag is more flexible than meta tags and lets you apply directives at scale. You can use regular expressions in your server configuration, apply directives to non-HTML files such as PDFs and images, and noindex content sitewide. For example, you can exclude entire folders or specific file types, or apply more complex rules.

Example of using X-Robots-Tag to exclude certain files or folders:

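<LocationMatch "^/no-bake/">
  Header set X-Robots-Tag "noindex, nofollow"
</LocationMatch>

This Apache example, which requires mod_headers, keeps everything under the “no-bake” folder out of the index and tells crawlers not to follow its links. <Files> sections match file names only, not folder paths, so a folder rule needs <LocationMatch>, which belongs in the main server or virtual host configuration. On hosts where only .htaccess files are available, placing the line Header set X-Robots-Tag "noindex, nofollow" in a .htaccess file inside the no-bake folder has the same effect.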
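If your pages are served by an application rather than configured in Apache, the same idea can be expressed in code. Below is a minimal sketch of a Python WSGI middleware, assuming a WSGI-based app. The function names and the pattern (the no-bake folder from the example, plus PDF files to illustrate a file-type rule) are hypothetical choices for illustration.

```python
import re

# Hypothetical rule: everything under /no-bake/ plus any PDF file.
NOINDEX_PATTERN = re.compile(r"^/no-bake/|\.pdf$")


def add_x_robots_tag(app, pattern=NOINDEX_PATTERN, value="noindex, nofollow"):
    """Wrap a WSGI app so responses for matching paths carry an X-Robots-Tag header."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")

        def start_response_with_tag(status, headers, exc_info=None):
            if pattern.search(path):
                headers = list(headers) + [("X-Robots-Tag", value)]
            return start_response(status, headers, exc_info)

        return app(environ, start_response_with_tag)

    return wrapped


def demo_app(environ, start_response):
    """Tiny stand-in for a real site: every path returns plain text."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"hello"]


def response_headers(app, path):
    """Call a WSGI app directly (no server) and return its response headers."""
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured.update(headers)
        return lambda data: None  # the WSGI write() callable, unused here

    b"".join(app({"REQUEST_METHOD": "GET", "PATH_INFO": path}, start_response))
    return captured


site = add_x_robots_tag(demo_app)
print(response_headers(site, "/no-bake/cookies.html").get("X-Robots-Tag"))  # noindex, nofollow
print(response_headers(site, "/recipes/bread.html").get("X-Robots-Tag"))    # None
```

To check it against a live server, the wrapped app could be served locally with wsgiref.simple_server.make_server and inspected with a tool that shows response headers, such as curl -I.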

It’s essential to understand that these directives control indexing, not crawling. Search engines must crawl a page to see and obey its meta tags or X-Robots-Tag header, so don’t block a noindexed page in robots.txt: the crawler would never see the noindex directive. To keep crawlers from accessing certain pages at all, use other methods, such as password protection or the robots.txt file.
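The interaction between robots.txt and noindex can be summarized as a small decision function. This is a simplified model for illustration, not how any search engine is actually implemented: the function name and outcome labels are hypothetical, page_directives stands for whatever rules the page’s meta tag or X-Robots-Tag header would carry, and robots.txt matching uses Python’s basic urllib.robotparser.

```python
from urllib.robotparser import RobotFileParser


def indexing_outcome(robots_txt: str, url: str, user_agent: str,
                     page_directives: set[str]) -> str:
    """Classify a URL under a simplified model of crawling and indexing.

    Returns one of:
      "blocked-noindex-unseen": robots.txt blocks crawling, so a noindex
                                rule on the page is never read
      "blocked":                robots.txt blocks crawling
      "noindex":                the page is crawled and kept out of the index
      "indexable":              the page is crawled and may be indexed
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    # "none" is shorthand for "noindex, nofollow".
    wants_noindex = bool({"noindex", "none"} & page_directives)

    if not parser.can_fetch(user_agent, url):
        # The crawler never downloads the page, so it cannot see its directives.
        # The bare URL may still be indexed if other pages link to it.
        return "blocked-noindex-unseen" if wants_noindex else "blocked"

    return "noindex" if wants_noindex else "indexable"


# Hypothetical robots.txt that blocks an /old/ section for all crawlers.
ROBOTS_TXT = "User-agent: *\nDisallow: /old/\n"
print(indexing_outcome(ROBOTS_TXT, "https://example.com/old/page", "Googlebot", {"noindex"}))
# blocked-noindex-unseen
print(indexing_outcome(ROBOTS_TXT, "https://example.com/new/page", "Googlebot", {"noindex"}))
# noindex
```

The first case is a common trap: to get a page removed from the index, it has to stay crawlable so the noindex directive can be read.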

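Additionally, if you’re using WordPress, make sure the “Discourage search engines from indexing this site” option (Settings > Reading, under “Search engine visibility”) is unchecked. When it is checked, WordPress asks search engines not to index your site; older versions did this through the robots.txt file, while current versions add a noindex robots meta tag.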

Understanding these methods and directives can help you effectively manage how search engines crawl and index your website, ensuring that your important pages are discoverable by users.

Key takeaways

In 2024, understanding how search engines work is essential for developing a robust SEO strategy. Google, as the dominant search engine with more than 90% of the search market, plays a central role in the online landscape. Our exploration of search engines’ foundational principles, indexing processes, and content discovery has shed light on critical factors influencing your online content’s visibility and success.

Search engine algorithms, the linchpin of SEO, aim to deliver relevant, high-quality, and authoritative content to users. These algorithms consider various factors, including relevance, quality, authority, user experience, freshness, personalization, and diversity, to determine search results. By aligning your content with these factors, you can improve its chances of ranking higher in search results.

Content discovery and crawlability are fundamental to SEO success. Ensuring that search engines can find and crawl your important content is a prerequisite for effective optimization. Factors such as content hidden behind login or search forms, non-text content, and clear, consistent navigation on both mobile and desktop all influence how search engines discover your content. Using tools like XML sitemaps and monitoring crawl errors can help maintain optimal crawlability.

The indexing process, a pivotal step following crawling, involves analyzing textual content, content tags, and attributes to categorize web pages. Pages can be removed from the index due to HTTP errors, the noindex meta tag, manual penalties, or crawling restrictions. Managing these factors carefully helps keep your content eligible to appear in search results.

To tell search engines how to index your website, you can use directives in robots meta tags and the X-Robots-Tag HTTP header. These directives let you control whether pages are indexed, whether their links are followed, and whether cached copies are kept, giving you fine-grained control over your content’s visibility.

With this foundation, you can optimize your content for search engine algorithms, keep it crawlable and indexable, and use the appropriate directives to enhance its visibility, drive organic traffic, and achieve your digital marketing goals. In a landscape where billions of searches occur daily, mastering these aspects is critical for content creators, businesses, and SEO agencies seeking to thrive.