With AI use in marketing on the rise (43% of marketing organizations report experimenting with AI), the question “Will AI take over marketing?” is no longer an open one. The answer: it already has.

Marketers, I urge you to abandon the mindset of trying to persist despite AI (I’ve been there too, trust me) and instead adopt a more positive one: how can I use AI in ways that help me do my job?

We’re now collectively working through which AI platform is our favorite (I’m a ChatGPT and Gemini gal), and how to organize and communicate information effectively to get the result we’re looking for.

One of the first “lightbulb moments” I had was this: “talking” to AI requires a language of its own. It’s not the same as how I used to Google information, and it’s definitely not how I Slack my coworkers (what a world that would be). It’s a whole new beast.

Enter prompt engineering.

What Is Prompt Engineering?

Prompt engineering is the practice of carefully crafting queries, or prompts, for generative AI models in order to achieve a specific, desired result.

I’m willing to bet that you’ve felt the frustration of asking ChatGPT to do something for you and receiving a nonsensical or incorrect response in return. I’ve rage-quit a few ChatGPT conversations in my day; I feel your pain. It’s not only frustrating but a waste of time, which defeats the whole reason we learned how to use AI for marketing in the first place: efficiency.

A well-engineered prompt, on the other hand, is far more likely to produce the right result the first time around, sparing you the back-and-forth (and back-and-forth again) with the LLM. Lazy marketers, be warned: prompt engineering requires a little extra effort up front, but it usually pays off.

Why Prompt Engineering Matters for Marketers

Put yourself in the shoes of a marketer: let’s call her Daria (no relation). Daria has just completed a grueling SEO audit, resulting in a neat, tidy list of 24 keyword topics that she now has to build a 6-month strategy around. She has her content pillars, her primary and secondary keywords, search volumes, keyword difficulties, and the client’s audience personas on hand. That’s a lot of information; Daria swears she has Ahrefs burned onto the inside of her eyelids at this point.

Overcoming the lack of inspiration that follows analytical burnout is no joke, but Daria finds it in herself to open ChatGPT and get to prompt engineering. With the right prompts, Daria can turn all of this data into an SEO and content strategy with the reasoning behind it, plus some extra tidbits she’ll probably pass off to the Social and Search teams.

Anecdote aside, with solid prompt engineering and a few key data points, your beautiful prompts can be used to:

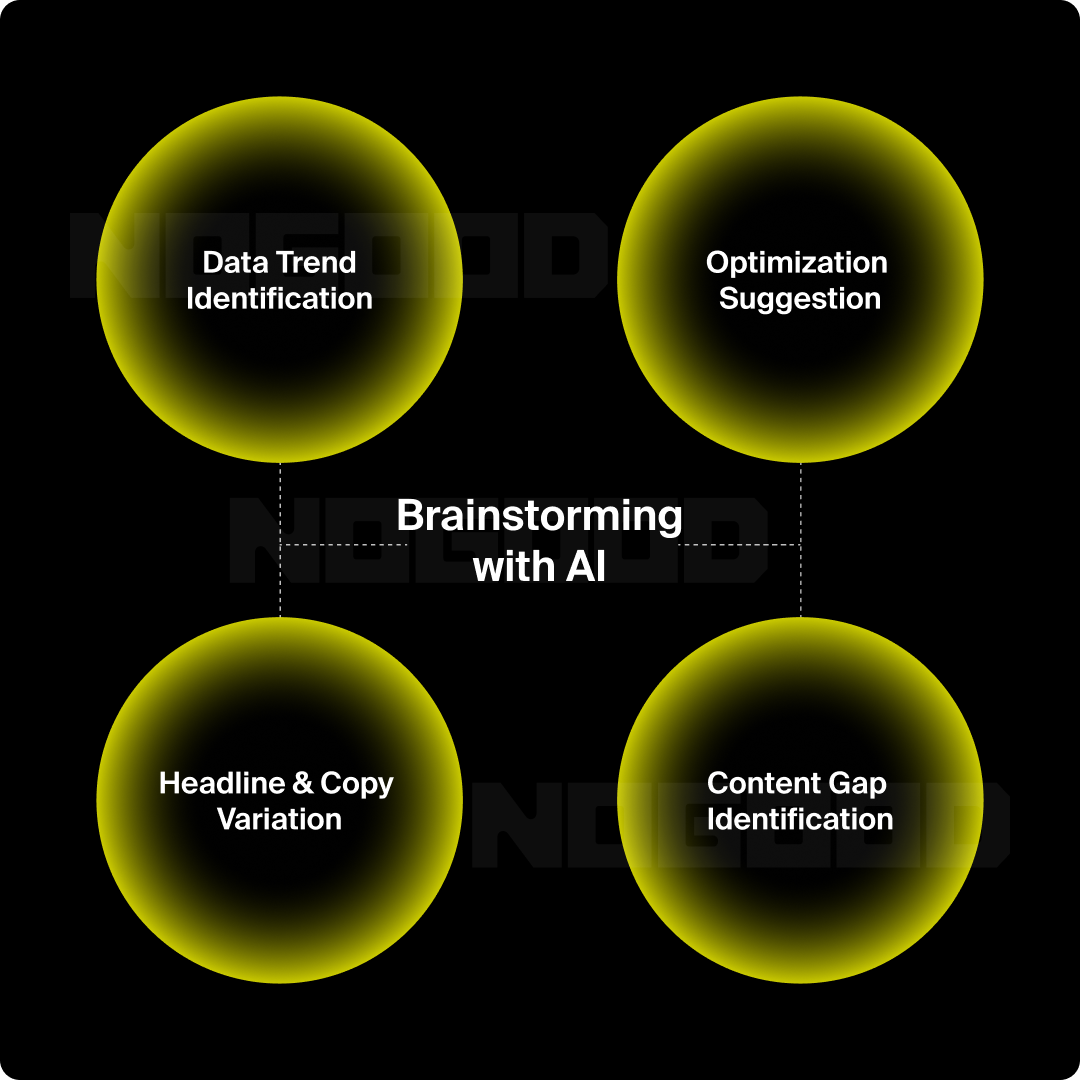

Brainstorm & Ideate

Whether it be an SEO strategy or a Google Ads campaign, ChatGPT can help:

- Spot trends in data that aren’t apparent to a regular human (e.g., multivariate trends)

- Give optimization suggestions based on historical data or seasonality

- Recommend headline variations or CTAs based on audience and intent

- Identify content gaps and slot in your target keywords to fill them

Pro Tip: Especially when real data is involved, fight the urge to be lazy and give the model nothing to start from. You’re setting yourself up for failure that way. Give it as much relevant context as you have.

Scale Efficiency

Without proper guidance, scaling with AI is significantly harder. Models given no direction tend to produce generic, “AI slop”-type content, and that won’t win for your client or their audience. Prompt engineering helps you maintain consistency in tone, subject matter, and creativity.

Plus, AI is faster than you (sorry, but it’s true). Know anyone who can generate 50+ variations of a tagline in under two minutes?

Create Brand-Safe Content

AI models aren’t automatically programmed to talk in your (or your client’s) brand voice. With proper prompt engineering, though, marketers can “train” the model to speak according to brand voice guidelines.

If you’re creating a significant amount of content for a client, it saves you a ton of time; you won’t have to repeatedly tell ChatGPT “not to say X” or “always spell Y this way”.

Pro Tip: Brand voice documents or presentations come in handy here. I’ve fed these to Gemini and ChatGPT before, and doing so significantly reduced the number of slip-ups.

Repurpose Content Effectively

Anyone who has ever worked at an agency knows this scenario well: a great piece of content is created by one team, but there’s a roadblock when it comes to repurposing it. Who’s going to turn this beautiful article into a social media post? Who’s going to create a newsletter that combines three topical pieces and send it out?

AI can, with the right prompt engineering.

Note: If you’ve read literally anything I’ve ever written before, you’ll know how big a proponent I am of the human touch. You can engineer the most efficient, most structured, clearest prompt out there, and there’s still a chance that a mistake will be made. You need to use your genius marketing brain to finalize the output, always.

How Does Prompt Engineering Work?

To understand what it takes to engineer a good prompt, you first need to understand how an LLM interprets prompts. It’s like learning the structure of a new language before you start putting together whole sentences.

How LLMs Interpret Prompts

Though it may seem like they do, language models don’t understand human language the way we understand each other. Rather, they draw on patterns learned from massive training datasets to predict the most likely next token (a unit of text, often a word or part of one), generating their answer one token at a time based on your prompt and everything they’ve written so far.
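To make “predict the next token” a little more concrete, here’s a deliberately tiny Python illustration. Treat it as a toy built on simplifying assumptions, not a description of how ChatGPT or Gemini actually work: it splits text into whole words instead of real tokens, looks only at the previous word, and learns from a made-up three-sentence corpus, whereas real LLMs use neural networks trained on enormous datasets and weigh your entire prompt. The core loop is the same idea, though: pick a likely next piece of text, append it, repeat.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count which word follows which (a toy stand-in for LLM training)."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Repeatedly append the predicted next word, one step at a time."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Made-up corpus, purely for illustration.
corpus = [
    "prompt engineering saves time",
    "prompt engineering improves output",
    "prompt engineering saves effort",
]
model = train_bigrams(corpus)
print(predict_next(model, "engineering"))  # saves
print(generate(model, "prompt"))  # prompt engineering saves time
```

Notice that the toy never “understands” anything; “saves” wins simply because it followed “engineering” most often. That’s why phrasing, structure, context, and content in your prompt shift the odds of what comes next.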

When you’re asking an LLM for a specific output, mind the phrasing, structure, context, and content of your prompt; they all matter:

- Phrasing: LLMs interpret language both literally and statistically; even the subtlest changes in word choice can lead to drastically different results. Choose your verbs and directives carefully, and write them according to the desired order of operations.

- Structure: Language models respond best to logical, well-ordered prompts, especially when the task at hand involves multiple steps or a complex output. Use clear sections, numbered steps, or bullet points to give your prompt that order.

- Context: Every new conversation with an LLM is essentially a clean slate. Apart from its training data (and any saved memory or custom instructions you’ve set up), the model knows nothing about your brand voice, audience, or goals unless you specifically state them.

- We’ll get into what to do when there’s simply too much context to fit into one prompt in the next section (I’ve been there, trust me).

- Content: Well-engineered complex prompts often include examples, data points, or reference materials that give the LLM more to work with. The general rule of thumb here is that great content in = great content out.

Types of LLM Prompts

Before I show you how to engineer prompts properly, there’s one last thing we need to go over: the types of LLM prompts. Yeah, you heard me right, that’s how deep we are into this; we have different prompt types.

- Zero-Shot Prompting: Asking an AI language model to complete a task without giving it any examples first; think giving ChatGPT a blog to proofread for spelling and grammar only.

- One-Shot Prompting: Giving an LLM one example of the output you want along with the task at hand, often in the same prompt; think “Here’s the Instagram caption we wrote for last week’s blog. Can you write one in the same style for this week’s post?”

- Few-Shot Prompting: Providing the language model with a few examples before asking it to perform an action. For example, “I am about to send you the scripts from three videos we filmed last week; can you confirm you understand the content, and then come up with an idea for another on the same topic?”

- Style Transfer Prompting: “Mini-training” the LLM (I don’t think that’s the right name for the concept, but I’m going to run with it): you start a new chat and feed the model any context it needs to complete the tasks that follow. Think uploading a brand voice style guide.

- Chain of Thought (CoT) Prompting: Prompting the LLM to show its reasoning or break down its process into several steps to achieve a more refined result; particularly helpful for complex or multi-step tasks.

- Pro Tip: For things like this, I like to put each “chain link” into its own prompt: send one component, confirm that the LLM understands, and then do the same for the rest.
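If you like keeping reusable templates, zero-, one-, and few-shot prompting boil down to how many examples you include before the real task. Below is a minimal Python sketch of that idea; `few_shot_prompt` is a hypothetical helper I made up, and the sample caption is a placeholder, not real client copy. Pass no examples for a zero-shot prompt, one for one-shot, or several for few-shot.

```python
def few_shot_prompt(instruction, examples, new_input):
    """Build a zero-, one-, or few-shot prompt.

    examples is a list of (input, output) pairs showing the result you want;
    an empty list produces a zero-shot prompt.
    """
    parts = [instruction]
    for number, (example_input, example_output) in enumerate(examples, start=1):
        parts.append(f"Example {number}\nInput: {example_input}\nOutput: {example_output}")
    # The real task goes last, with "Output:" left open for the model to complete.
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)


instruction = "Write an Instagram caption for the blog post described below."
examples = [
    # Placeholder example; swap in a caption your team actually published.
    ("Blog: 5 SEO myths, debunked",
     "Think you know SEO? These 5 myths say otherwise. Link in bio."),
]
zero_shot = few_shot_prompt(instruction, [], "Blog: A beginner's guide to prompt engineering")
one_shot = few_shot_prompt(instruction, examples, "Blog: A beginner's guide to prompt engineering")
print(one_shot)
```

Adding a second or third pair to `examples` turns the same call into a few-shot prompt; the only thing that changes is how much the model has to pattern-match against.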

There are a few more types of prompting, but they’re more abstract concepts than the five we reviewed above, and therefore not as relevant to the prompt engineering conversation at hand. I’m not the type to leave any stone unturned, though, so here we are:

- Instruction-Based Prompting: Giving a language model specific instructions to follow, providing context where needed. Zero-shot, one-shot, few-shot, and chain-of-thought prompting all fall into this category.

- Role-Based Prompting: Telling the LLM to play a certain role, like a “B2B SaaS executive,” and then asking it, for example, which features it cares about most in a software product.

- Hypothetical Prompting: Exploring a hypothetical scenario with an LLM by immersing it in the situation at hand. This one sometimes requires a good amount of context and “pre-training”, but is particularly useful for audience persona identification and analysis.

- Meta Prompting: Asking a model where your prompts can be improved to achieve a better output. This one feels a little Inception-y, but that doesn’t mean it’s not useful.

- Consistency Prompting: Enhancing the consistency of a model’s outputs with repetitive prompting, almost like practicing. If brand voice is Priority One for you, this could be big.

How to Engineer a Good AI Prompt: 5 Key Techniques for Marketers

Marketers who are down with the sickness (who want to produce better content faster, make their lives easier, etc.), listen up. Here are a few tips and tricks for engineering better prompts:

1. Provide Ample Context

I know it sounds like I’m beating a dead horse (imagine how my coworkers feel), but context is never a bad thing.

I remember when ChatGPT was first released and had no way of recognizing when it needed more context. You could ask it to do something with no context, and it would scramble to generate some incoherent nonsense. These days, it’s much more likely to ask you for more information when a prompt completely lacks context.

If you know you’re going to be writing content with the same tone, “mini-train” your model by feeding it the brand voice guidelines; if you know you’re only going to be using it to write meta descriptions for blog posts (I get lazy too, ok?), then contextualize the task by giving the model a “Meta Description Writer” role.

Pro tip for my fellow agency marketers: Use a separate thread or conversation for each client. If you want to get super granular, separate threads by both client and need (e.g., one thread for Client X’s blogs and another for Client X’s social captions). The more granular you get, the more likely the LLM is to stay on track.
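For the more technically inclined (or anyone working with an LLM through an API instead of a chat window), the one-thread-per-client idea translates to keeping a separate message history for each client and need. This is a minimal sketch assuming the common chat convention of `role`/`content` dictionaries; `ThreadStore` is a hypothetical helper, and nothing here connects to a real AI platform.

```python
class ThreadStore:
    """Hypothetical helper: one message history per (client, need) pair."""

    def __init__(self):
        self._threads = {}

    def add(self, client, need, role, content):
        # Each (client, need) key gets its own list, so Client X's blog thread
        # never sees the messages from Client X's social-caption thread.
        thread = self._threads.setdefault((client, need), [])
        thread.append({"role": role, "content": content})
        return thread

    def history(self, client, need):
        # Return a copy so callers can't accidentally edit the stored thread.
        return list(self._threads.get((client, need), []))


store = ThreadStore()
store.add("Client X", "blogs", "user",
          "Attached are the brand guidelines for Client X. Once you have confirmed "
          "you understand, I will provide you with the first content brief.")
store.add("Client X", "social captions", "user",
          "Write three Instagram captions for Client X's latest blog post.")
print(len(store.history("Client X", "blogs")))  # 1
```

When you send a request, you’d pass along only the history for that one client and need, which is the scripted equivalent of opening the right chat thread.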

Example Prompt

“Attached are the brand guidelines for Client X. For context, I am an SEO, and I need to write optimized content for this client using their brand voice. Once you have confirmed you understand, I will provide you with the first content brief.”

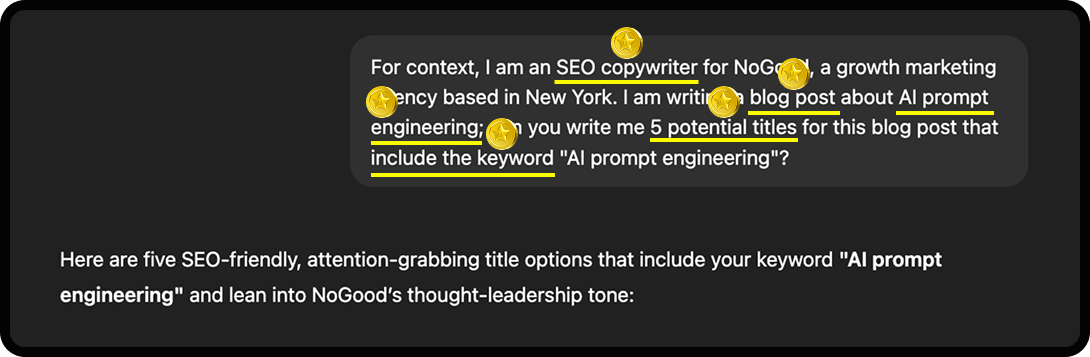

2. Be Specific About Intent

Tell the LLM exactly what the end goal of the prompt is. Being specific about intent is important, and specificity in general never hurts. I like to think of it like explaining your job to your best friend of 10 years (who has no earthly idea what you do): start from the very beginning.

Now, I’m not saying to overexplain (ChatGPT knows what SEO is), but providing context that might seem unnecessary or mundane helps more than you think.

Example Prompt

“I am an SEO working for a leading growth marketing agency. I am writing a blog post about prompt engineering. Can you write me 5 titles for this blog post? The SEO keyword I’m using is ‘ai prompt engineering,’ so please be sure to include it in the titles.”

3. Use Formatting to Guide Structure

You wouldn’t send your peers a wall of text to parse through when you need something; the same goes for AI prompts.

Separate information with bullet points, line breaks, and numbered lists to clarify and structure your prompt.

Example Prompt

“I am writing a blog post about AI prompt engineering. Within the blog post is a section about the different types of prompt engineering. Can you write me a 3-5 sentence description for each of them? I am including the following types:

- Zero-shot prompting

- One-shot prompting

- Few-shot prompting

- Style transfer

- Chain of thought prompting”
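If you reuse prompts like this across clients or projects, the same structure can be assembled in a script so the formatting never slips. A minimal Python sketch; `structured_prompt` is a hypothetical helper, not part of any tool, and it simply puts the framing first, then the request, then one bullet per item.

```python
def structured_prompt(intro, request, items):
    """Framing first, then the request, then one bullet per item instead of a wall of text."""
    bullets = "\n".join(f"- {item}" for item in items)
    return f"{intro}\n\n{request}\n{bullets}"


prompt = structured_prompt(
    "I am writing a blog post about AI prompt engineering. Within the blog post "
    "is a section about the different types of prompt engineering.",
    "Can you write me a 3-5 sentence description for each of them? "
    "I am including the following types:",
    ["Zero-shot prompting", "One-shot prompting", "Few-shot prompting",
     "Style transfer", "Chain of thought prompting"],
)
print(prompt)
```

Swapping in a different list of items gives you a new, equally well-structured prompt without retyping the framing.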

4. Ask for More Than You Need

One of the biggest upsides to using AI for marketing is being able to create tens, even hundreds of variations for headlines, social media captions, and other pieces of content. Maximize the usefulness of your assuredly well-engineered prompt by asking for more variations than you actually need. If you need five headlines, ask for 10; you’re bound to find five in there that you like.

In my experience, these variation prompts work best with smaller pieces of content. I wouldn’t recommend asking AI to write you 20 versions of an entire blog post (and who wants to sift through that, anyway?); stick to things that are about paragraph-length or less.

Example Prompt

“I am writing a blog post about prompt engineering. Can you write me 15 variations of an introduction that cover the following points:

- What prompt engineering is

- Why it’s relevant for marketers”
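Here’s how that could look in a script: a hypothetical Python helper that asks for twice as many variations as you need, plus a small parser that pulls the numbered items out of a reply so you can shortlist them. Both function names and the sample reply are made up for illustration, and the parser assumes the model actually follows the “numbered list” instruction.

```python
import re


def variation_prompt(piece, needed, multiplier=2):
    """Ask for more variations than you need: 10 when you need 5, by default."""
    requested = needed * multiplier
    return (f"Write {requested} variations of {piece}. "
            "Return them as a numbered list, one per line.")


def parse_numbered_list(reply):
    """Pull the items out of lines formatted like '1. ...' or '2) ...'."""
    items = []
    for line in reply.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items


prompt = variation_prompt("a headline for a blog post about prompt engineering", needed=5)
print(prompt)
# Placeholder reply; a real one would come back from ChatGPT, Gemini, etc.
reply = "Here are some options:\n1. Prompt Engineering 101\n2) Talk to AI Like a Pro"
print(parse_numbered_list(reply))  # ['Prompt Engineering 101', 'Talk to AI Like a Pro']
```

Lines that aren’t numbered (like the model’s chatty intro) are skipped, leaving you a clean list to pick your favorite five from.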

5. Iterate Prompts (& Outputs)

Another huge benefit of using AI for brainstorming and ideation is the opportunity to iterate infinitely; AI doesn’t experience creative burnout like we do.

Example Prompt

“I like the third headline variation you wrote. Can you use that headline idea and the voice guidelines I gave you to write me 3 similar ones?”

Tools & Resources for Prompt Engineering

As with most skills, truly mastering AI prompt engineering requires practice and an element of “feel”. To get you started on your AI prompt engineering journey, I thought I’d drop a couple of resources that might be helpful:

- Prompt libraries like Anthropic’s, FlowGPT, or PromptHero

- Courses and certifications on platforms like Coursera, HubSpot Academy, or Udemy

- Prompt testing sandboxes like PromptSandbox.io are a great way to practice engineering AI prompts in an isolated environment

Common Mistakes in AI Prompt Engineering

The possibilities may seem limitless, but you have to remember that AI isn’t all-powerful; it is a machine, at the end of the day. Here are a few things to watch out for when engineering AI prompts:

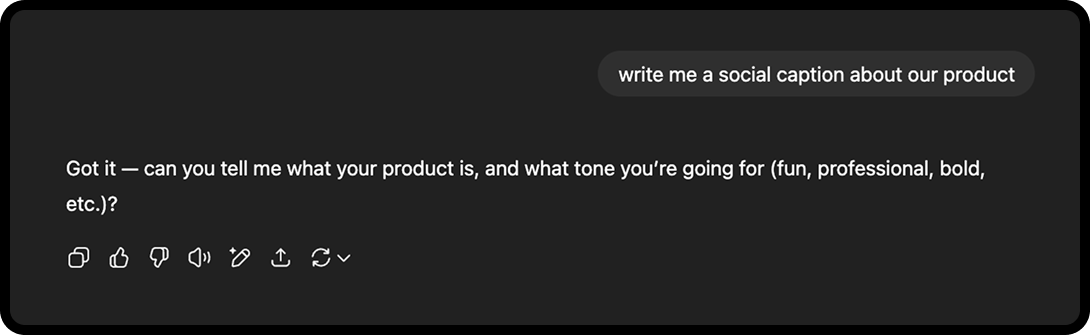

- Being Too Vague: Giving the AI the bare minimum direction and “seeing if it will be able to figure it out”. If you’re following the tips above, this shouldn’t be an issue. The main warning I’m trying to give here is not to be lazy.

- Overloading: Context exists on a spectrum; it’s possible to give too little, and too much. Giving too much context, especially when irrelevant or unrelated information bleeds in, can confuse the model.

- Forgetting Priority: Avoid scattered or incoherent prompts. When you’re writing your prompt, read it back and ask yourself: Does it make sense? If my coworker or manager were to send me this task, would I know what to do?

- Using Overly Complex Language: This doesn’t apply to industry terminology, obviously, but keep the instructional part of your prompts fairly simple in terms of language. AI interprets prompts extremely literally, so this wouldn’t be the time to dust off the old thesaurus.

- Not Setting Boundaries: You can specify the purpose of the output all you want, but don’t forget to also set specific boundaries for length (e.g., word count), structure, and format. An LLM doesn’t know the ideal word count for an Instagram caption, let alone the one your brand guidelines call for.
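Boundaries are also easy to double-check after the fact. The sketch below is a hypothetical Python helper that flags a draft that runs over a word limit or uses a phrase your brand guidelines ban; the limit, the banned phrase, and the caption are placeholders, not recommendations. State the same limits in your prompt, too, since a check like this only catches problems after the model has made them.

```python
def check_boundaries(text, max_words, banned_phrases=()):
    """Return a list of problems; an empty list means the draft stays inside your limits."""
    problems = []
    word_count = len(text.split())  # whitespace-separated words, a rough count
    if word_count > max_words:
        problems.append(f"{word_count} words (limit {max_words})")
    lowered = text.lower()
    for phrase in banned_phrases:
        # Case-insensitive substring match, e.g. for words your guidelines ban.
        if phrase.lower() in lowered:
            problems.append(f"uses banned phrase: {phrase!r}")
    return problems


caption = "Game-changing tips for better prompts. Link in bio!"
print(check_boundaries(caption, max_words=8, banned_phrases=["game-changing"]))
# ["uses banned phrase: 'game-changing'"]
print(check_boundaries(caption, max_words=5))  # ['8 words (limit 5)']
```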

Final Thoughts

Marketing is a wide domain with highly varied needs. Whether you’re using AI to draft a blog post outline, write a social media caption, create a video script, or refine the CSS for your website, make sure the prompts you give it set you up for a high-quality output.
