You’ve heard it from every angle of the SEO world in the past two years: SEO is changing. And yes, that’s true. But does that mean that the technical audits we used to meticulously run with the help of Screaming Frog and Ahrefs are a thing of the past? Not at all. We just need to adapt them for a new world of search.

In this technical SEO checklist, we’ll delve into all of the areas most important for smooth website performance. You might be surprised by how many pieces still remain relevant (or become even more relevant) for optimized performance in 2026.

If you’re a marketer, developer, or business owner, this guide will give you a framework to evaluate your site’s technical health and set a clear path toward long-term SEO and AEO success moving into the future.

The Core Pillars of Technical SEO

When most people think about SEO, they jump straight to keywords and content. But without a strong technical foundation, even the best-written pages won’t rank or, worse, won’t be discovered by search engines at all. Technical SEO is the scaffolding that supports every other SEO effort, from on-page optimization to link building.

SEO can be divided into four categories:

- Technical SEO

- On-Page SEO

- Off-Page SEO

- Local SEO

Technical SEO holds the key to all other forms of SEO. If your site has crawl errors, duplicate content issues, or sluggish load times, search engines may never fully index your pages, and no amount of keyword targeting will fix that.

Here are the most important pieces for technical SEO and AEO in 2026:

- Crawlability & Indexation: Making sure search engine bots can efficiently discover and prioritize your pages.

- Performance & Core Web Vitals: Delivering a fast, seamless experience that meets Google’s quality thresholds.

- Mobile-First & Multi-Device Optimization: Designing with mobile users in mind while ensuring parity across all devices.

- Site Architecture & Internal Linking: Creating a logical hierarchy that distributes link equity and reinforces topical relevance.

- Structured Data & Index Hygiene: Using schema and canonicalization to help search engines interpret your content correctly.

Each of these pillars feeds into visibility, rankings, and user satisfaction. In the sections ahead, we’ll break down exactly how to audit, optimize, and future-proof each one for lasting SEO success.

Crawlability & Indexation

If search engines can’t find your content, they can’t rank it. Crawlability and indexation are the bedrock of technical SEO: they ensure that bots from Google, Bing, and other engines can efficiently navigate your site, understand your structure, and decide which pages deserve to appear in search results.

Action Items Checklist

- Optimize Your XML Sitemap
  - Exclude duplicate, redirected, or noindex pages.
  - Submit to both Google Search Console and Bing Webmaster Tools.
  - Use sitemap segmentation (e.g., blogs vs. products) for large sites to spot issues faster.

- Refine Your Robots.txt
  - Block crawl traps (e.g., endless calendar pages, session IDs).
  - Allow critical assets like JavaScript and CSS to ensure proper rendering.
  - Test changes before deploying. Google retired its standalone robots.txt Tester, so use the robots.txt report in Search Console or a robots.txt parser in a staging check.

- Implement Canonicalization
  - Use canonical tags to consolidate duplicate or similar URLs.
  - Standardize on a preferred domain (HTTPS, www vs. non-www, trailing slash consistency).
  - Avoid conflicting signals between canonical tags, redirects, and sitemaps.

- Audit with Log File Analysis
  - Analyze server logs to see how bots actually crawl your site.
  - Identify patterns of crawl waste (e.g., bots spending time on unimportant parameters).
  - Prioritize your high-value pages and ensure they’re being crawled regularly.

- Fix Crawl Errors & Redirect Chains
  - Monitor Search Console’s Page Indexing report (formerly called Coverage).
  - Resolve 404s, broken internal links, and redirect loops.
  - Use 301 redirects sparingly and avoid long chains that slow down crawlers.
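One way to test robots.txt changes before they ship is a small script that asserts which URLs must stay crawlable and which must stay blocked. The sketch below uses Python's standard `urllib.robotparser`; the draft rules, the example.com URLs, and the `check_rules` helper are all illustrative assumptions. Python's parser does not replicate every detail of how Google matches rules (wildcard handling in particular), so treat it as a sanity check rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules; the paths are placeholders, not recommendations.
DRAFT_ROBOTS_TXT = """\
User-agent: *
Disallow: /calendar/
Disallow: /search
Allow: /assets/
"""


def check_rules(robots_txt, must_allow, must_block, user_agent="Googlebot"):
    """Return a list of problems; an empty list means every check passed."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in must_allow:
        if not parser.can_fetch(user_agent, url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if parser.can_fetch(user_agent, url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


problems = check_rules(
    DRAFT_ROBOTS_TXT,
    must_allow=[
        "https://example.com/assets/app.js",  # rendering asset
        "https://example.com/services/",      # priority page
    ],
    must_block=[
        "https://example.com/calendar/2031/01/",  # crawl trap
        "https://example.com/search?q=shoes",     # internal search
    ],
)
for problem in problems:
    print(problem)
```

Running a check like this in a deployment pipeline catches the most damaging mistake, an accidental `Disallow: /`, before crawlers ever see it.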

Think of crawlability as your site’s highway system: search engines are the cars, and your XML sitemap, robots.txt, and internal links are the road signs. If the signage is confusing (or if there are too many detours) traffic won’t flow to your most important destinations.

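For answer engines, you can also add an llms.txt file. It is a proposed convention rather than an established standard: a Markdown file at the site root that gives language models a curated summary of your site and links to its most important pages. Unlike robots.txt, which tells crawlers which URLs they may fetch, llms.txt does not control access. It is meant to help AI-driven experiences find and represent your key content, and support for it among AI crawlers is still uneven.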

Site Performance & Core Web Vitals

Speed and stability are no longer “nice-to-haves” in SEO; they’re prerequisites. In 2026, Google and Bing still rely heavily on Core Web Vitals as key signals of user experience, and AI-driven search features increasingly favor fast-loading, seamless sites that keep visitors engaged. The same is true for LLMs. Performance issues don’t just cost rankings; they increase bounce rates, lower conversions, and diminish trust.

Key Metrics: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), Cumulative Layout Shift (CLS).

| Metric | What It Measures | Target |
|---|---|---|
| LCP | Loading performance | Under 2.5 seconds |
| INP | Responsiveness: how quickly the page reacts to user actions | Under 200 ms |
| CLS | Visual stability | Under 0.1 (a unitless score, not a time) |
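To make these targets actionable in a report, a small helper can bucket measured values. This is a minimal sketch: the "good" limits are the targets listed above, while the "poor" cut-offs (4.0 seconds, 500 ms, 0.25) are Google's published thresholds added here for context. The `rate` function name is illustrative.

```python
# (good limit, poor limit) per metric. Values at or below the first number
# are "good"; values above the second are "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric, value):
    """Classify a Core Web Vitals measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


for metric, value in [("LCP", 2.1), ("INP", 350), ("CLS", 0.3)]:
    print(metric, value, rate(metric, value))
```

Google assesses these metrics at the 75th percentile of real-user visits, so feed the helper field data where you have it rather than a single lab run.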

Action Items Checklist

- Optimize Server Response Times
  - Invest in reliable hosting and CDNs.
  - Configure caching at server and browser levels.
  - Monitor TTFB (Time to First Byte) with tools like PageSpeed Insights or WebPageTest.

- Modernize Media Delivery
  - Serve next-gen image formats (WebP, AVIF).
  - Implement responsive images (srcset) for different devices.
  - Defer offscreen or non-critical images with lazy loading.

- Reduce Render-Blocking Resources
  - Minimize JavaScript execution and bundle size.
  - Use asynchronous loading (async/defer) for scripts.
  - Inline critical CSS and defer the rest.

- Monitor Dynamic & Interactive Content
  - Test INP regularly (slow scripts, third-party widgets, or heavy animations can drag responsiveness down).
  - Audit your site on both mobile and desktop with Core Web Vitals reports in Google Search Console.

- Prevent Visual Instability
  - Set explicit width and height attributes for images and embeds.
  - Avoid injecting ads, banners, or pop-ups without reserving layout space.
  - Test CLS across device sizes, especially mobile.

Mobile-First & Multi-Device Optimization

Mobile-first indexing is no longer new; it’s the standard. But in 2026, the conversation has shifted from simply “does your site work on mobile?” to “is your site optimized for all device experiences?” With search engines crawling mobile versions of websites as their primary reference point, and users increasingly browsing across tablets, foldables, and even voice and AR interfaces, multi-device optimization is a core technical SEO priority.

Why Mobile-First Matters in 2026

- Indexing Priority: Google predominantly uses the mobile version of a site for crawling and ranking. If your desktop and mobile experiences differ, the mobile one takes precedence.

- User Experience: Over 60% of global searches still come from mobile devices, and bounce rates skyrocket if content isn’t formatted properly for small screens.

- AI Search Interfaces: Generative results are often delivered in “mobile-first” design formats. If your mobile pages underperform, your visibility in AI summaries may be compromised.

Action Items Checklist

- Responsive Web Design
  - Use fluid grids, flexible images, and CSS breakpoints.
  - Test across multiple screen sizes, including foldables and tablets, not just standard smartphones.

- Content Parity
  - Ensure mobile versions contain the same core content, structured data, and metadata as desktop.
  - Avoid “stripped-down” mobile pages that hide important sections.

- Mobile Performance
  - Monitor Core Web Vitals specifically for mobile in Search Console.
  - Optimize fonts, buttons, and interactive elements for touch input.
  - Reduce mobile payload sizes; heavy scripts or oversized images hit mobile users hardest.

- Visual Stability
  - Test CLS (Cumulative Layout Shift) on smaller viewports where ads, pop-ups, or sticky headers can cause instability.
  - Reserve space for embeds, videos, and dynamic content to prevent shifting.

- Accessibility & Usability
  - Check color contrast, text size, and tap target spacing.
  - Make navigation menus simple, collapsible, and thumb-friendly.
  - Consider dark mode support, which is increasingly expected by users.

Don’t just test with browser emulators; use real devices. A page that looks perfect in Chrome DevTools might break on Safari or a mid-range Android. Regular multi-device QA is now a must-have part of every technical SEO audit.

Site Architecture & Internal Linking

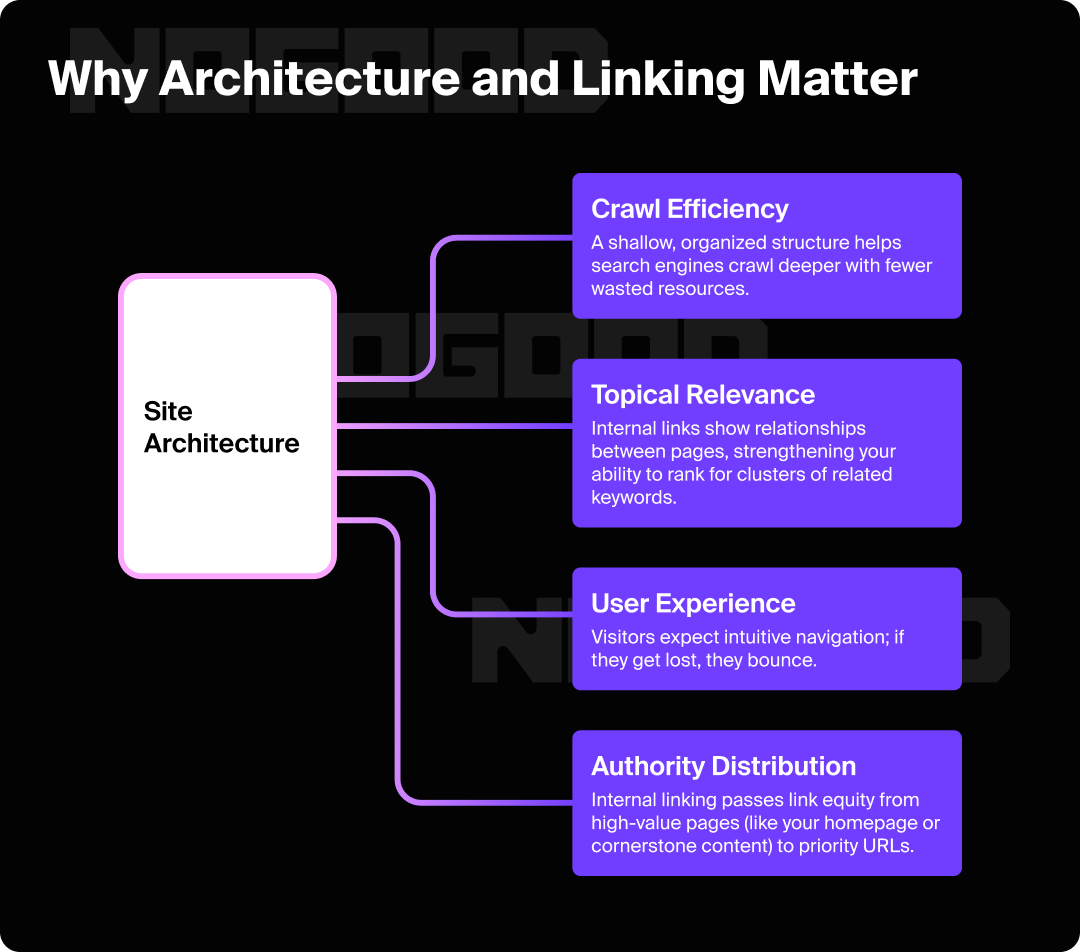

A logical, well-structured site architecture ensures that both users and bots can find the most important content quickly, while an intentional internal linking strategy distributes link equity and reinforces topical authority.

In 2026, this is more critical than ever. AI-powered search relies on clear signals of context and hierarchy, and messy site structures can prevent your most valuable pages from being surfaced in generative results.

Why Architecture & Linking Matter

- Crawl Efficiency: A shallow, organized structure helps search engines crawl deeper with fewer wasted resources.

- Topical Relevance: Internal links show relationships between pages, strengthening your ability to rank for clusters of related keywords.

- User Experience: Visitors expect intuitive navigation; if they get lost, they bounce.

- Authority Distribution: Internal linking passes link equity from high-value pages (like your homepage or cornerstone content) to priority URLs.

Action Items Checklist

- Design a Logical Hierarchy
  - Use a flat structure where most content is no more than 3–4 clicks from the homepage.
  - Organize content into topic clusters, with hub pages linking to subtopics.
  - Maintain consistent URL patterns that reflect hierarchy (e.g., /services/aeo/technical/).

- Build Strong Internal Linking
  - Add contextual links between related articles and resources.
  - Ensure every page has at least one internal link pointing to it, so there are no orphan pages.
  - Use descriptive anchor text that signals relevance (avoid generic “click here”).

- Fix Broken & Redirected Links
  - Audit regularly for broken internal links (404s).
  - Replace links pointing to redirected pages with direct destinations to reduce crawl waste.

- Leverage Navigation & Breadcrumbs
  - Implement breadcrumbs with schema markup to aid crawlers and users.
  - Ensure top-level categories and hubs are easily accessible from the main menu.

- Balance Depth vs. Breadth
  - Large sites may need deeper structures, but prioritize clear paths to high-value pages.
  - Avoid “infinite scroll” or JavaScript-heavy navigation that crawlers struggle to interpret.
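Click depth and orphan pages are easy to measure once you have an internal link graph, for example from a crawler export. The sketch below runs a breadth-first search from the homepage. The link graph, page list, and function names are made-up illustrations, and the depth limit is a parameter you would set to your own target.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_structure(links, all_pages, max_depth=4, home="/"):
    """Return (pages deeper than max_depth, pages no internal link path reaches)."""
    depths = click_depths(links, home)
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    orphans = sorted(set(all_pages) - set(depths))
    return too_deep, orphans


# Hypothetical link graph: page -> pages it links to.
links = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/aeo/"],
    "/services/aeo/": ["/services/aeo/technical/"],
    "/blog/": [],
}
all_pages = list(links) + ["/services/aeo/technical/", "/old-landing/"]

# max_depth=2 only so this tiny example produces a finding.
too_deep, orphans = audit_structure(links, all_pages, max_depth=2)
print("too deep:", too_deep)
print("orphans:", orphans)
```

Here `/old-landing/` is reported as an orphan because no page links to it, even though it exists in the page inventory.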

Content Duplication & Index Hygiene

With AI-powered search engines pulling from indexed pages to generate summaries, duplication doesn’t just waste crawl budget; it can confuse algorithms about which version to surface, weaken authority signals, and even exclude your content from AI overviews altogether.

Index hygiene is about ensuring that the right pages get indexed while thin, outdated, or duplicative ones are excluded. In 2026, this is vital for both SEO and AEO. Without it, you risk diluting your site’s relevance, spreading link equity too thin, and undermining user trust.

Why Duplication & Index Hygiene Matter

- Algorithm Clarity: Search engines need clear signals about which page to rank. Conflicting versions muddy the waters.

- Authority Consolidation: Duplicate or thin pages can sap ranking power from your strongest assets.

- User Trust: Surfacing outdated, staging, or boilerplate content hurts brand credibility.

- AI Summaries: Generative AI results favor clean, authoritative pages; duplication can keep your content out of those answers.

Action Items Checklist

- Canonicalization Consistency
  - Use canonical tags to identify the preferred version of each page.
  - Align canonical tags with redirects and XML sitemaps to avoid mixed signals.
  - Standardize domain variants (HTTPS, www vs. non-www, trailing slash).

- Manage Dynamic & Faceted URLs
  - Handle parameters with canonical tags, consistent internal links, and robots.txt rules. Google retired the URL Parameters tool in Search Console, so it is no longer an option.
  - Block unnecessary variations (filters, tracking tags, sessions) in robots.txt.
  - Consolidate category and product variants where possible.

- Audit Thin & Low-Value Pages
  - Identify pages with little to no unique content.
  - Merge, expand, or prune content that doesn’t serve users or search engines.
  - Remove duplicate tag/category archives in CMS platforms.

- Control Indexation
  - Use noindex strategically on staging environments, internal search pages, or duplicate templates.
  - Ensure “noindex” pages are also excluded from sitemaps.
  - Periodically review indexed URLs in Search Console to spot anomalies.

- Monitor Content Updates
  - Keep high-value evergreen pages fresh with updated information.
  - Redirect or consolidate outdated content instead of letting it linger.
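Standardizing URL variants is easier to enforce when one function defines the preferred form and every canonical tag, sitemap entry, and internal link goes through it. The following is a minimal sketch with assumed preferences (HTTPS, a `www` host, trailing slashes on directory-style paths) and an assumed list of tracking parameters. It also assumes every input URL belongs to the same site. Swap in whatever conventions your site has actually chosen.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: parameters that never change page content on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "sessionid"}


def canonicalize(url, host="www.example.com"):
    """Map a URL variant to the single preferred form for this (hypothetical) site."""
    parts = urlsplit(url)
    # Drop tracking parameters and sort the rest so parameter order is stable.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in TRACKING_PARAMS
    )
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    # Add a trailing slash to directory-style paths, but not to files like guide.pdf.
    if not path.endswith("/") and "." not in last_segment:
        path += "/"
    # Force HTTPS and the preferred host, and drop any fragment.
    return urlunsplit(("https", host, path, urlencode(query), ""))


print(canonicalize("http://example.com/services/aeo?utm_source=news&page=2"))
print(canonicalize("https://www.example.com/guide.pdf"))
```

If two URLs return the same value from `canonicalize`, they should also share one canonical tag and resolve to one destination through redirects.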

Structured Data & Rich Results

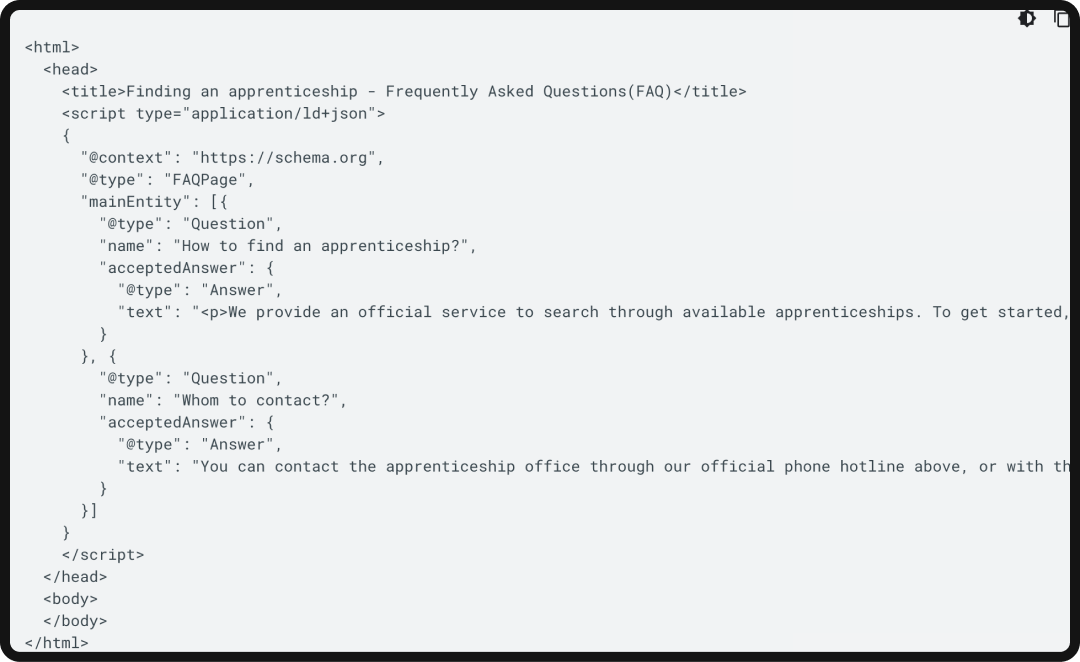

Search engines are no longer just indexing text; they’re parsing context. In 2026, structured data is one of the most important technical SEO levers for helping crawlers understand your content, categorize it correctly, and feature it in AI overviews and rich SERP elements. Without schema markup, your content risks being overlooked in favor of competitors who provide machine-readable clarity.

Rich results (like FAQs, product carousels, and featured snippets) not only increase visibility but also improve click-through rates by making your listing more engaging and informative.

Why Structured Data Matters in 2026

- AI-Driven Search: Generative engines lean heavily on structured data to summarize and attribute answers.

- SERP Differentiation: Schema can secure enhanced listings, drawing attention even when rankings are similar.

- Index Precision: Structured data helps search engines interpret page purpose and reduces ambiguity.

- Future-Proofing: As SERPs evolve into multimodal experiences (text, video, shopping, voice), schema provides the hooks needed to stay competitive.

Action Items Checklist

- Implement Schema Markup
  - Use schema for articles, products, FAQs, local business info, events, and reviews where relevant.
  - For ecommerce, prioritize Product, Offer, and Review schema.
  - For B2B and lead-gen sites, leverage Organization, Service, and FAQPage.

- Validate Your Markup
  - Test with Google’s Rich Results Test and the Schema.org Schema Markup Validator.
  - Fix warnings and errors before deploying.
  - Revalidate after every major site update.

- Monitor Rich Result Eligibility
  - Use Search Console’s “Enhancements” reports to track schema coverage.
  - Watch impressions and clicks for structured snippets; measure CTR changes when new schema is implemented.

- Align With Content
  - Ensure schema matches what’s on the page. Misleading markup (e.g., fake reviews) can trigger penalties.
  - Keep structured data updated alongside content. Outdated schema is as harmful as outdated copy.

- Leverage Emerging Types
  - Experiment with schema types suited to voice and video, such as Speakable (voice search) or VideoObject. Check Google’s current rich result documentation first, because support changes over time; HowTo rich results, for example, have been retired.
  - Consider multimodal signals for AI summarization, such as marking up podcasts, tutorials, or interactive elements.
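Generating markup from the same data that renders the page is one way to keep schema aligned with content. As a minimal sketch, the helper below builds FAQPage JSON-LD from question and answer pairs; the `faq_jsonld` name is illustrative, and the sample pair is taken from this article's own FAQ section.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def faq_jsonld(pairs):
    """Build a JSON-LD script tag for schema.org FAQPage from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so answer text can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return SCRIPT_OPEN + body + SCRIPT_CLOSE


markup = faq_jsonld([
    (
        "What are the 4 types of SEO?",
        "Technical SEO, on-page SEO, off-page SEO, and local SEO.",
    ),
])
print(markup)
```

The output still needs to pass the Rich Results Test, and the questions and answers must be visible on the page itself for the markup to be considered valid.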

Analytics, Monitoring & Reporting

A technical SEO (or AEO) audit isn’t complete once the fixes are implemented; it only works if you can measure impact, monitor changes, and catch issues before they snowball. In 2026, analytics and reporting have become more complex.

With AI-driven search altering traffic patterns and privacy regulations reshaping analytics tools, marketers need a robust measurement framework that goes beyond surface-level metrics.

Why Monitoring Matters

- Search Engines Change Constantly: Algorithm updates, SERP redesigns, and new AI overviews can shift visibility overnight.

- Performance Tracking: Without measuring Core Web Vitals, crawl rates, and indexation over time, you won’t know whether fixes are holding up.

- Business Alignment: SEO isn’t just about rankings; it must tie back to conversions, revenue, and ROI.

Action Items Checklist

- Use Search Console & Bing Webmaster Tools
  - Monitor the Page Indexing (formerly Index Coverage), Page Experience, and Core Web Vitals reports.
  - Track impressions, clicks, and CTR for priority pages.
  - Watch for sudden drops in indexation, which can indicate crawl errors or penalties.

- Leverage Third-Party Audit Tools
  - Tools like Ahrefs Webmaster Tools, Screaming Frog, SEMrush, and Sitebulb provide deeper crawl and technical diagnostics.
  - Set up recurring crawls to catch regressions after site changes.

- Implement Server Log Monitoring
  - Analyze how bots are actually crawling your site versus how you expect them to.
  - Spot crawl waste (bots spending time on unimportant URLs).
  - Track crawl frequency of your highest-value pages.

- Build Custom Dashboards
  - Connect GA4, Search Console, and third-party SEO APIs into Looker Studio or other BI tools.
  - Report on technical KPIs: indexation rate, error pages, Core Web Vitals performance, crawl distribution.
  - Layer in business metrics like conversion rates and revenue impact.

- Set Up Alerts
  - Use tools to notify your team when Core Web Vitals slip, crawl errors spike, or indexation drops.
  - Monitor server uptime and response times with automated alerts.
  - Keep Search Console email notifications enabled and run anomaly detection on your exported Search Console data, so you know right away when something goes wrong.
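An alert rule does not need to be sophisticated to be useful. The sketch below flags a metric, such as daily indexed-page counts or clicks, when the latest value falls well below its recent average. The 10% threshold, the 7-point window, the `dropped` name, and the sample numbers are all assumptions to tune against your own data.

```python
from statistics import mean


def dropped(history, threshold=0.10, window=7):
    """True when the latest value is more than `threshold` below the mean of the prior `window` values."""
    if len(history) < 2:
        return False  # nothing to compare against yet
    baseline = mean(history[-(window + 1):-1])
    if baseline <= 0:
        return False
    return (baseline - history[-1]) / baseline > threshold


# Hypothetical daily counts of indexed pages.
steady = [1000, 1010, 990, 1005, 980]
sudden_drop = [1000, 1010, 990, 1005, 820]

print(dropped(steady))
print(dropped(sudden_drop))
```

A rule like this can run on a schedule against whatever export feeds your dashboard and post to the team channel when it returns `True`.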

Localization & Emerging Considerations

As search evolves, new Technical SEO requirements emerge, especially around localization, AI-driven indexing, and multimodal SERPs. In 2026, optimizing for global and local audiences isn’t just about translation; it’s about ensuring your site’s technical foundation supports diverse languages, regions, and device experiences.

At the same time, the rise of AI crawlers and generative search means sites must prepare for engines that consume, interpret, and summarize content differently than traditional bots.

Why It Matters in 2026

- Global Reach: Even small businesses now attract international audiences, and poorly localized setups can block visibility.

- AI Crawlers: Search engines and third-party AIs are indexing in new ways, often preferring structured and well-architected content.

- SERP Shifts: Voice search, visual search, and multimodal results mean technical optimization extends beyond text-based pages.

Action Items Checklist

- Localization & International SEO
  - Implement hreflang tags correctly to signal language and regional targeting.
  - Avoid duplicate content issues across localized versions.
  - Create city-specific service pages where relevant for local businesses.
  - Ensure hosting/CDNs deliver fast response times in target regions.

- Prepare for AI-Driven Search
  - Audit how your content appears in AI overviews (Google, Bing, Perplexity).
  - Provide clean schema markup, FAQ content, and structured answers to common questions.
  - Monitor traffic shifts from AI-generated answers versus traditional organic clicks.

- Support Multimodal Search
  - Optimize media with structured data (VideoObject, ImageObject, PodcastEpisode).
  - Add transcripts and captions to audio/video assets for accessibility and indexation.
  - Ensure images and videos use descriptive alt text and filenames for visual search engines.

- Compliance & Accessibility
  - Meet evolving web standards (WCAG updates, ADA compliance).
  - Factor in regional privacy laws (GDPR, CCPA, and emerging AI regulations) when setting up analytics and tracking.
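Most hreflang errors come from incomplete sets: every localized version should list all alternates, including itself, and ideally an `x-default` fallback. Generating the tags from one mapping keeps the set identical on every version. The sketch below is illustrative only; the `hreflang_tags` helper, the locales, and the example.com URLs are assumptions.

```python
from html import escape


def hreflang_tags(alternates, default_locale):
    """Return the full set of hreflang link tags, to be emitted on every localized version."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(locale)}" href="{escape(url)}" />'
        for locale, url in sorted(alternates.items())
    ]
    # x-default tells search engines which version to use when no locale matches.
    fallback = escape(alternates[default_locale])
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}" />')
    return tags


# Hypothetical localized versions of one page.
alternates = {
    "en-us": "https://example.com/pricing/",
    "en-gb": "https://example.com/uk/pricing/",
    "de-de": "https://example.com/de/preise/",
}
tags = hreflang_tags(alternates, default_locale="en-us")
print("\n".join(tags))
```

Because the same list is printed on all three pages, each version references itself and the references stay reciprocal.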

Step-by-Step: How to Run a Technical SEO Audit

A technical SEO audit isn’t just a box-ticking exercise. It’s a structured process to uncover issues, prioritize fixes, and strengthen the foundation of your site. In 2026, the audit must account for both traditional search engine requirements and emerging AI-driven discovery models.

Here’s a streamlined workflow you can follow:

Step 1: Crawl Your Site

- Use a tool like Screaming Frog, Sitebulb, or SEMrush to perform a full crawl.

- Identify broken links, redirect chains, duplicate title tags, and thin pages.

- Compare crawl output against your XML sitemap to spot missing or orphaned URLs.
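The sitemap comparison in this step comes down to two set differences. As a rough sketch, assuming you can export the crawled URLs from your audit tool as a plain list, the code below parses a sitemap with Python's standard XML library and reports both gaps. The sample sitemap, URLs, and function names are made up for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap contents.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/services/</loc></url>
  <url><loc>https://example.com/old-landing/</loc></url>
</urlset>"""


def sitemap_urls(xml_text):
    """Extract every <loc> value from a standard (non-index) XML sitemap."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def compare(sitemap, crawled):
    """Report URLs that appear on only one side of the comparison."""
    return {
        # Listed but never reached by following links: possible orphans.
        "in_sitemap_not_crawled": sorted(sitemap - crawled),
        # Reachable but not listed: possibly missing from the sitemap.
        "crawled_not_in_sitemap": sorted(crawled - sitemap),
    }


crawled = {
    "https://example.com/",
    "https://example.com/services/",
    "https://example.com/blog/",
}
report = compare(sitemap_urls(SAMPLE_SITEMAP), crawled)
print(report)
```

Normalize both lists the same way (protocol, host, trailing slash) before comparing, or cosmetic differences will show up as false gaps.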

Step 2: Check Indexation

- Review Google Search Console and Bing Webmaster Tools for index coverage.

- Audit which URLs are indexed vs. excluded (look for staging, test, or low-value pages slipping in).

- Verify canonical tags and noindex directives align with your indexation strategy.

Step 3: Evaluate Performance & Core Web Vitals

- Run diagnostics with PageSpeed Insights, Lighthouse, and WebPageTest.

- Track LCP, INP, and CLS on both mobile and desktop.

- Identify high-priority templates (home, category, product, blog) for optimization.

Step 4: Assess Mobile & Multi-Device Experience

- Test with Lighthouse or Chrome DevTools device emulation plus real devices (Google retired its standalone Mobile-Friendly Test).

- Check for content parity between mobile and desktop.

- Ensure navigation, forms, and interactive elements are touch-friendly.

Step 5: Review Site Architecture & Internal Linking

- Map your hierarchy; make sure priority pages are within 3-4 clicks of the homepage.

- Check for orphan pages and thin tag or category archives.

- Audit anchor text for internal links to ensure topical relevance.

Step 6: Audit Content Duplication & Index Hygiene

- Look for parameterized URLs, session IDs, and duplicated product or category pages.

- Ensure canonical tags and redirects are consistent.

- Consolidate or prune thin, outdated, or redundant pages.
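Redirect consistency is simple to check offline once you have exported your redirect rules as a source-to-target mapping. The sketch below follows each URL through that mapping and labels the result. The `trace_redirects` name, the hop limit, and the sample rules are illustrative assumptions.

```python
def trace_redirects(start, redirects, max_hops=5):
    """Follow a URL through a redirect map and return (chain, status)."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            return chain + [target], "loop"
        chain.append(target)
        if len(chain) - 1 > max_hops:
            return chain, "too long"
    # One hop is a normal redirect; two or more hops is a chain worth flattening.
    status = "chain" if len(chain) > 2 else "ok"
    return chain, status


# Hypothetical redirect rules: source path -> target path.
redirects = {
    "/old": "/older",
    "/older": "/new",
    "/a": "/b",
    "/b": "/a",
    "/promo": "/sale",
}

for url in ["/old", "/a", "/promo", "/new"]:
    print(url, trace_redirects(url, redirects))
```

Any URL labeled `chain` should have its rule rewritten to point straight at the final destination, and canonical tags should reference that same final URL.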

Step 7: Validate Structured Data

- Run Rich Results Test on key templates (articles, products, FAQs, organization schema).

- Fix warnings and errors before publishing.

- Monitor enhancement reports in Search Console for coverage.

Step 8: Analyze Server Logs

- Confirm how often bots are crawling your most valuable pages.

- Spot crawl waste on low-value or duplicate URLs.

- Adjust robots.txt or internal linking to guide crawl priorities.
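For a first pass at log analysis, counting bot requests per path already answers both questions in this step. The sketch below assumes access logs in the common "combined" format and matches bots by user-agent substring; the regular expression, the `bot_hits` name, and the sample log lines are illustrative. User agents can be spoofed, so confirm real Googlebot traffic (for example through reverse DNS) before drawing firm conclusions.

```python
import re
from collections import Counter

# Captures the request URL and user agent from a combined-format log line.
LINE_RE = re.compile(
    r'"(?:GET|HEAD) (?P<url>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def bot_hits(lines, bot_token="Googlebot"):
    """Return (hits per path, number of hits on parameterized URLs) for one bot."""
    by_path = Counter()
    with_params = 0
    for line in lines:
        match = LINE_RE.search(line)
        if not match or bot_token.lower() not in match.group("agent").lower():
            continue
        url = match.group("url")
        by_path[url.split("?", 1)[0]] += 1
        if "?" in url:
            with_params += 1  # candidate crawl waste
    return by_path, with_params


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Hypothetical log lines.
sample_log = [
    f'66.249.66.1 - - [10/Jan/2026:10:00:00 +0000] "GET /services/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Jan/2026:10:00:05 +0000] "GET /products/?sessionid=abc HTTP/1.1" 200 812 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Jan/2026:10:00:09 +0000] "GET /products/?sessionid=def HTTP/1.1" 200 812 "-" "{GOOGLEBOT}"',
    f'203.0.113.7 - - [10/Jan/2026:10:01:00 +0000] "GET /services/ HTTP/1.1" 200 5120 "-" "{BROWSER}"',
]

by_path, with_params = bot_hits(sample_log)
print(by_path.most_common())
print("parameterized hits:", with_params)
```

In this sample, two of three bot requests went to session-ID variants of one page, which is the kind of pattern that justifies a robots.txt rule or an internal linking fix.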

Step 9: Set Up Monitoring & Dashboards

- Connect GA4, Search Console, and SEO tools into Looker Studio or other BI platforms.

- Track crawlability, Core Web Vitals, indexation, and CTR regularly.

- Establish alerts for sudden drops in traffic or performance regressions.

Step 10: Prioritize & Document Fixes

- Not all issues are equal; focus on those with the highest impact on visibility and user experience.

- Create a clear action plan with owners, timelines, and expected outcomes.

- Re-crawl and re-test after fixes to confirm improvements.

Run a full technical audit at least twice a year, but maintain ongoing monitoring. The most successful SEO teams treat auditing as a continuous feedback loop, not a one-time project.

FAQs: Technical SEO Checklist 2026

What’s included in technical SEO?

Technical SEO covers the behind-the-scenes optimizations that help search engines crawl, index, and understand your website. This includes site speed, mobile optimization, XML sitemaps, robots.txt, structured data, canonicalization, internal linking, and overall site architecture.

What are the 4 types of SEO?

SEO is generally divided into four categories:

- Technical SEO: Ensuring your site is crawlable, indexable, and optimized for performance.

- On-page SEO: Optimizing content, metadata, and keyword targeting.

- Off-page SEO: Building backlinks and external authority signals.

- Local SEO: Optimizing for location-specific queries and map results.

How do you do a technical SEO audit?

A technical SEO audit involves systematically checking your site’s crawlability, indexation, performance, and structure. The process includes:

- Crawling your site with an audit tool.

- Reviewing index coverage in Search Console.

- Testing Core Web Vitals and mobile usability.

- Auditing site architecture, internal linking, and duplication.

- Validating structured data.

- Monitoring logs and analytics for crawl efficiency and performance.

The goal is to identify errors, prioritize fixes, and strengthen the site’s technical foundation.

Why is technical SEO important in 2026?

In 2026, technical SEO is essential because search engines and AI-driven platforms demand fast, stable, and well-structured sites. Without a solid technical foundation, even the best content may fail to rank or be excluded from AI-generated overviews. Technical SEO ensures both humans and bots can easily navigate, understand, and trust your site.
