Search is no longer just a text box.

People are now searching with images, voice commands, screenshots, videos, and even gestures, often in combination. Welcome to the era of multimodal search, where users interact with AI-powered engines using whatever mode makes sense in the moment.

For marketers, this changes the game. It’s not enough to optimize for keywords or rank in blue links. You need to think about how your brand shows up when someone uploads a product photo, records a voice query, or drops a video into a search bar.

In this guide, we’ll break down what multimodal search is, why it matters, how it works in the real world, and, most importantly, how to optimize your content and strategy to stay discoverable as AI reshapes search as we know it.

What Is Multimodal Search?

Multimodal search is a search experience that lets users build a query from multiple types of input, like text, images, voice, and even video, used at the same time or in combination. Instead of relying solely on keywords, multimodal search understands the context and meaning behind different data types, delivering more accurate and relevant results.

What does this look like in action? Here’s an example:

Instead of typing “red floral dress,” a user could upload a photo of a dress they like and ask, “Do you have something like this in red, in a midi length?” That’s multimodal.

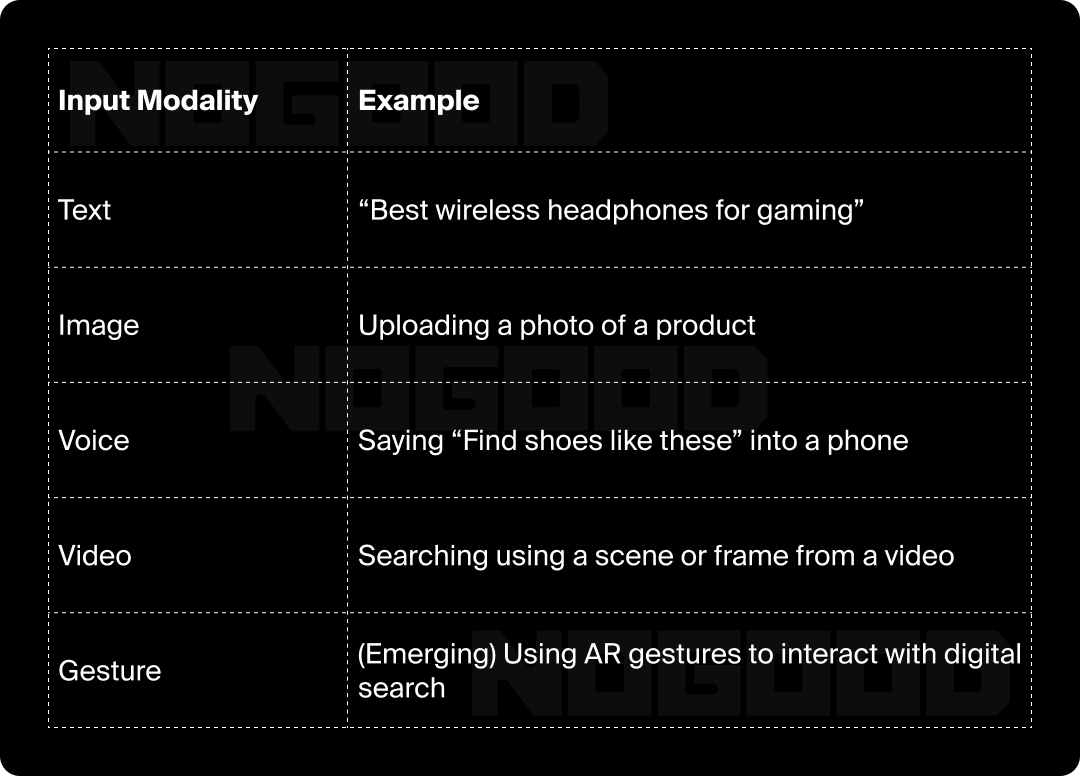

Putting the “Multi” in Multimodal

“Multimodal” refers to different modes of communication or data input. In the context of search, the most common are text, images (including screenshots), voice, and video.

Search engines or AI models trained in multimodal understanding can process and connect these inputs to return smarter, richer, and more personalized results.

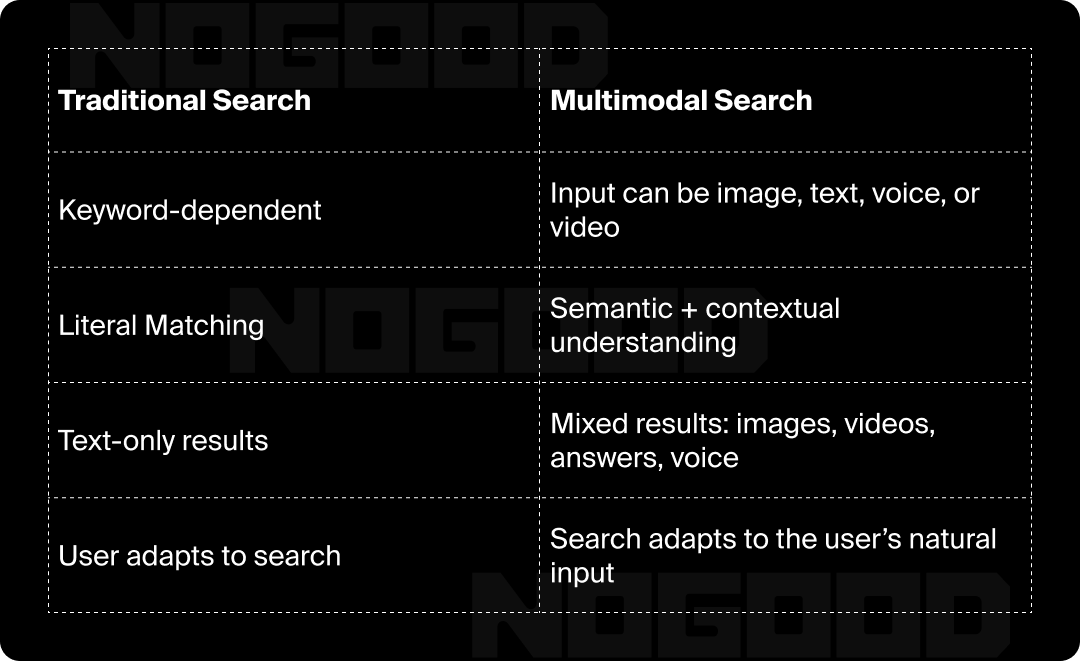

How Is Multimodal Search Different From Traditional Search?

So, what makes multimodal search different? Well, if it isn’t already obvious, multimodal search goes beyond what a basic search engine can do. It uses machine learning to interpret visual, auditory, and other non-text signals, while traditional search engines rely on good ol’ fashioned text input and keyword matching.

Here’s a quick comparison:

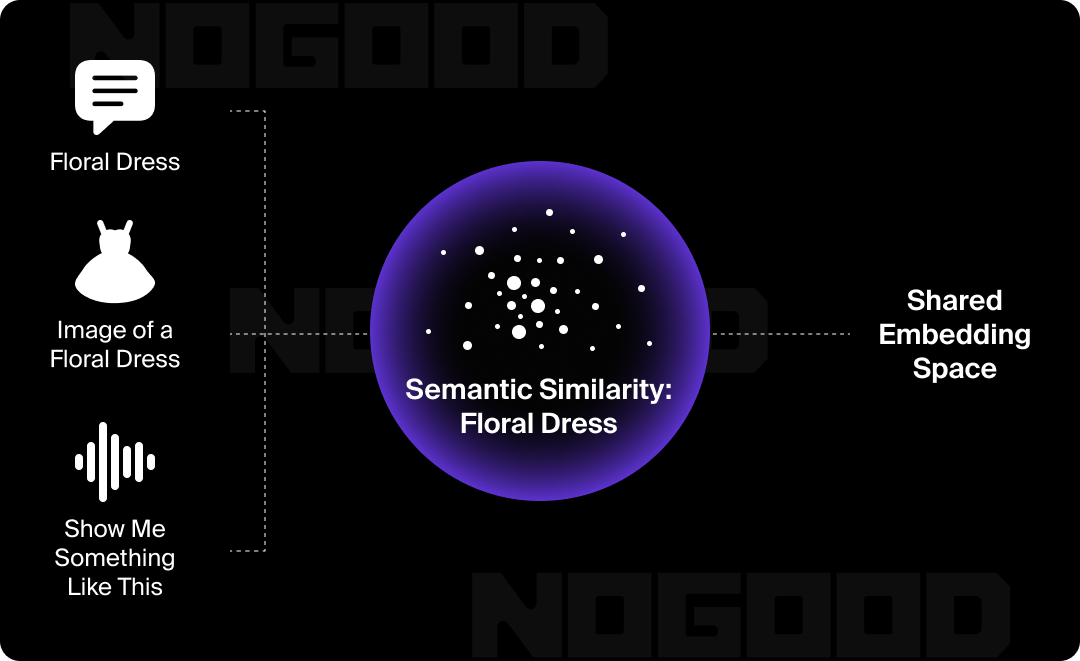

Multimodal search models work by creating a shared “embedding space” where different types of input (a photo, a voice prompt, or a written question) can be compared based on meaning, not format.

Why It Matters

Tools like Google Lens, TikTok Visual Search, and Amazon’s Titan Multimodal Embeddings are redefining how people search and discover products, content, and information.

As AI continues to evolve, users will increasingly expect search experiences that understand them as intuitively as a human would, across images, sounds, language, and even movement.

What Is Multimodal Search in AI?

Multimodal search in AI refers to the use of artificial intelligence models that can understand and connect information across different types of inputs, like text, images, and audio, by translating them into a shared semantic space. This means AI can take a photo, a voice prompt, or a block of text and interpret the meaning behind each, allowing it to deliver unified and contextually relevant results.

In simple terms, AI doesn’t just see a photo as pixels; it understands it as “a red floral dress” and can connect that to spoken or written queries, like “show me similar dresses in a midi length.”

How It Works: The Role of Embeddings

To make sense of these different input types, AI systems use embedding models. These models convert data, whether it’s a sentence, an image, or a sound, into mathematical representations called vectors. These vectors live in a shared space, allowing AI to compare different inputs based on their meaning, not just their format.

All inputs are mapped into the same multimodal vector space, allowing the AI to retrieve results that are semantically similar, even if the search input and content format don’t match.
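To make the “shared space” idea concrete, here is a minimal Python sketch of how retrieval in that space could work. The three catalog items and their 4-number vectors are invented for illustration (real embedding models output hundreds or thousands of dimensions), and the query vector stands in for whatever an embedding model would return for a spoken or typed query. Cosine similarity is one common way to compare embeddings; using it here is an assumption, not a claim about any specific product.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_meaning(query_vec: list[float], catalog: dict[str, list[float]]) -> list[str]:
    """Return catalog item names sorted from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), name) for name, vec in catalog.items()]
    return [name for _, name in sorted(scored, reverse=True)]

# Hypothetical embeddings: the numbers are invented for illustration only.
catalog = {
    "photo: red floral midi dress": [0.9, 0.8, 0.1, 0.0],
    "text: blue denim jacket": [0.1, 0.0, 0.9, 0.3],
    "video: summer dress lookbook": [0.7, 0.6, 0.2, 0.1],
}
# Pretend this vector came from embedding the spoken query "red dress with flowers".
query = [0.85, 0.75, 0.15, 0.05]

print(rank_by_meaning(query, catalog))
# → ['photo: red floral midi dress', 'video: summer dress lookbook', 'text: blue denim jacket']
```

The photo and the video outrank the text item even though the query arrived in a different format: the comparison happens on the vectors, not on file types.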

Joint Embeddings vs. Single-Modality Models

In single-modality setups, separate models process images and text independently, so their outputs can’t be compared directly. Multimodal models like Contrastive Language–Image Pretraining (CLIP) or Titan Multimodal Embeddings are trained so that related images and text land close together in one shared embedding space, which is how they learn the relationships between modalities.

This is what allows:

- Google Lens to show you results based on both the image and your follow-up text.

- Amazon Bedrock to power voice + image product discovery.

- TikTok to deliver results when users search with both visuals and captions.
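How does a model like CLIP learn that shared space? It is trained with a contrastive objective that pulls matching image–caption pairs together and pushes mismatched pairs apart. The pure-Python sketch below computes a simplified version of that symmetric loss on invented 2-D vectors. In real CLIP training, the vectors come from image and text encoders over large batches, and the temperature is learned rather than fixed at 0.07 as it is here.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def cross_entropy(logits, target):
    """Softmax cross-entropy for one row of scores, computed stably."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[target]

def clip_style_loss(image_vecs, text_vecs, temperature=0.07):
    """Symmetric contrastive loss: image i should match caption i, and vice versa."""
    n = len(image_vecs)
    # logits[i][j] = similarity of image i and caption j, sharpened by the temperature
    logits = [[cosine(image_vecs[i], text_vecs[j]) / temperature for j in range(n)]
              for i in range(n)]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(
        cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2

# Invented 2-D "embeddings" for two images and their captions.
images = [[1.0, 0.0], [0.0, 1.0]]
matched_captions = [[0.9, 0.1], [0.1, 0.9]]
swapped_captions = [[0.1, 0.9], [0.9, 0.1]]

print(round(clip_style_loss(images, matched_captions), 4))  # small: pairs line up
print(round(clip_style_loss(images, swapped_captions), 4))  # large: pairs are crossed
```

Training nudges the encoders to lower this loss, which is what eventually places a product photo and its description near each other.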

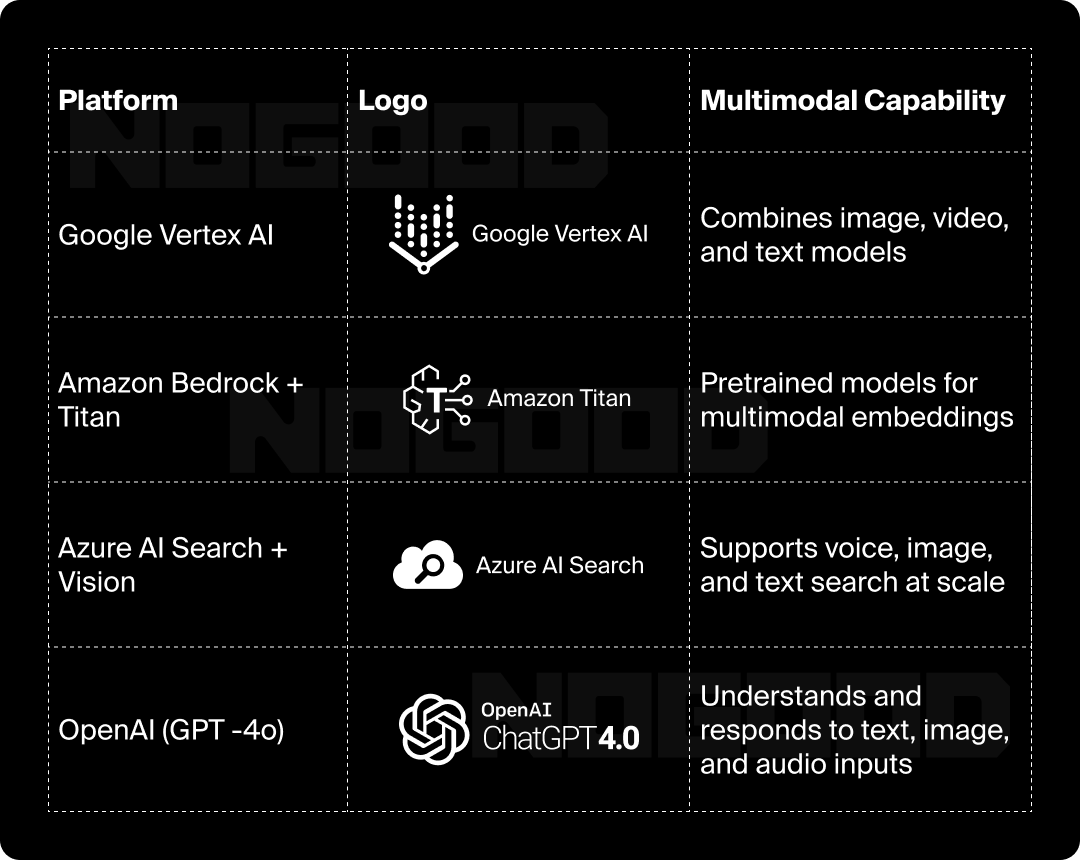

Tools & Platforms Using Multimodal AI

Several major platforms now offer multimodal AI capabilities out of the box, including Google’s Vertex AI, Microsoft’s Azure AI Search, and Amazon Bedrock.

These platforms power everything from product recommendations and visual discovery to voice-powered search assistants and context-aware chatbots.

Why This Matters for Marketers

Understanding how multimodal AI search works isn’t just technical trivia. It’s key to preparing your content for how users actually search today. AI isn’t just indexing your blog post; it can evaluate your product imagery, your FAQs, your overall tone of voice, and your video transcripts together.

The takeaway? Your brand needs to speak AI’s language across every format, not just text.

Why Multimodal Search Is Growing

Multimodal search isn’t just about flashy AI demos. It’s a direct response to how people naturally interact with the world using text, visuals, voice, and motion together. As AI catches up with human communication patterns, tech companies are racing to build search tools that feel more intuitive, accurate, and versatile.

Here are the key forces driving the rise of multimodal search:

1. Shifting User Behavior and Expectations

Consumers no longer want to adjust how they search to the platform they’re using; they want to search how they think. That means moving beyond the search bar (RIP).

- Visual-first platforms like TikTok, Pinterest, and Instagram are influencing how people browse and discover.

- Tools like Google Lens and Pinterest Lens have normalized using photos to search instead of words.

- Voice assistants (Alexa, Siri, Google Assistant) have trained users to search conversationally.

- Mobile-native behaviors (like snapping a picture and asking a question) are becoming standard, especially among younger demographics (hi, Gen Z!).

Users aren’t choosing “text vs. image” anymore. They expect search engines to handle all formats, seamlessly.

2. Rapid Advances in Multimodal AI Models

Behind the scenes, AI has gotten dramatically better at understanding and connecting different types of data.

- Models like CLIP and Titan Multimodal Embeddings can map images and text to the same vector space, and newer models extend the idea to audio and video.

- Tools like Vertex AI, Azure AI Search, and Amazon Bedrock offer plug-and-play access to multimodal capabilities.

- Multimodal LLMs like GPT-4o and Gemini 1.5 (and the many others following in their footsteps) can process and respond to text, images, and audio in a single interaction.

These breakthroughs make it viable for companies to deploy multimodal search experiences at scale, for customers and internal teams alike.

3. eCommerce & Visual Discovery Demand It

Multimodal search is especially powerful in commerce, where product discovery often starts with a visual cue rather than a keyword.

- “Shop the look” features let users click on a photo to find similar products.

- Visual similarity search helps users discover items they can’t easily describe with text.

- Voice-to-visual tools allow shoppers to say, “Find chairs like this one, but in blue,” and get immediate results.

As digital shopping becomes more immersive, multimodal search enables experiences that can convert better and feel more human.

4. Enterprise Use Cases Are Expanding

Beyond retail, enterprises are adopting multimodal search for internal and external applications:

- Healthcare: Searching across medical images and patient notes.

- Security: Monitoring via video, text logs, and audio feeds simultaneously.

- Content teams: Surfacing relevant assets (slides, PDFs, videos) across formats using AI internal search.

As AI becomes a core productivity layer, multimodal search is increasingly seen as essential for retrieving the right information in context, not just searching databases.

5. Search Engine Strategy Is Evolving

Tech giants are repositioning search itself to be multimodal by default:

- Google’s AI Overview (AIO) pulls in content from text, images, video, and user behavior signals.

- OpenAI’s ChatGPT (with image and voice input) is already reshaping how people interact with knowledge and brands.

- Amazon and Microsoft are integrating multimodal models into their search stacks and consumer products.

In this landscape, being discoverable means being multimodal, and brands that don’t adapt risk falling behind.

The Bottom Line

Multimodal search is growing because it’s a natural extension of how people think and communicate, finally made scalable by advances in AI. For marketers and business leaders, this shift represents more than a tech trend. Multimodal search is a fundamental change in how your audience finds, engages with, and evaluates your brand.

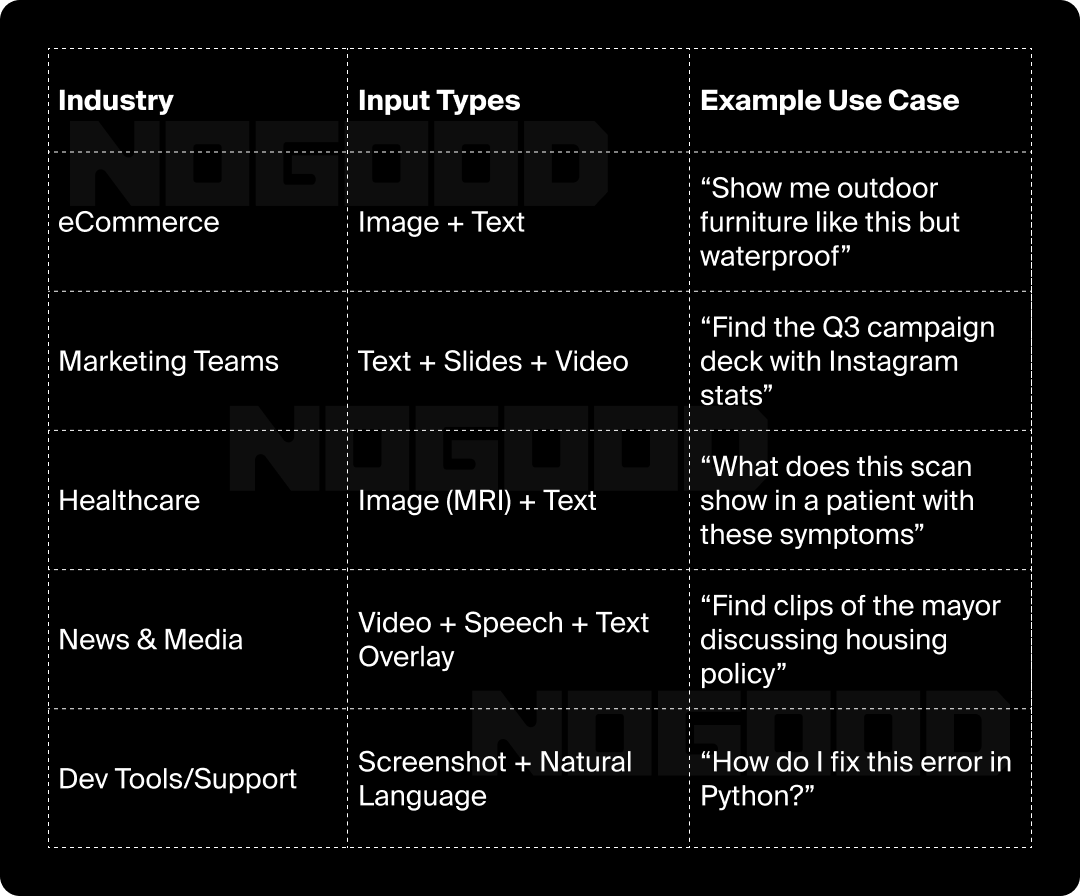

Multimodal Search in Action: Real-World Use Cases

Multimodal search is powering the experiences people use every day. From helping shoppers find products they can’t describe (been there), to enabling professionals to surface critical insights across formats, multimodal search unlocks new levels of usability, accuracy, and engagement.

Here are some real-world examples across verticals:

eCommerce: “Find me something like this”

Platforms like Amazon, ASOS, and IKEA use image-based search to help users discover products that match visual preferences, even if they don’t know the right keywords.

- A user uploads a photo of a chair, and the engine suggests visually similar items in the same price range.

- Some systems even pair image + text input (e.g., “like this lamp, but shorter and cheaper”) to return better results.
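One simple way to combine the two inputs is sketched below under clear assumptions: the photo is compared by embedding similarity, while “shorter” and “cheaper” are treated as metadata filters relative to the lamp in the photo. The catalog, vectors, prices, and heights are invented, and we assume some earlier step (for example, a language model or hand-written rules) has already turned the text into the hypothetical `shorter` and `cheaper` flags. Production systems vary; this is just one pattern, often called filtered or hybrid vector search.

```python
import math

def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def find_similar(photo_vec, reference, catalog, shorter=False, cheaper=False):
    """Rank items by visual similarity to the photo, keeping only those that
    satisfy the constraints parsed from the text ("shorter", "cheaper")."""
    results = []
    for item in catalog:
        if shorter and item["height_cm"] >= reference["height_cm"]:
            continue  # not shorter than the lamp in the photo
        if cheaper and item["price"] >= reference["price"]:
            continue  # not cheaper than the lamp in the photo
        results.append((cosine(photo_vec, item["vec"]), item["name"]))
    return [name for _, name in sorted(results, reverse=True)]

# Hypothetical catalog; the vectors stand in for image embeddings.
catalog = [
    {"name": "Brass arc lamp", "vec": [0.9, 0.2, 0.1], "height_cm": 45, "price": 80},
    {"name": "Brass floor lamp", "vec": [0.95, 0.1, 0.05], "height_cm": 150, "price": 200},
    {"name": "Brass mini lamp", "vec": [0.8, 0.3, 0.2], "height_cm": 30, "price": 40},
    {"name": "Paper pendant", "vec": [0.1, 0.9, 0.4], "height_cm": 25, "price": 35},
]
# The lamp in the user's photo (its embedding plus known attributes).
photo_lamp = {"vec": [0.9, 0.15, 0.1], "height_cm": 60, "price": 120}

print(find_similar(photo_lamp["vec"], photo_lamp, catalog, shorter=True, cheaper=True))
# → ['Brass arc lamp', 'Brass mini lamp', 'Paper pendant']
```

The floor lamp looks the most like the photo but is dropped by the filters, which is exactly the behavior the text part of the query asks for.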

Why it matters: Multimodal search lowers friction in the path to purchase, turning inspiration into conversion.

Content Management: Search Across Formats

Enterprise teams can adopt a multimodal search approach to improve internal productivity and surface relevant assets across massive content libraries.

Example:

A marketing manager types “slides from last year’s Q4 campaign with influencer quotes,” and the system returns:

- A PowerPoint deck

- A video snippet from a webinar

- Screenshots from an influencer’s Instagram story

- A copy doc in Google Drive

Why it matters: Teams no longer need to remember file names or exact phrasing; contextual discovery becomes possible.

Healthcare: Smarter Diagnostic Support

Hospitals and research institutions are using multimodal AI to search across patient records, lab results, and medical imagery.

- A doctor can input a symptom and an MRI scan, and the system cross-references both to suggest potential diagnoses or related case studies.

- Research models like Google’s Med-PaLM M are trained on medical images and text together to support diagnosis across modalities.

Why it matters: For high-stakes industries like medicine, multimodal search enables faster, more comprehensive decision-making.

Media & Entertainment: Search Inside Video & Audio

Newsrooms and video platforms are beginning to roll out multimodal search that can parse spoken word, visual content, and on-screen text.

Example:

A journalist researching a political figure can type: “clips of [X] talking about climate change,” and get:

- Timestamps in video interviews where the topic was mentioned

- Transcripts from podcasts

- Still frames with detected text about climate policy

Why it matters: Search becomes semantic rather than surface-level, uncovering moments and insights buried deep inside multimedia.

Developer & Knowledge Bases: Natural Language + Screenshots

Technical teams are using multimodal tools to improve help desks, documentation, and developer onboarding.

Example:

A user uploads a screenshot of a confusing error message and types: “how do I fix this in React?”

The engine parses the image, extracts the message, maps it to known issues in the documentation, and delivers step-by-step help.

Why it matters: When users can’t articulate what’s wrong, multimodal search bridges the gap between what they see and what they need.

Key Takeaway

Multimodal search is already shaping the way people find and interact with content, whether they’re shopping, researching, or troubleshooting. It removes the bottleneck of needing “perfect words” and enables more natural, frictionless discovery.

How Multimodal Search Impacts SEO & Marketing

The way people search, discover, and engage with content is changing. For marketers, that means adapting strategies to stay visible across text, image, voice, and video inputs, not just the traditional SERP.

SEO: New Ranking Signals & Discovery Paths

Multimodal inputs (like voice + image or image + text) mean users are searching in more natural, contextual ways. This disrupts how content is ranked and surfaced.

What changes:

- Visual content and metadata become ranking signals (e.g., alt text, image captions, visual layout)

- Voice inputs favor conversational, long-tail phrasing (which is why AI search and voice search overlap so much)

- AI search engines like Perplexity and ChatGPT generate responses from semantically relevant content, not just keyword-targeted pages.

What to do:

- Prioritize structured data and descriptive labeling across all content formats

- Optimize for entities, not just keywords

- Treat every asset (image, chart, quote) as searchable content
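“Optimize for entities” often starts with telling machines which profiles belong to the same brand. Here is a minimal sketch, assuming a hypothetical brand and placeholder URLs: schema.org `Organization` markup with `sameAs` links, built in Python and printed as a JSON-LD script tag. Whether a given engine uses this markup for entity matching is not something the markup alone can guarantee.

```python
import json

def organization_jsonld(name, url, logo_url, profile_urls):
    """Build schema.org Organization JSON-LD that ties a brand to its other profiles."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,
        # sameAs: other pages that identify the same entity (social profiles, wikis, etc.)
        "sameAs": profile_urls,
    }

# Hypothetical brand and placeholder URLs, for illustration only.
org = organization_jsonld(
    name="Example Audio Co.",
    url="https://www.example.com",
    logo_url="https://www.example.com/images/example-audio-logo.png",
    profile_urls=[
        "https://www.youtube.com/@exampleaudio",
        "https://www.linkedin.com/company/example-audio",
    ],
)

print('<script type="application/ld+json">')
print(json.dumps(org, indent=2))
print("</script>")
```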

Content Strategy: Format-Agnostic Planning Becomes Essential

Multimodal search engines don’t care if your content is a blog, video, infographic, or podcast. They care whether it answers the query clearly and completely.

What changes:

- Users might ask a question with an image or voice prompt and get a response pulled from a transcript, infographic, or code snippet

- One topic can (and should) be covered across multiple content types

What to do:

- Build flexible content hubs that include video, text, image, and audio assets

- Repurpose high-performing content into multimodal formats (e.g., turn a guide into a narrated slideshow, or a stat into a chart)

- Use descriptive metadata and cross-format tagging to improve discoverability

Brand Marketing: Visibility Beyond the SERP

As AI search platforms (ChatGPT, Gemini, Perplexity) become discovery engines, brand visibility increasingly happens outside traditional search engines, often without branded queries.

What changes:

- Users ask AI, “What are the best tools for influencer marketing?” or “What do I need for a gaming setup?”

- If your brand isn’t referenced in LLM training data, FAQs, or topically authoritative content, it’s unlikely to appear

What to do:

- Publish educational, source-worthy content in formats AI can crawl and interpret

- Ensure brand mentions exist in structured formats across the web (reviews, listicles, comparisons)

- Invest in Answer Engine Optimization (AEO), also called generative engine optimization (GEO), not just SEO

The Bottom Line

Multimodal search requires marketers to optimize the experience, not just the page. That means creating content that’s semantically rich, visually accessible, and usable across search types. The winners in this new era aren’t the best keyword optimizers; they’re the most helpful, most visible, and most adaptable brands.

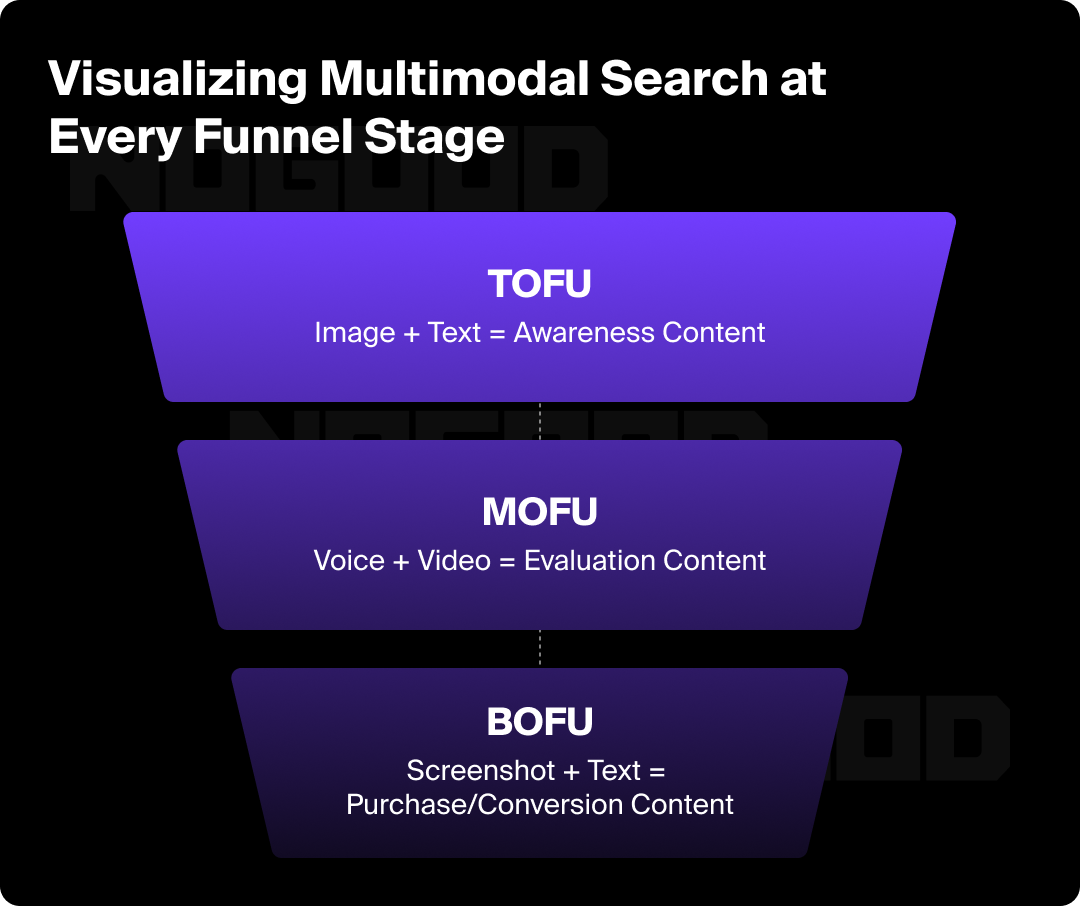

The Marketing Funnel & Multimodal Search

Multimodal search doesn’t just change how people search; it changes when and why they engage with content across the marketing funnel. From awareness to conversion, people are now using different input types (voice, images, screenshots, hybrid prompts) to get answers, discover brands, and make decisions.

Here’s how multimodal search shows up at each stage of the funnel:

Top-of-Funnel (Awareness)

Search Behavior:

Users ask broad, exploratory questions with multimodal inputs: “What’s this?” with an image, “Best headsets for beginners” via voice, or “Budget-friendly alternatives” as a follow-up to a screenshot.

Marketing Opportunities:

- Visual-First Content: Blog posts with comparison charts, alt text-rich product images, and captioned videos are better positioned to surface in visual + text hybrid queries.

- Conversational Answers: Use FAQ formats and natural language to surface in voice-based or AI-generated responses.

- Entity Alignment: Make sure your brand is associated with relevant categories and topics (not just keywords) to be pulled into AI search summaries.
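Conversational FAQ content can also be exposed as structured data. Below is a minimal sketch that builds schema.org `FAQPage` JSON-LD in Python. The headset questions and answers are hypothetical placeholder copy, and adding the markup does not by itself guarantee a rich result or a citation in an AI answer.

```python
import json

def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

# Hypothetical product Q&A, phrased the way people speak a query.
faq = faq_jsonld([
    ("Is this headset good for beginners?",
     "Yes. It works out of the box over USB, with no extra software required."),
    ("Does it work with a phone?",
     "Yes, through the included 3.5 mm cable."),
])

print('<script type="application/ld+json">')
print(json.dumps(faq, indent=2))
print("</script>")
```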

Middle-of-Funnel (Consideration)

Search Behavior:

Queries become more specific and evaluative: “Compare X vs Y,” “Reviews of [product in photo],” or “How does this work?” with a screenshot or voice input.

Marketing Opportunities:

- Multiformat Content Hubs: Offer side-by-side comparisons, video explainers, user-generated content, and technical specs, all with structured data.

- Schema-Rich Pages: Use structured data like Review, Product, and HowTo schema to appear across input types and surfaces.

- Brand Positioning in AI Search: Contribute content to forums, UGC platforms, and editorial mentions that LLMs crawl.
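To show what “schema-rich” can look like in practice, here is a minimal Python sketch that builds schema.org `Product` JSON-LD with an `Offer`, an `AggregateRating`, and a `Review`. The keyboard, brand, price, rating, and review text are all hypothetical placeholders; swap in real data from your catalog and reviews.

```python
import json

def product_jsonld(name, description, image_urls, brand, price, currency,
                   in_stock, rating_value, review_count, reviews):
    """Build schema.org Product JSON-LD with an Offer, an AggregateRating, and Reviews."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": image_urls,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
        "review": [
            {
                "@type": "Review",
                "author": {"@type": "Person", "name": author},
                "reviewRating": {"@type": "Rating", "ratingValue": stars, "bestRating": 5},
                "reviewBody": body,
            }
            for author, stars, body in reviews
        ],
    }

# Hypothetical product data, for illustration only.
product = product_jsonld(
    name="Wireless RGB Gaming Keyboard",
    description="Wireless RGB gaming keyboard with macro keys.",
    image_urls=["https://www.example.com/images/wireless-rgb-gaming-keyboard.jpg"],
    brand="ExampleBrand",
    price="89.99",
    currency="USD",
    in_stock=True,
    rating_value=4.6,
    review_count=128,
    reviews=[("Jordan", 5, "Macro keys were easy to set up.")],
)

print('<script type="application/ld+json">')
print(json.dumps(product, indent=2))
print("</script>")
```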

Bottom-of-Funnel (Conversion)

Search Behavior:

Users are now asking context-specific or intent-driven questions with urgency: “Where to buy this,” “Cheapest alternative to [product],” or “Does [brand] support this feature?”, sometimes initiated by visual inputs like screenshots from social media.

Marketing Opportunities:

- Local + Visual Optimization: Ensure store locations, images, and product availability are up to date and tagged properly.

- AI-Ready Content: Build conversion pages with clear, concise answers to “best for,” “buy now,” or “trusted by” prompts.

- Semantic CTAs: Embed action-focused language that LLMs can interpret clearly, e.g., “Start a free trial,” “Buy direct,” “Ships today.”

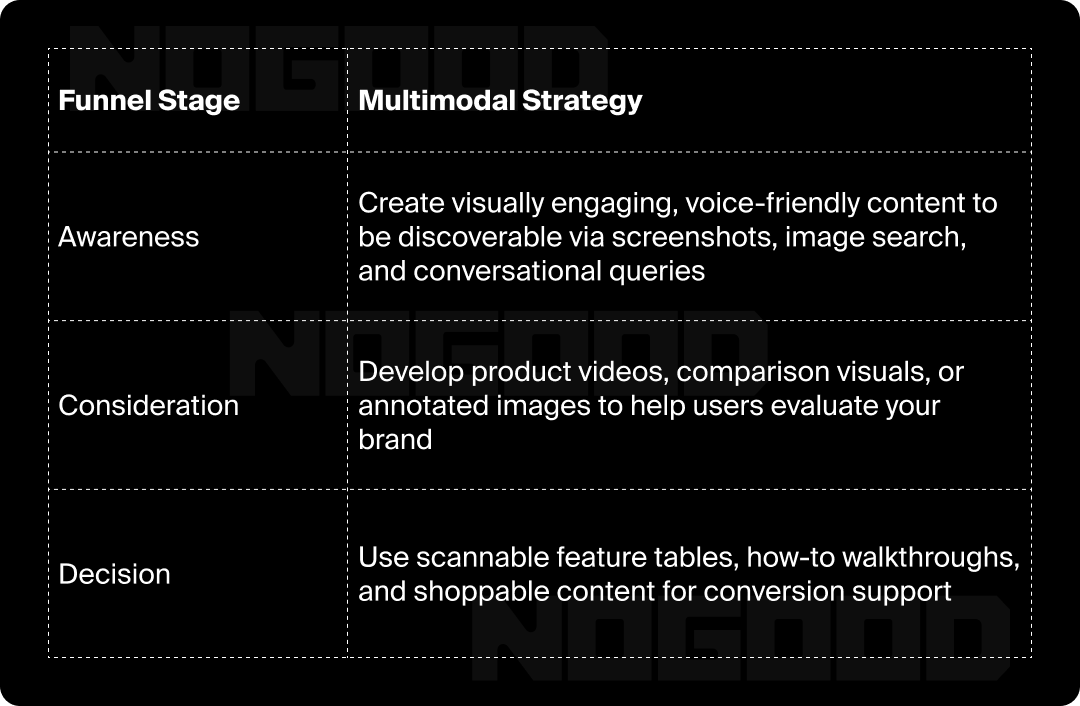

Check out the graphic below for a quick rundown.

How to Optimize for Multimodal Search

Multimodal search demands content and assets that work together across visual, voice, text, and other inputs. Here’s how you can future-proof your content strategy:

1. Structure Content for Multimodal Inputs

Modern AI models don’t just read content; they parse, segment, and understand it across modalities. Structuring your content makes it easier for them to interpret and surface your assets accurately.

- Use Semantic HTML: Structure pages with headings (<h2>, <h3>), bullet points, and clear sections to support layout-aware search engines and make your content easier for LLMs to parse.

- Add Descriptive Alt Text: Go beyond “image of product.” Include context, category, and relevant adjectives: “Wireless RGB gaming keyboard with macro keys.”

- Use Image & Video Schema Markup: Help engines understand what your media is about and how it connects to the on-page content.
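A practical way to act on the alt-text advice is to audit existing pages. The sketch below uses Python’s built-in `html.parser` to flag images with missing, empty, or generic alt text. The list of generic phrases and the three-word minimum are arbitrary heuristics chosen for illustration, not a standard. An empty `alt=""` is flagged only for review, because it is the correct choice for purely decorative images.

```python
from html.parser import HTMLParser

# Illustrative heuristics, not a standard: tune these for your own site.
GENERIC_ALT = {"image", "photo", "picture", "image of product", "product image"}
MIN_WORDS = 3

class AltTextAudit(HTMLParser):
    """Collect (src, issue) pairs for <img> tags with weak or missing alt text."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        alt = attributes.get("alt")
        if alt is None:
            self.issues.append((src, "missing alt attribute"))
        elif not alt.strip():
            self.issues.append((src, "empty alt: fine only if the image is decorative"))
        elif alt.strip().lower() in GENERIC_ALT or len(alt.split()) < MIN_WORDS:
            self.issues.append((src, f"generic alt text: {alt!r}"))

page = """
<img src="kb-01.jpg" alt="image of product">
<img src="kb-02.jpg" alt="Wireless RGB gaming keyboard with macro keys">
<img src="divider.png" alt="">
<img src="IMG_7283.jpg">
"""

audit = AltTextAudit()
audit.feed(page)
for src, issue in audit.issues:
    print(f"{src}: {issue}")
```

Only the descriptive keyboard image passes; the other three are listed for a human to fix or confirm.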

2. Make Content Embedding-Friendly

AI search engines and LLMs rely heavily on embeddings (mathematical representations of meaning) to understand and retrieve relevant content. Your job? Make sure your content is clear and coherent enough to be embedded effectively.

- Use crisp, standalone paragraphs rather than burying insights in long walls of text.

- Avoid fluff or jargon that dilutes meaning.

- Answer specific questions directly to improve retrieval accuracy in AI-driven results.

Pro Tip: Tools like ChatGPT, Perplexity, and Gemini are more likely to surface your content if it contains direct, well-structured responses to common queries.
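Retrieval systems typically split pages into passages before embedding them, which is one reason standalone paragraphs help. We don’t know how any particular AI engine chunks content, so treat this as an assumption-laden sketch: it splits text on blank lines, packs whole paragraphs into chunks under a word budget, and prefixes each chunk with its section heading so the passage still makes sense on its own. Real pipelines usually count tokens rather than words.

```python
def chunk_for_embedding(heading, text, max_words=120):
    """Group whole paragraphs into chunks of at most max_words words
    (a single longer paragraph becomes its own chunk), each prefixed with the heading."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current, count = [], [], 0
    for para in paragraphs:
        words = len(para.split())
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and count + words > max_words:
            chunks.append(current)
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append(current)
    return [heading + "\n\n" + "\n\n".join(group) for group in chunks]

section = """Multimodal search accepts many inputs.

Embeddings capture meaning across formats.

Short standalone paragraphs are easier for retrieval systems."""

for chunk in chunk_for_embedding("Make Content Embedding-Friendly", section, max_words=10):
    print(chunk)
    print("---")
```

A paragraph that buries its point in the middle of a long wall of text gets split or diluted by this kind of process; a crisp, self-contained paragraph survives intact.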

3. Optimize for Visual & Voice Search

Multimodal inputs often start with a photo or spoken prompt. That means your assets and copy need to hold up across non-text inputs.

- Label Images Meaningfully: File names like IMG_7283.jpg won’t cut it. Use names like nogood-multimodal-marketing-funnel.png.

- Add Contextual Captions: Include use cases and comparisons (“ideal for competitive FPS players”).

- Write Naturally: Voice search queries tend to be conversational; answer in plain language.
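Renaming files by hand doesn’t scale, so a small helper can turn a plain-language description into a descriptive, URL-safe file name. This is a minimal sketch; the function name and the optional brand prefix are our own illustrative choices, not a standard.

```python
import re
import unicodedata

def descriptive_filename(description, extension, prefix=""):
    """Turn a plain-language description into a lowercase, hyphenated file name."""
    # Strip accents (é -> e) so the name stays ASCII-only.
    ascii_text = unicodedata.normalize("NFKD", description).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
    if prefix:
        slug = f"{prefix}-{slug}"
    return f"{slug}.{extension.lstrip('.').lower()}"

print(descriptive_filename("Multimodal Marketing Funnel", "PNG", prefix="nogood"))
# → nogood-multimodal-marketing-funnel.png
print(descriptive_filename("Wireless RGB gaming keyboard (macro keys)", ".jpg"))
# → wireless-rgb-gaming-keyboard-macro-keys.jpg
```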

4. Choose the Right Hosting & Channels

If your images or videos aren’t crawlable, indexable, or embeddable, they won’t show up in multimodal results.

- Host media on SEO-friendly platforms like YouTube or Vimeo, or self-host it with structured data; either is a safer bet than Instagram embeds or proprietary players.

- Avoid hiding key visuals behind carousels or JavaScript.

- Ensure transcripts are available for all videos and audio content.
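Transcripts can live in structured data as well as on the page. Here is a minimal sketch, assuming a self-hosted video at placeholder example.com URLs: schema.org `VideoObject` JSON-LD, including its `transcript` property, built in Python. All field values are hypothetical.

```python
import json

def video_jsonld(name, description, thumbnail_url, upload_date, duration,
                 content_url, transcript):
    """Build schema.org VideoObject JSON-LD that includes the full transcript."""
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date
        "duration": duration,       # ISO 8601 duration: PT2M30S = 2 min 30 s
        "contentUrl": content_url,
        "transcript": transcript,
    }

# Hypothetical video metadata, for illustration only.
video = video_jsonld(
    name="How to program macro keys",
    description="A short walkthrough of assigning macros on a wireless keyboard.",
    thumbnail_url="https://www.example.com/images/macro-keys-thumbnail.jpg",
    upload_date="2025-01-15",
    duration="PT2M30S",
    content_url="https://www.example.com/videos/macro-keys.mp4",
    transcript="In this video, we'll show you how to assign a macro to any key.",
)

print('<script type="application/ld+json">')
print(json.dumps(video, indent=2))
print("</script>")
```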

5. Link Your Modalities Through Context

Multimodal search engines thrive when they can connect the dots across media. Help them out by tying everything together.

- Use internal links between articles, product pages, and media.

- Mention visuals explicitly in your copy (“As shown in the image below…”).

- Tag consistently across platforms (product name, audience, use case).

Think like a machine (but one pretending it’s human): The more context and semantic relationships you provide, the more likely your content is to rank, no matter the input format.

Multimodal Search Engines & Tools to Know

The multimodal search ecosystem is evolving fast, and new platforms are emerging that process and rank content based on more than just text. Here are the major players and tools marketers should keep on their radar, both for discovery and for optimization.

AI Multimodal Search Engines

These platforms process multiple inputs (like text, image, video, and voice) to surface content in smarter, context-rich ways:

1. Google Multisearch

- What It Is: Google’s Lens-powered search lets users combine images with text queries (e.g., snapping a picture of a chair and adding “in green”).

- Why It Matters: Visual content like product photos and infographics can now rank alongside text-based results.

- Key Takeaway: Optimize image metadata, captions, and schema markup to surface in Google Multisearch.

2. Perplexity AI

- What It Is: A conversational AI search engine that blends text, voice, and visual retrieval.

- Why It Matters: Perplexity is building toward multimodal contextual answers, citing sources directly from websites.

- Key Takeaway: Structure content clearly and build authority; your site could be cited in AI-generated summaries.

3. ChatGPT With Vision

- What It Is: Users can upload images and ask ChatGPT to interpret or search based on them.

- Why It Matters: Visual search behavior is shifting toward AI platforms, not just search engines.

- Key Takeaway: Create embeddable, clear, and well-labeled media assets that LLMs can interpret and reference.

4. Bing Copilot & Edge Sidebar

- What It Is: Microsoft’s AI-enhanced search and assistant can analyze webpages, images, and user prompts in real time.

- Why It Matters: Copilot can visually analyze websites and respond with embedded answers, making your content part of a dynamic AI experience.

- Key Takeaway: Focus on clarity, structure, and relevance to be pulled into Copilot responses.

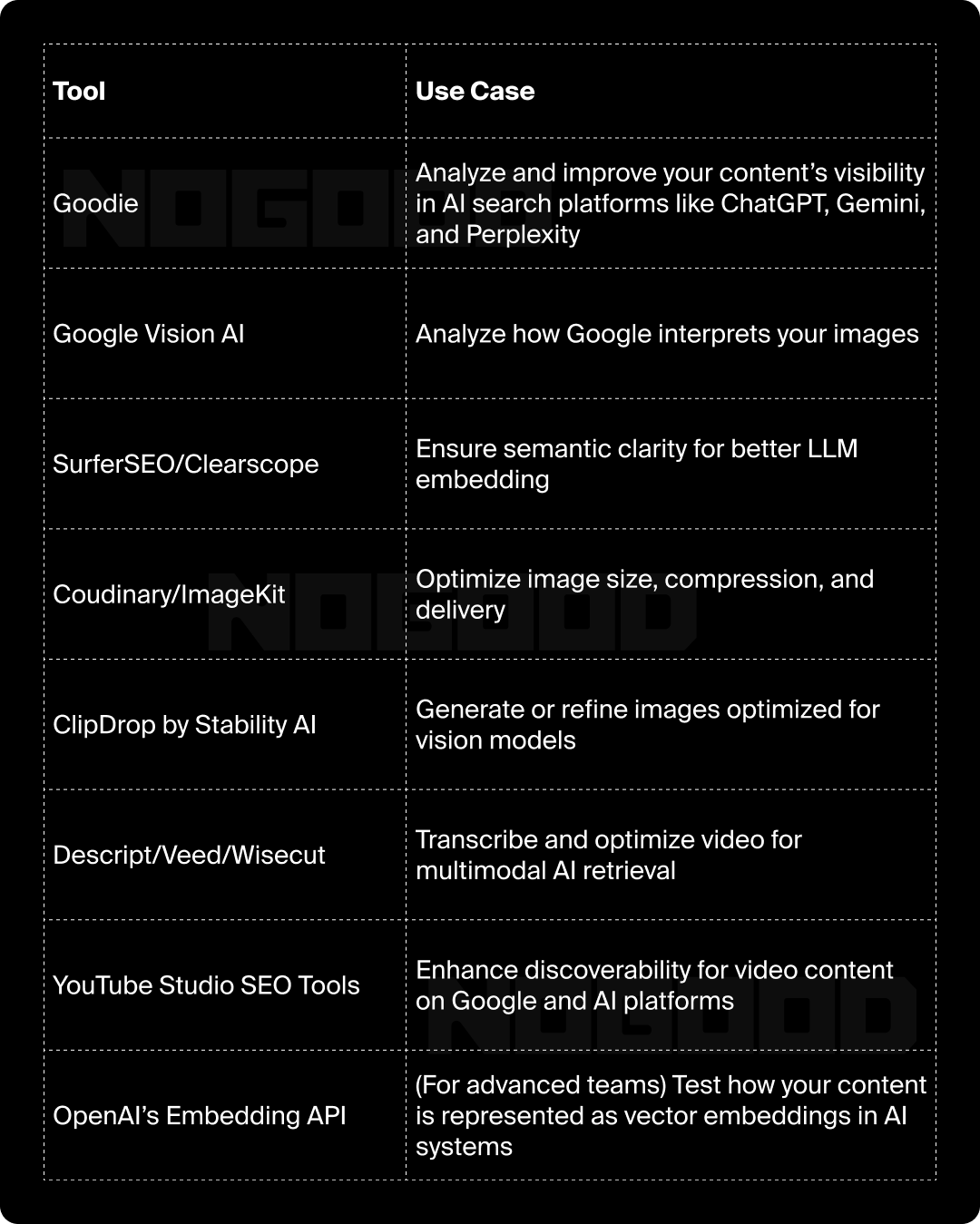

Multimodal Optimization Tools

Check out these tools that help marketers prepare their content for success in a multimodal search environment:

TL;DR: Focus Your Efforts Where AI Is Headed

Multimodal search marks a shift in how people discover and interact with information. By understanding the key platforms and tools now, you can create content that’s discoverable no matter how a user starts their search: by typing, snapping, speaking, or scrolling.

Building a Multimodal-Ready Content Strategy

Instead of thinking in formats (blog post, infographic, video), think in inputs (voice, vision, text) and contexts (what a user is doing, asking, or needing). To stay visible, your content strategy needs to evolve from single-mode publishing to multimodal, modular storytelling.

Here’s how to build a future-proof strategy:

1. Prioritize Media-Rich Formats

Search isn’t text-only. Screenshots, product photos, charts, and how-to videos can now become the query. That means your content should be:

- Image-First: Use labeled diagrams, step-by-step screenshots, and original graphics with clear alt text

- Video-Native: Include short explainers, demos, and tutorials that surface in AI-powered video recommendations (like YouTube, Perplexity, or TikTok search)

- Visually Intentional: Design your visuals not just to illustrate, but to be discovered

Think of every image or video you publish as a possible search result.

2. Write for Voice, Design for Vision

Multimodal search means different modes of query, and your content should be legible across them all:

- Voice: Use conversational headings and FAQs that sound natural when read aloud

- Vision: Include visual cues like overlays, captions, and context in your images and videos

- Text: Maintain strong semantic structure using clear subheadings, bullets, and schema markup to support LLMs

3. Think Modular, Not Just Longform

Rather than relying on a single blog post to rank, break your content into modular, AI-friendly blocks:

- Key takeaways that can stand alone

- Summarized steps or feature breakdowns

- Visuals that make sense out of context

This supports how LLMs extract and repackage content for AI Overviews, chat interfaces, and voice assistants.

4. Plan for AI-Driven Discovery Across Channels

AI search is fragmented, and your content might show up in:

- Google’s AI Overview (text + image)

- Perplexity (source citations + visual cards)

- TikTok’s search tab (video clips with transcripts)

- ChatGPT or Gemini (chat-summarized responses)

Your strategy should map content types to where and how they might appear. That means:

- Video that’s optimized for vertical search

- Annotated screenshots that explain product UI

- FAQs and summaries built for instant answers

5. Align With the Multimodal Funnel

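Use multimodal content to support every stage of the funnel:

- Awareness: visual-first content and conversational, FAQ-style answers

- Consideration: multiformat comparison hubs backed by Review, Product, and HowTo schema

- Conversion: up-to-date product imagery, availability, and location details, plus clear, action-focused CTAs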

By building a multimodal-ready strategy, you’re not just keeping up with search innovation; you’re building durable visibility across platforms, surfaces, and customer journeys. Think in layers, optimize for inputs, and let your content do more than just sit on a page.

The Future of Multimodal Search

Multimodal search is a foundation for how people will interact with information going forward. As generative AI, computer vision, and natural language processing continue to converge, search is less about keywords and more about contextual understanding. Here’s what that means for the road ahead:

From Queries to Moments

In the future, users won’t need to know what to type; they’ll just show, ask, or point. Whether it’s a photo of a broken part, a voice question mid-task, or a scribbled note, search engines will increasingly interpret multimodal moments in real time.

Implication for marketers: Start creating content that anticipates the user’s situation, not just their query. Build resources that are helpful when someone is mid-project, hands-free, or visual-first.

Search Gets More Conversational & Visual

Chat interfaces, image inputs, and dynamic results are becoming standard. Search is shifting from “10 blue links” to AI response engines that synthesize answers from various inputs.

Implication: Your content must be optimized for extraction and summarization, not just ranking. Think schema markup, tightly structured sections, visual aids, and strong citations.

LLMs Will Power More of the Experience

Search engines are increasingly relying on large language models (LLMs) and multimodal foundation models (like GPT-4o, Gemini, and Claude) to deliver answers. These models don’t just parse content; they interpret and generate it.

Implication: The more semantically rich, structured, and helpful your content is, the more likely it is to be used as a trusted source in AI outputs.

Cross-Platform Search Will Be Seamless

Google, TikTok, Perplexity, Pinterest, Amazon, and ChatGPT will all evolve their own multimodal ecosystems, but users won’t think about them as separate tools. They’ll expect the same smart, intuitive results everywhere.

Implication: Your content needs to travel. Repurpose visuals, rewrite summaries, and design for channel-specific discovery (YouTube vs. Perplexity vs. Google Lens).

Brands Will Compete on Intent Matching

As AI gets better at interpreting visual, vocal, and contextual inputs, the winners in search won’t just be the most authoritative; they’ll be the most relevant in the moment.

Implication: Investing in Answer Engine Optimization (AEO), visual SEO, and intent-driven UX will become essential to showing up in multimodal environments.

Winning in a Multimodal Search World

The rise of multimodal search marks a fundamental shift in how people discover, learn, and buy online. For marketers, this isn’t just an SEO evolution; it’s a strategic inflection point.

To stay relevant, your content needs to be:

- Visually rich

- Contextually helpful

- Structured for AI understanding

- Designed for intent, not just keywords

The brands that will win in this new landscape are those that meet users where they are, whether that’s in a voice command, a camera roll, or a chatbot window.

The future of search is fluid, visual, and voice-activated. Don’t just optimize for it; design your entire strategy around it.